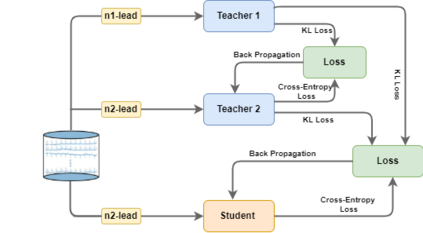

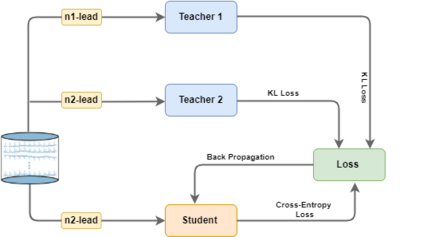

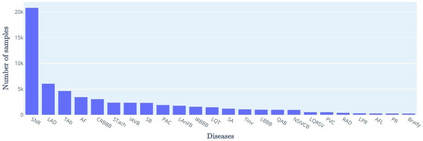

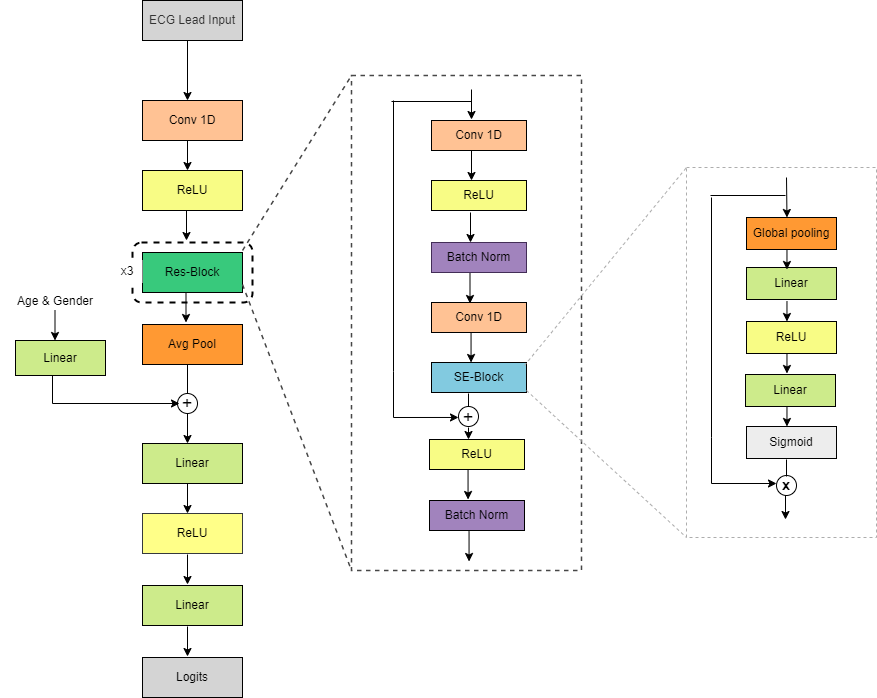

An electrocardiogram (ECG) monitors the electrical activity generated by the heart and is used to detect fatal cardiovascular diseases (CVDs). Conventionally, clinical experts use multiple-lead ECGs (typically 12 leads) to capture this electrical activity precisely. More recently, large deep learning models have been used to detect these diseases. However, such models demand substantial compute resources, including large memory and long inference times. To alleviate these shortcomings, we propose a low-parameter model, named Low Resource Heart-Network (LRH-Net), which uses fewer leads to detect ECG anomalies in a resource-constrained environment. On top of that, a multi-level knowledge distillation process is used to improve the generalization performance of the proposed model. This process distills knowledge from higher-parameter (teacher) models trained on multiple leads into LRH-Net, which is trained on a reduced number of leads, in order to narrow the performance gap. The proposed model is evaluated on the PhysioNet-2020 challenge dataset with constrained input. LRH-Net has 106x fewer parameters than our teacher model for detecting CVDs. Compared to the teacher model, the performance of LRH-Net improved by up to 3.2% and its inference time dropped by 75%. In contrast to compute- and parameter-intensive deep learning techniques, the proposed methodology uses a subset of ECG leads with the low-resource LRH-Net, making it eminently suitable for deployment on edge devices.
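To make the distillation idea concrete, here is a minimal sketch of a single teacher-to-student distillation step. It is not the loss used for LRH-Net, which the abstract does not specify. It assumes the common temperature-softened formulation and, for simplicity, a single-label softmax output. The names `softmax`, `distillation_loss`, `temperature`, and `alpha` are hypothetical, chosen only for illustration.

```python
import math

def softmax(logits, temperature=1.0):
    """Numerically stable softmax over temperature-scaled logits."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)
    exps = [math.exp(z - peak) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def distillation_loss(student_logits, teacher_logits, label,
                      temperature=2.0, alpha=0.5):
    """Weighted sum of a soft-target term and a hard-label term.

    Assumption: the teacher saw all leads and the student saw a subset,
    but both emit logits over the same classes.
    """
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    # KL(teacher || student) on the softened distributions.
    kl = sum(pt * math.log(pt / ps)
             for pt, ps in zip(p_teacher, p_student) if pt > 0)
    # Standard cross-entropy of the student against the true label.
    ce = -math.log(softmax(student_logits)[label])
    # The temperature**2 factor keeps the soft-term gradient scale
    # comparable across temperatures.
    return alpha * temperature ** 2 * kl + (1 - alpha) * ce
```

Under these assumptions, a multi-level scheme could apply the same loss in stages, for example from a large multi-lead teacher to an intermediate model and then to the reduced-lead student. The exact staging used for LRH-Net is not described in the abstract.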
