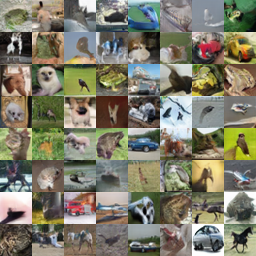

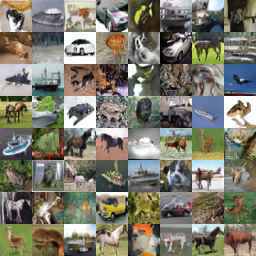

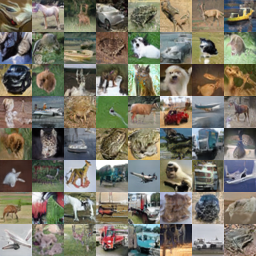

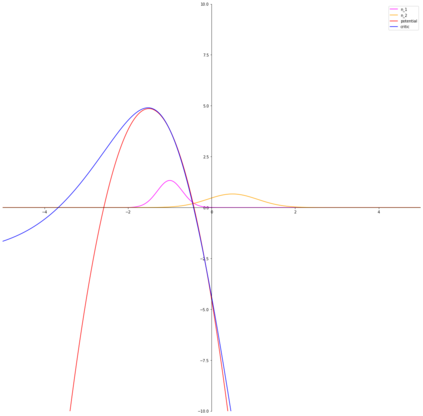

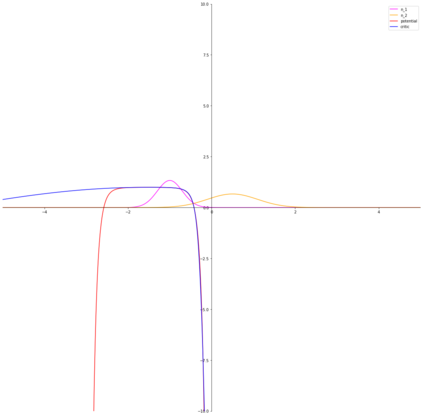

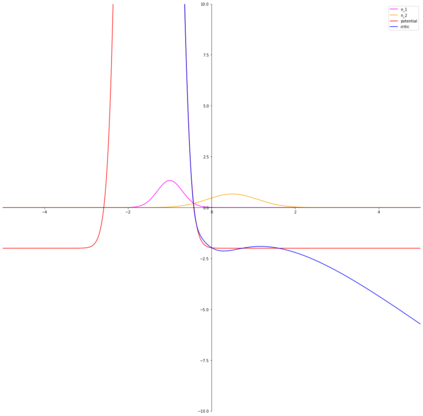

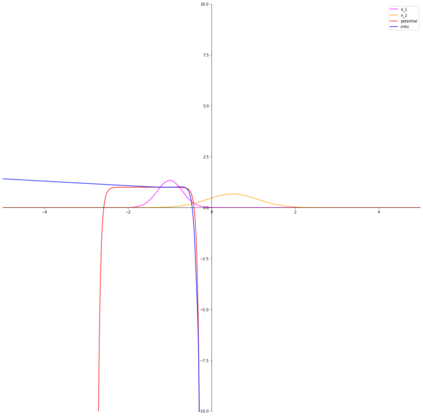

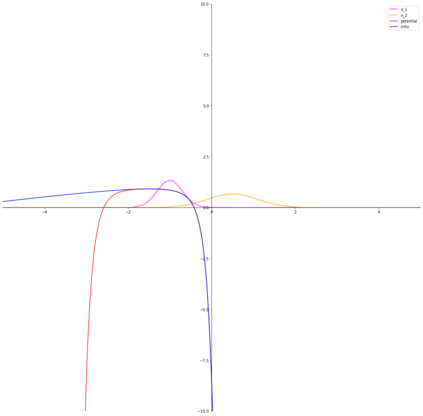

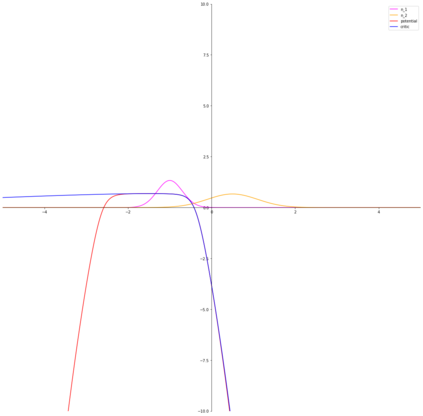

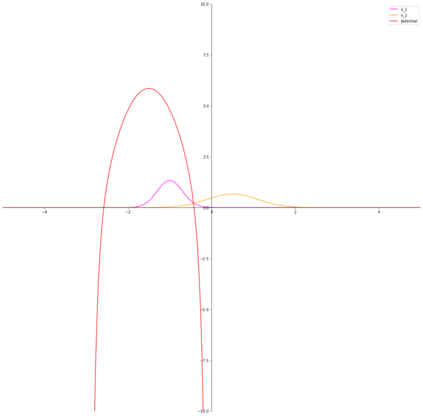

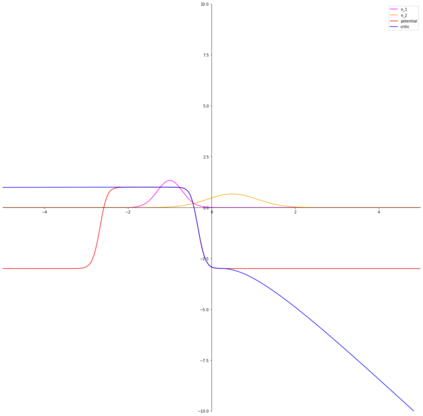

Variational representations of $f$-divergences are central to many machine learning algorithms, with Lipschitz-constrained variants recently gaining attention. Inspired by this, we define the Moreau-Yosida approximation of $f$-divergences with respect to the Wasserstein-$1$ metric. The corresponding variational formulas provide a generalization of a number of recent results, novel special cases of interest, and a relaxation of the hard Lipschitz constraint. Additionally, we prove that the so-called tight variational representation of $f$-divergences can be taken over the quotient space of Lipschitz functions, and we give a characterization of the functions achieving the supremum in the variational representation. On the practical side, we propose an algorithm, compatible with automatic differentiation frameworks, to calculate the tight convex conjugate of $f$-divergences. As an application of our results, we propose the Moreau-Yosida $f$-GAN, providing an implementation of the variational formulas for the Kullback-Leibler, reverse Kullback-Leibler, $\chi^2$, reverse $\chi^2$, squared Hellinger, Jensen-Shannon, Jeffreys, triangular discrimination and total variation divergences as GANs trained on CIFAR-10. This leads to competitive results and a simple solution to the problem of uniqueness of the optimal critic.
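For a concrete picture of the objects above, recall the standard variational representation $D_f(P\|Q) = \sup_g \{\mathbb{E}_P[g] - \mathbb{E}_Q[f^*(g)]\}$. We assume here that the tight form replaces $\mathbb{E}_Q[f^*(g)]$ with $\inf_{\lambda \in \mathbb{R}} \{\mathbb{E}_Q[f^*(g+\lambda)] - \lambda\}$. This objective is unchanged when a constant is added to $g$, which is why it can be posed over functions modulo constants. The Python sketch below checks this numerically for the Kullback-Leibler divergence ($f(u) = u\log u$, $f^*(t) = e^{t-1}$) on small discrete distributions. It is not the authors' algorithm for the tight conjugate. As a stand-in, the inner minimization over $\lambda$ is done by plain bisection on its monotone derivative, and all function names are hypothetical.

```python
import math

# Hypothetical helper names; KL generator f(u) = u log u is assumed throughout.

def kl_divergence(p, q):
    """Reference value: KL(P||Q) = sum_i p_i log(p_i / q_i) for discrete P, Q."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)

def f_star(t):
    """Convex conjugate of f(u) = u log u."""
    return math.exp(t - 1.0)

def f_star_prime(t):
    """Derivative of the conjugate (equal to f_star for this generator)."""
    return math.exp(t - 1.0)

def expect(weights, values):
    """Expectation of a function given as a list of values on a finite space."""
    return sum(w * v for w, v in zip(weights, values))

def naive_objective(g, p, q):
    """Standard variational objective E_P[g] - E_Q[f*(g)]."""
    return expect(p, g) - expect(q, [f_star(x) for x in g])

def tight_conjugate(g, q, lo=-50.0, hi=50.0, iters=200):
    """inf over lam of E_Q[f*(g + lam)] - lam, found by bisection.

    The derivative E_Q[f*'(g + lam)] - 1 is nondecreasing in lam because f*
    is convex, so its root is the minimizer (assumed to lie in [lo, hi]).
    """
    def grad(lam):
        return expect(q, [f_star_prime(x + lam) for x in g]) - 1.0

    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if grad(mid) > 0:
            hi = mid  # minimizer lies to the left of mid
        else:
            lo = mid  # minimizer lies to the right of mid
    lam = 0.5 * (lo + hi)
    return expect(q, [f_star(x + lam) for x in g]) - lam

def tight_objective(g, p, q):
    """Tight objective E_P[g] - inf_lam {E_Q[f*(g + lam)] - lam}; shift-invariant in g."""
    return expect(p, g) - tight_conjugate(g, q)

if __name__ == "__main__":
    p = [0.5, 0.3, 0.2]
    q = [0.2, 0.3, 0.5]
    # Optimal critic for the standard KL objective: g* = 1 + log(p/q).
    g_opt = [1.0 + math.log(a / b) for a, b in zip(p, q)]
    g_shift = [x + 3.0 for x in g_opt]
    print("KL reference:         ", kl_divergence(p, q))
    print("standard, g*:         ", naive_objective(g_opt, p, q))
    print("standard, g* + 3:     ", naive_objective(g_shift, p, q))
    print("tight,    g* + 3:     ", tight_objective(g_shift, p, q))
```

Shifting the optimal critic $1 + \log(p/q)$ by a constant sharply lowers the standard objective but leaves the tight one at the KL value. For KL, the tight objective reduces to the Donsker-Varadhan form $\mathbb{E}_P[g] - \log \mathbb{E}_Q[e^g]$. Bisection was chosen only for transparency, and it assumes the minimizing $\lambda$ lies in the bracket `[lo, hi]`.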

翻译:以美元计息的变式表示,是许多机器学习算法的核心,而利普申茨受限制的变式最近日益受到注意。受此启发,我们定义了与瓦塞斯坦-1美元衡量标准有关的莫罗-约西达近似美元-美元差价。相应的变式公式提供了一系列近期结果的概括性,新的特殊感兴趣案例,并放宽了利普申茨限制。此外,我们证明所谓的“独特变式”的美元差价代表制可以超过利普申茨功能的商基空间,并给出了在变式代表中实现等值的功能的定性。在实际方面,我们提出一种算法,用以计算与自动差异框架兼容的美元差价的紧连锁。为了应用我们的结果,我们建议用“莫罗-Yosida $-GAN”, 将简单易利普利伯克-利伯尔、高端-直径克-直径、高端-直径、高端-正-直径、高端-直径-直径、高端-直径、高端-直径、高端-直径、高端-直径-直方-直方-直方-正-直方-直方-直方-直方-直方-直方-直方-直-直-直-直-直-直-直方-直方-直方-直方-直方-直-直方-直方-直方-直方-直方-直方-直方-直方-直方-直方-直方-直方-直方-直方-直方-直方-直方-直方-直方-直-直-直-直-直-直-直-直-直-直-直-直-直-直-直-直-直-直-直-直-直-直-直-直-直-直-直-直-直-直-直-直-直-直-直-直-直-直-直-直-直-直-直-直-直-直-直-直-直-直-直-直-直-直-直-直-直-直-直-直-直-直-直-直-直-直-直-直-直-