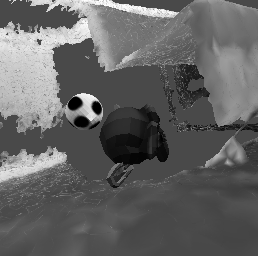

The broad scope of obstacle avoidance has led to many kinds of computer vision-based approaches. Despite its popularity, it is not a solved problem. Traditional computer vision techniques using cameras and depth sensors often focus on static scenes, or rely on priors about the obstacles. Recent developments in bio-inspired sensors present event cameras as a compelling choice for dynamic scenes. Although these sensors have many advantages over their frame-based counterparts, such as high dynamic range and temporal resolution, event-based perception has largely remained in 2D. This often leads to solutions reliant on heuristics and specific to a particular task. We show that the fusion of events and depth overcomes the failure cases of each individual modality when performing obstacle avoidance. Our proposed approach unifies event camera and lidar streams to estimate metric time-to-impact without prior knowledge of the scene geometry or obstacles. In addition, we release an extensive event-based dataset with six visual streams spanning over 700 scanned scenes.

翻译:避免障碍的广泛范围导致了多种基于计算机的视觉方法。尽管受到欢迎,但这不是一个解决的问题。使用照相机和深度传感器的传统计算机视觉技术往往侧重于静态场景,或依赖于对障碍的事先认识。生物激励传感器的最近发展将事件摄像机作为动态场景的令人信服的选择。虽然这些传感器比基于框架的对等系统具有许多优势,例如动态范围大和时间分辨率高,但基于事件的看法大部分仍留在2D中。这往往导致依赖超常和特定任务的具体解决方案。我们表明,事件和深度的结合克服了在避免障碍时每一种方式的失败案例。我们提议的方法将事件摄像机和岩浆流统一起来,以便在不事先了解现场几何或障碍的情况下估计时间到影响。此外,我们发布了一个基于事件的广泛数据集,有六个视觉流,覆盖700多个扫描场。

相关内容

- Today (iOS and OS X): widgets for the Today view of Notification Center

- Share (iOS and OS X): post content to web services or share content with others

- Actions (iOS and OS X): app extensions to view or manipulate inside another app

- Photo Editing (iOS): edit a photo or video in Apple's Photos app with extensions from a third-party apps

- Finder Sync (OS X): remote file storage in the Finder with support for Finder content annotation

- Storage Provider (iOS): an interface between files inside an app and other apps on a user's device

- Custom Keyboard (iOS): system-wide alternative keyboards

Source: iOS 8 Extensions: Apple’s Plan for a Powerful App Ecosystem