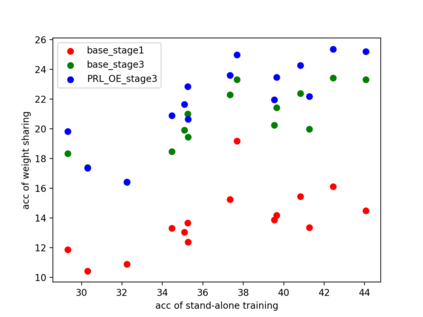

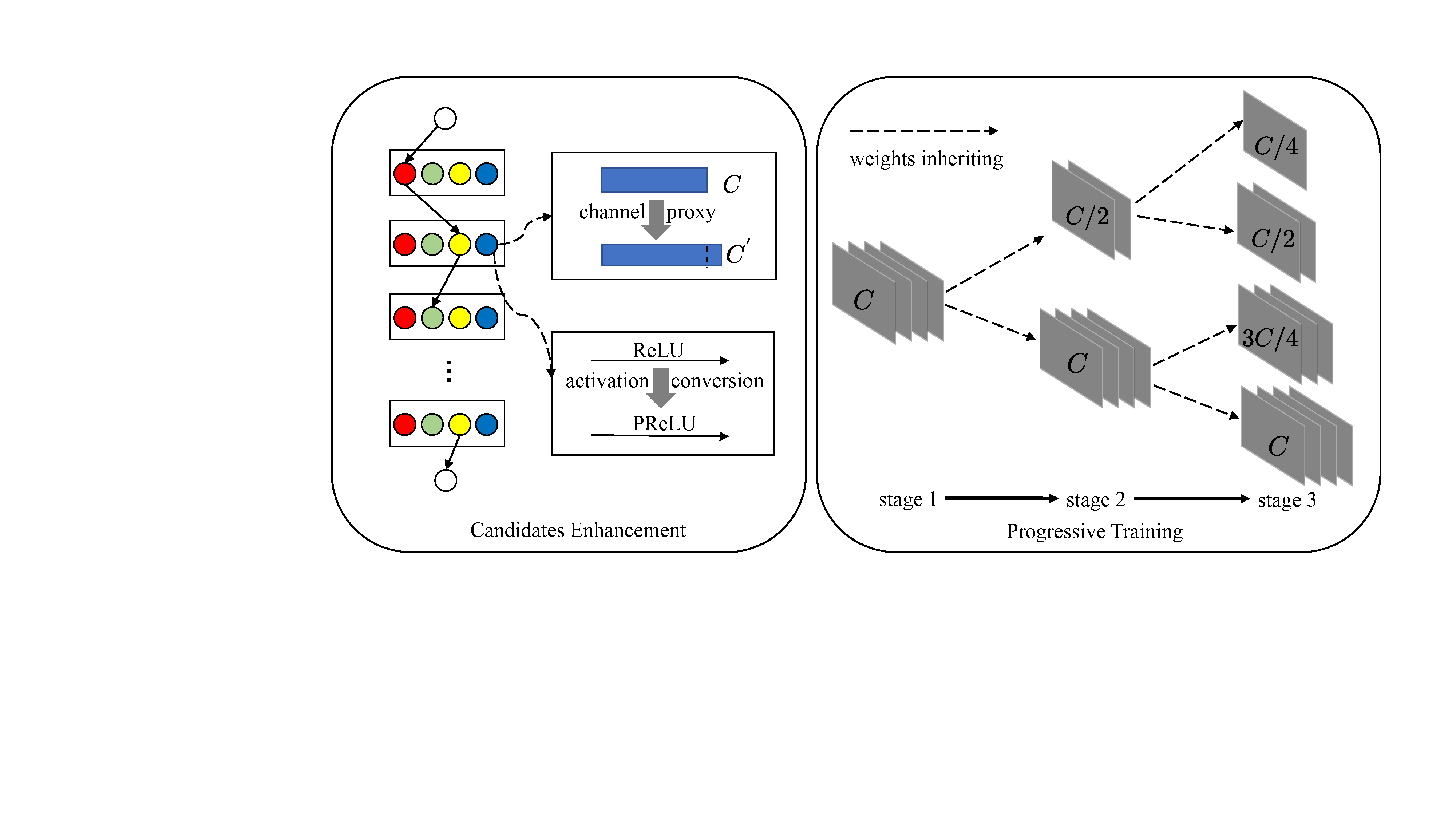

One-shot neural architecture search (NAS) uses a weight-sharing supernet to reduce the prohibitive computational cost of automated architecture design. However, weight sharing degrades the ranking consistency of candidate performance because the candidate networks interfere with one another. To address this issue, we propose a candidate enhancement method and a progressive training pipeline that improve the ranking correlation of the supernet. Specifically, we carefully redesign the sub-networks in the supernet and map the original supernet to a new one with higher capacity. In addition, we gradually add the narrow branches of the supernet to reduce the degree of weight sharing, which effectively alleviates the mutual interference between sub-networks. Our method ranked first in the Supernet Track of the CVPR2021 1st Lightweight NAS Challenge.
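To make "ranking correlation" concrete, the following is a minimal sketch of one common way to quantify it: Kendall's tau between the accuracies that sub-networks obtain with inherited supernet weights and the accuracies they reach when trained from scratch. The abstract does not say which metric is used, so the choice of Kendall's tau, the function name `kendall_tau`, and the accuracy values are all illustrative assumptions.

```python
from itertools import combinations


def kendall_tau(xs, ys):
    """Kendall tau-a: (concordant - discordant) / total number of pairs.

    Assumption: this metric is chosen for illustration only; the source
    does not specify how ranking correlation is measured.
    """
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two equal-length sequences with at least 2 items")
    concordant = discordant = 0
    for (x1, y1), (x2, y2) in combinations(zip(xs, ys), 2):
        sign = (x1 - x2) * (y1 - y2)
        if sign > 0:
            concordant += 1  # both scores order this pair the same way
        elif sign < 0:
            discordant += 1  # the two scores disagree on this pair
    n_pairs = len(xs) * (len(xs) - 1) // 2
    return (concordant - discordant) / n_pairs


# Hypothetical accuracies for four candidate sub-networks.
supernet_acc = [0.61, 0.58, 0.66, 0.70]    # evaluated with shared supernet weights
standalone_acc = [0.72, 0.74, 0.75, 0.79]  # trained independently from scratch

# 5 concordant pairs and 1 discordant pair out of 6, so tau = 4/6.
tau = kendall_tau(supernet_acc, standalone_acc)
print(f"Kendall tau: {tau:.3f}")
```

A value near 1 means the supernet orders candidates almost exactly as standalone training would, while a value near 0 means the supernet ranking carries little information about true performance.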
