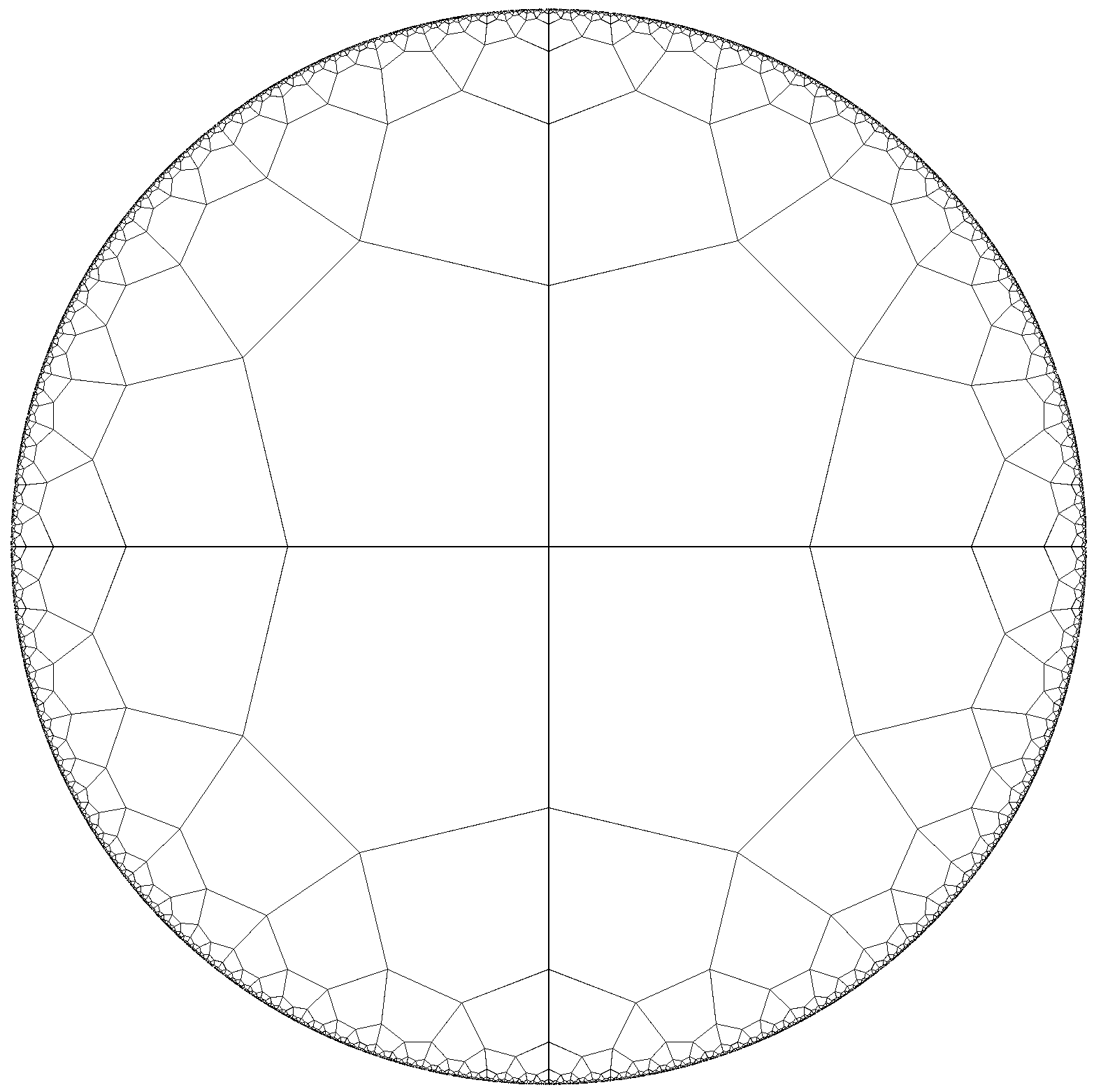

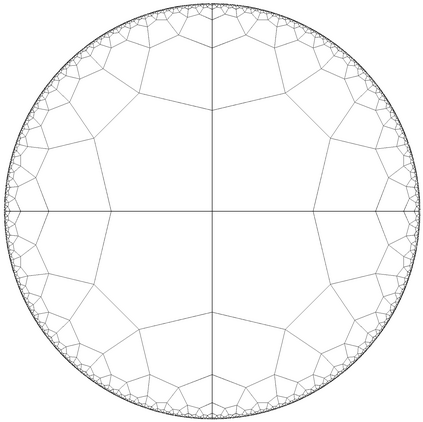

In recent years there has been significant effort to adapt the key tools and ideas of convex optimization to the Riemannian setting. One key challenge has remained: is there a Nesterov-like accelerated gradient method for geodesically convex functions on a Riemannian manifold? Recent work has given partial answers, and the hope was that such a method ought to exist. Here we dash these hopes. We prove that in a noisy setting, there is no analogue of accelerated gradient descent for geodesically convex functions on the hyperbolic plane. Our results apply even when the noise is exponentially small. The key intuition behind our proof is short and simple: in negatively curved spaces, the volume of a ball grows so fast that information about past gradients is not useful in the future.
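
To make the volume-growth intuition concrete, the sketch below compares the area of a disk of radius r in the Euclidean plane, πr², with the area of a geodesic disk in the hyperbolic plane, 2π(cosh r − 1). It assumes the hyperbolic plane is normalized to constant curvature −1. This is an illustration of the standard area formulas and is not code from the paper. The function names are hypothetical.

```python
import math


def euclidean_disk_area(r: float) -> float:
    """Area of a disk of radius r in the Euclidean plane: pi * r^2."""
    return math.pi * r * r


def hyperbolic_disk_area(r: float) -> float:
    """Area of a geodesic disk of radius r in the hyperbolic plane.

    Assumes constant curvature -1, where the area is 2*pi*(cosh(r) - 1),
    equivalently 4*pi*sinh(r/2)^2. It grows like pi * e^r for large r.
    """
    return 2.0 * math.pi * (math.cosh(r) - 1.0)


if __name__ == "__main__":
    # For small r the two areas agree; for large r the hyperbolic area
    # grows exponentially while the Euclidean area grows only quadratically.
    for r in (1, 2, 5, 10, 20):
        e = euclidean_disk_area(r)
        h = hyperbolic_disk_area(r)
        print(f"r={r:>2}  euclidean={e:12.4g}  hyperbolic={h:12.4g}  ratio={h / e:10.4g}")
```

Near the origin the ratio is close to 1. By r = 20 the hyperbolic disk is already more than a million times larger than its Euclidean counterpart. This gives a rough sense of why a point explored at one radius says little about the exponentially larger region just beyond it.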

翻译:近年来,人们作出了重大努力,使关键的工具和想法适应里曼尼环境。一个关键的挑战依然存在:在里曼多管上,是否有类似于内斯特罗夫(Nesterov)的加速梯度法,用于测地性二次曲线函数?最近的工作给出了部分答案,希望这是可能的。我们在这里破灭了这些希望。我们证明,在吵闹的环境下,超双曲线上的大地曲线函数没有加速梯度下降的类似物。我们的结果即使在噪音极小时也适用。我们证据背后的关键直觉是短而简单的:在负曲线的空隙中,球的体积迅速增长,以致关于过去梯度的信息在未来没有用处。