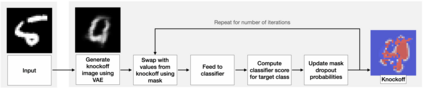

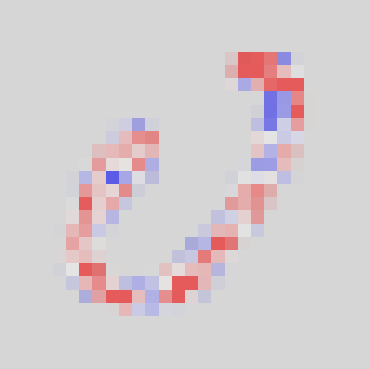

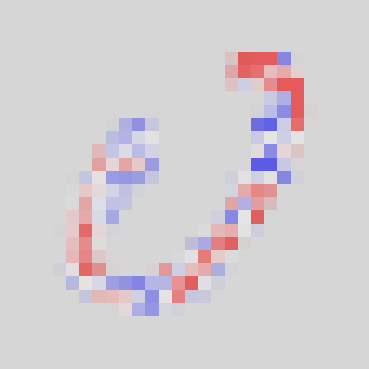

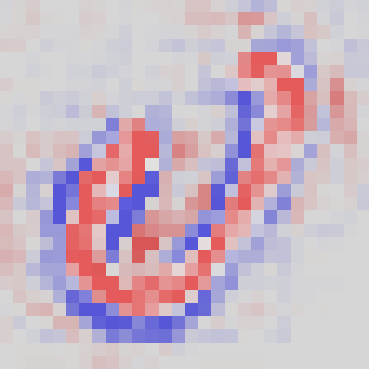

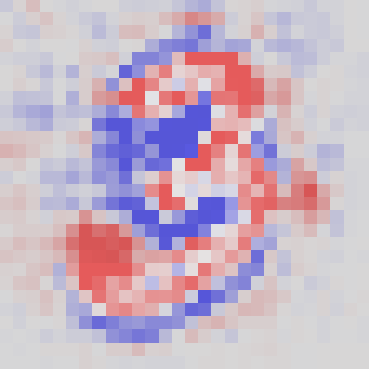

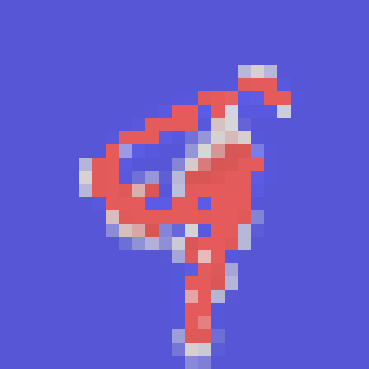

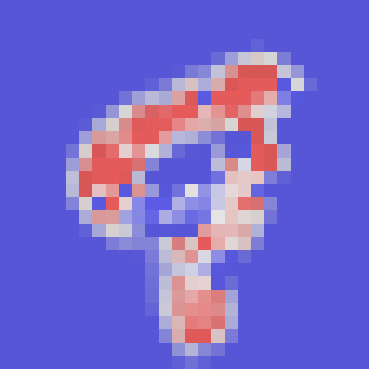

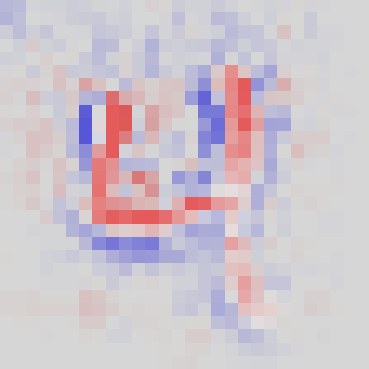

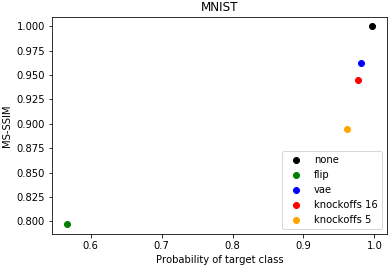

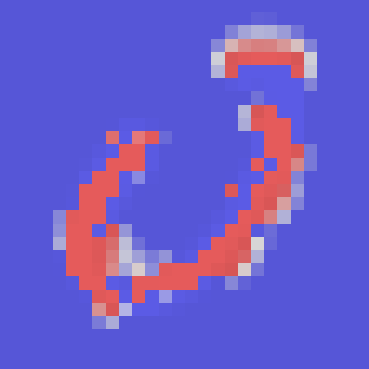

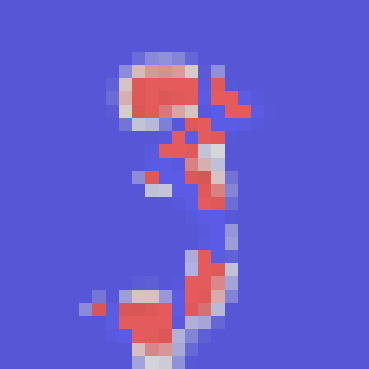

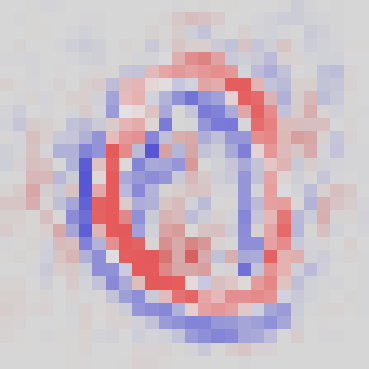

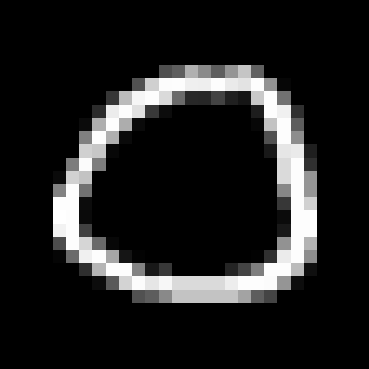

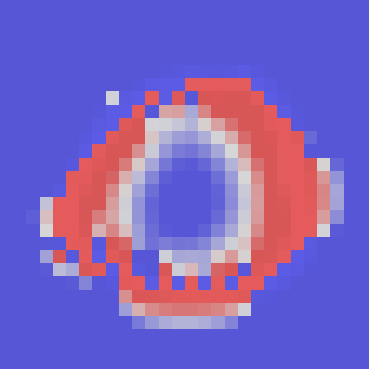

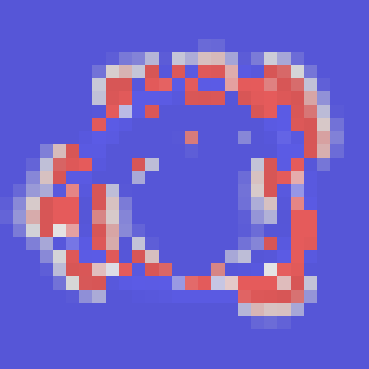

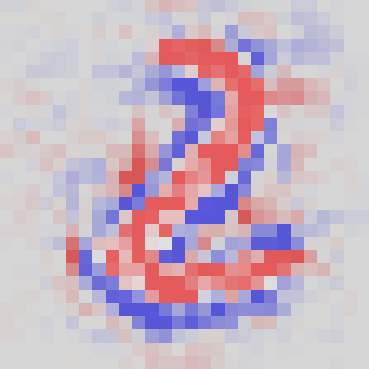

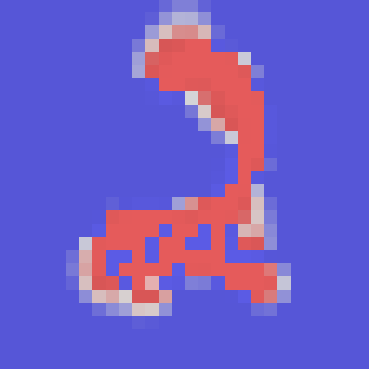

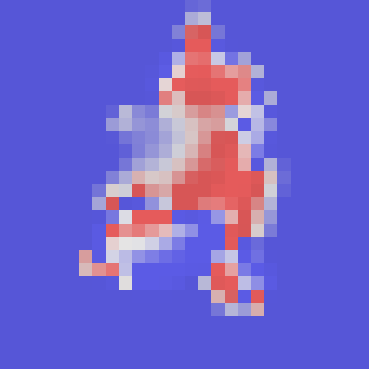

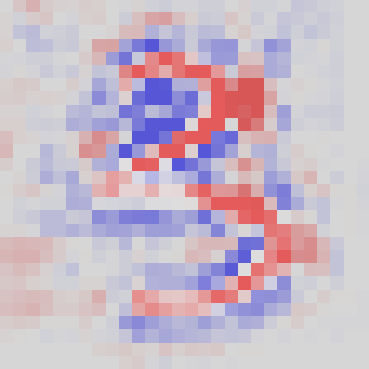

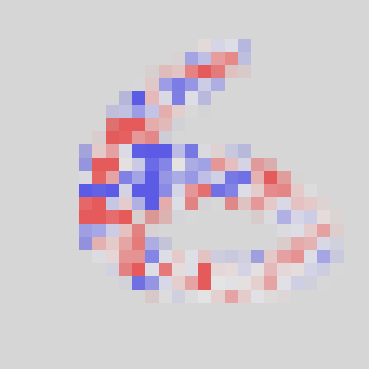

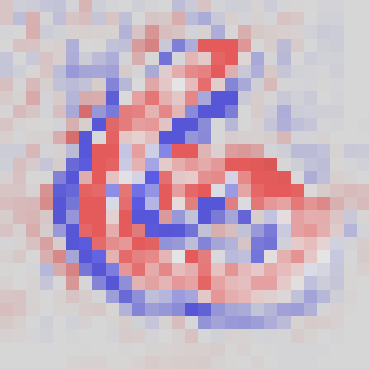

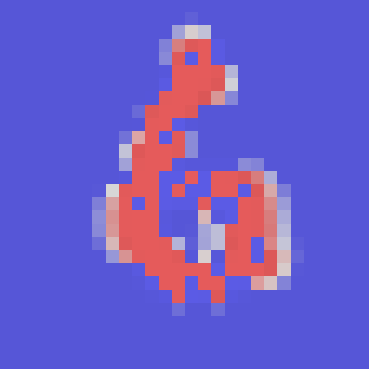

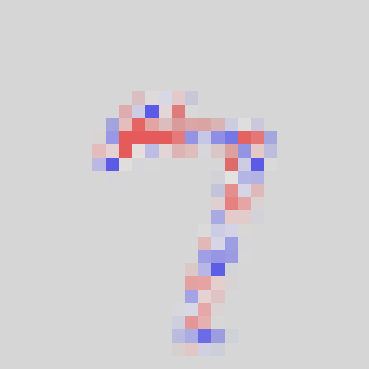

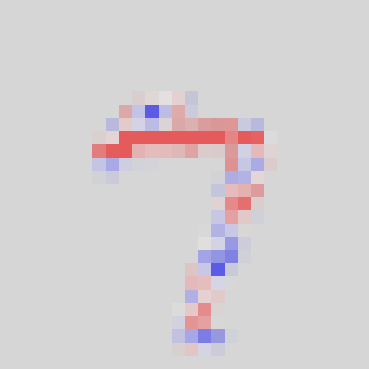

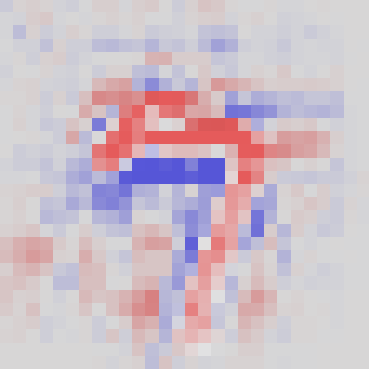

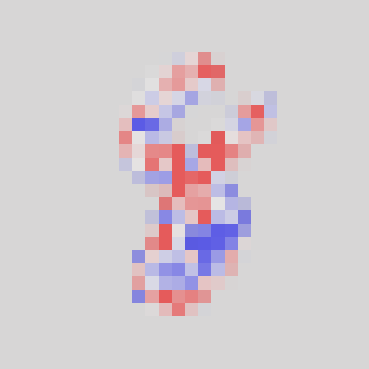

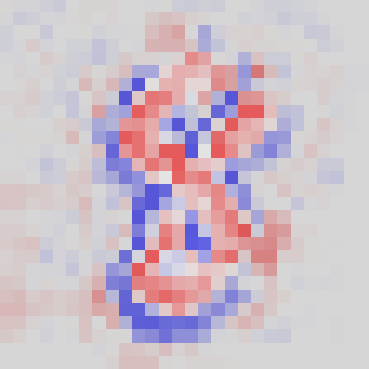

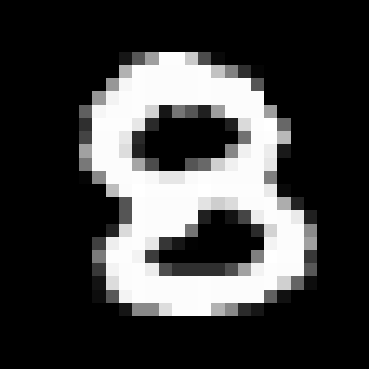

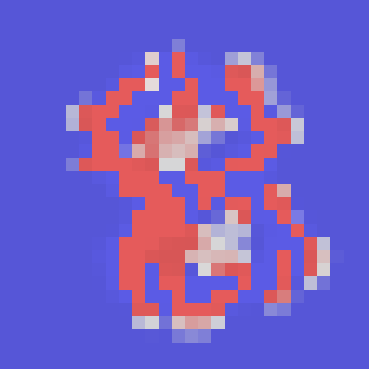

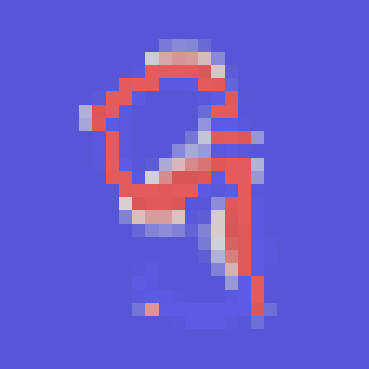

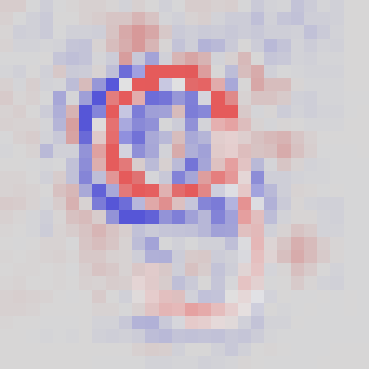

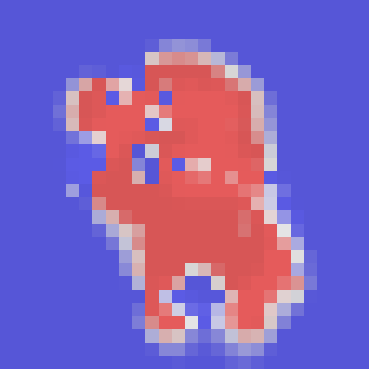

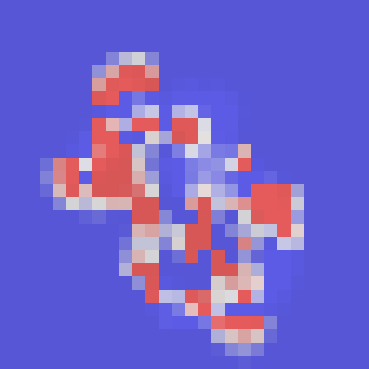

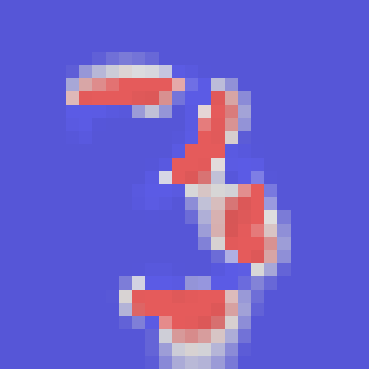

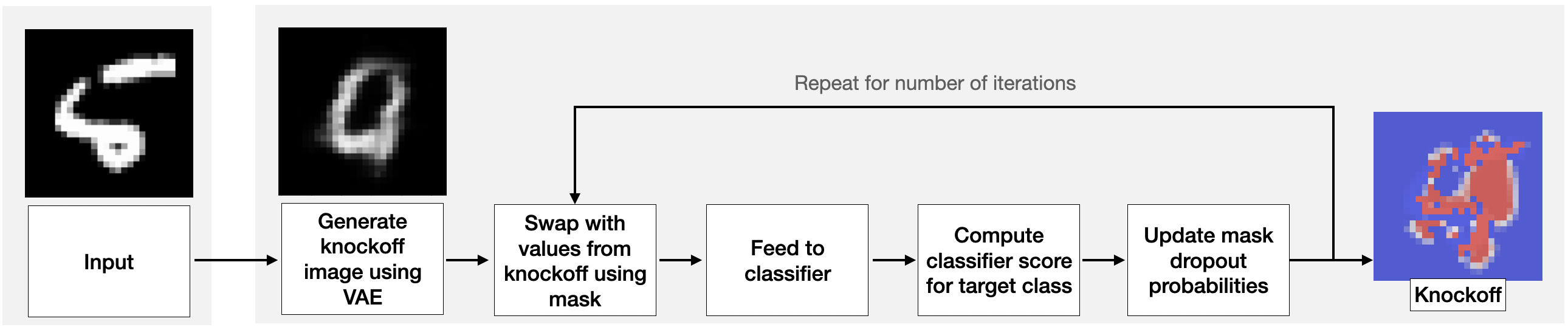

Human interpretability of deep neural networks' decisions is crucial, especially in domains where these decisions directly affect human lives. Counterfactual explanations of already trained neural networks can be generated by perturbing input features and attributing importance according to the change in the classifier's outcome after perturbation. Perturbation can be done by replacing features using heuristic or generative in-filling methods. The choice of in-filling function significantly affects the number of artifacts, i.e., false-positive attributions. Heuristic methods produce false-positive artifacts because the perturbed image lies far from the original data distribution. Generative in-filling methods reduce artifacts by producing in-filling values that respect the original data distribution. However, current generative in-filling methods may also increase false negatives, because the in-filling values are highly correlated with the original data. In this paper, we propose to alleviate this by generating in-fillings with the statistically grounded Knockoffs framework, which was developed by Barber and Candès in 2015 as a tool for variable selection with a controllable false discovery rate. Knockoffs are statistical null variables, as decorrelated as possible from the original data, that can be swapped with the originals without changing the underlying data distribution. A comparison of different in-filling methods indicates that in-filling with knockoffs can reveal explanations in a more causal sense while still maintaining the compactness of the explanations.
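
To make the perturb-and-attribute idea concrete, the following is a minimal sketch, not the paper's implementation. It assumes the input features are jointly Gaussian with known mean `mu` and covariance `Sigma`, and it uses the standard Gaussian (model-X, equicorrelated) knockoff construction as a stand-in for whatever knockoff generator the paper uses, which the abstract does not specify. The names `make_gaussian_knockoff_sampler`, `perturbation_attribution`, and `toy_model` are hypothetical and chosen only for illustration.

```python
import numpy as np


def make_gaussian_knockoff_sampler(mu, Sigma, rng, shrink=0.99):
    """Build a sampler of Gaussian knockoffs for x ~ N(mu, Sigma).

    ASSUMPTION: features are jointly Gaussian; this is an illustrative
    stand-in, not the generator used in the paper.
    """
    d = np.sqrt(np.diag(Sigma))
    corr = Sigma / np.outer(d, d)
    lam_min = np.linalg.eigvalsh(corr).min()
    # Equicorrelated choice of s, shrunk slightly so that the
    # conditional covariance below stays strictly positive definite.
    s = shrink * min(2.0 * lam_min, 1.0) * np.diag(Sigma)
    D = np.diag(s)
    Sigma_inv_D = np.linalg.solve(Sigma, D)       # Sigma^{-1} D
    cond_cov = 2.0 * D - D @ Sigma_inv_D          # 2D - D Sigma^{-1} D
    cond_cov = (cond_cov + cond_cov.T) / 2.0      # remove round-off asymmetry
    L = np.linalg.cholesky(cond_cov)

    def sample(X):
        # Works for a single vector of shape (p,) or a batch of shape (n, p).
        X = np.asarray(X, dtype=float)
        cond_mean = X - (X - mu) @ Sigma_inv_D
        noise = rng.standard_normal(X.shape) @ L.T
        return cond_mean + noise

    return sample, s


def perturbation_attribution(model, x, infill, n_samples=1):
    """Score each feature by the average drop in model output after in-filling it."""
    base = model(x)
    scores = np.zeros(len(x))
    for j in range(len(x)):
        drops = []
        for _ in range(n_samples):
            x_pert = x.copy()
            x_pert[j] = infill(x)[j]              # replace only feature j
            drops.append(base - model(x_pert))
        scores[j] = np.mean(drops)
    return scores


def toy_model(x):
    # Hypothetical classifier score that depends on feature 0 only.
    return 1.0 / (1.0 + np.exp(-3.0 * x[0]))


rng = np.random.default_rng(0)
mu = np.zeros(2)
Sigma = np.array([[1.0, 0.8], [0.8, 1.0]])        # two strongly correlated features
knockoff_infill, s = make_gaussian_knockoff_sampler(mu, Sigma, rng)

x = np.array([1.0, 0.9])
print("zero in-fill:    ", perturbation_attribution(toy_model, x, lambda v: np.zeros_like(v)))
print("knockoff in-fill:", perturbation_attribution(toy_model, x, knockoff_infill, n_samples=200))
```

The in-filling function is passed in as a parameter so that a heuristic in-fill (here, zeros) and a knockoff in-fill can be compared against the same model. In this Gaussian construction the knockoffs have the same covariance as the originals, while the covariance between each feature and its own knockoff is reduced by `s`, which is the decorrelation property the abstract refers to.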
