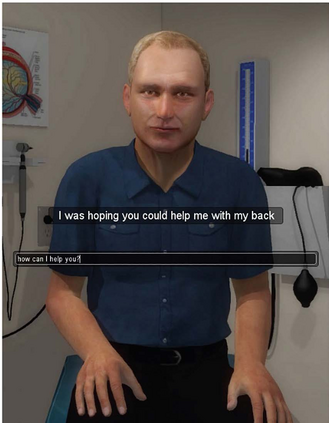

A Virtual Patient (VP) is a powerful tool for training medical students to take patient histories. To simulate a natural conversation with a student, the VP must respond to a diverse set of spoken questions. The performance of the underlying Spoken Language Understanding (SLU) system can be adversely affected both by Automatic Speech Recognition (ASR) errors in the test data and by a high degree of class imbalance in the SLU training data. While these two issues have been addressed separately in prior work, we develop a novel two-step training methodology that tackles both effectively in a single dialog agent. Because it is difficult to collect spoken data from users without a functioning SLU system, our method does not rely on spoken data for training; instead, we use an ASR error predictor to "speechify" the text data. Our method shows significant improvements over strong baselines on the VP intent classification task at various word error rate settings.
