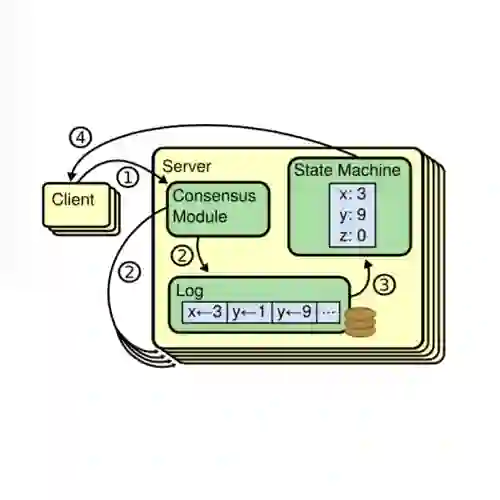

Generating realistic and diverse LiDAR point clouds is crucial for autonomous driving simulation. Although previous methods can generate LiDAR point clouds from user inputs, they struggle to attain high-quality results while enabling versatile controllability, because of the imbalance between the complex distribution of LiDAR point clouds and the simplicity of the control signals. To address this limitation, we propose LiDARDraft, which uses a 3D layout as a bridge between versatile conditional signals and LiDAR point clouds. The 3D layout can be readily generated from various user inputs, such as textual descriptions and images. Specifically, we represent text, images, and point clouds as unified 3D layouts, which are then transformed into semantic and depth control signals. We then employ a rangemap-based ControlNet to guide LiDAR point cloud generation. This pixel-level alignment approach demonstrates excellent performance in controllable LiDAR point cloud generation and enables "simulation from scratch": self-driving environments can be created from arbitrary textual descriptions, images, and sketches.
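To make the "semantic and depth control signals" more concrete, the following is a minimal sketch of one common way to turn labeled 3D points (for example, points sampled from a 3D layout) into pixel-aligned range maps via spherical projection. It is an illustration under stated assumptions, not the LiDARDraft implementation: the function name `layout_points_to_range_maps`, the 64 x 1024 resolution, the vertical field of view, and the maximum range are all hypothetical choices.

```python
import numpy as np


def layout_points_to_range_maps(points, labels, height=64, width=1024,
                                fov_up_deg=3.0, fov_down_deg=-25.0,
                                max_range=80.0):
    """Project labeled 3D points onto depth and semantic range maps.

    All parameters (resolution, field of view, max range) are assumed
    values for illustration; they are not taken from the paper.
    """
    points = np.asarray(points, dtype=np.float64)
    labels = np.asarray(labels, dtype=np.int64)

    # Distance of every point from the sensor origin.
    depth = np.linalg.norm(points, axis=1)
    valid = (depth > 1e-6) & (depth <= max_range)
    points, labels, depth = points[valid], labels[valid], depth[valid]

    # Spherical angles: azimuth (yaw) and elevation (pitch).
    yaw = np.arctan2(points[:, 1], points[:, 0])
    pitch = np.arcsin(points[:, 2] / depth)

    # Keep only points inside the assumed vertical field of view.
    fov_up = np.deg2rad(fov_up_deg)
    fov_down = np.deg2rad(fov_down_deg)
    in_fov = (pitch <= fov_up) & (pitch >= fov_down)
    yaw, pitch = yaw[in_fov], pitch[in_fov]
    labels, depth = labels[in_fov], depth[in_fov]

    # Map angles to pixel coordinates (row 0 is the top of the field of view).
    u = 0.5 * (1.0 - yaw / np.pi) * width
    v = (fov_up - pitch) / (fov_up - fov_down) * height
    cols = np.clip(np.floor(u), 0, width - 1).astype(np.int64)
    rows = np.clip(np.floor(v), 0, height - 1).astype(np.int64)

    # Empty pixels: depth 0 and semantic label -1.
    depth_map = np.zeros((height, width), dtype=np.float32)
    semantic_map = np.full((height, width), -1, dtype=np.int64)

    # Write far-to-near so the nearest point is written last and wins.
    order = np.argsort(-depth)
    depth_map[rows[order], cols[order]] = depth[order]
    semantic_map[rows[order], cols[order]] = labels[order]
    return depth_map, semantic_map


if __name__ == "__main__":
    # Two points along the same ray; the nearer one should occupy the pixel.
    pts = np.array([[10.0, 0.0, 0.0], [20.0, 0.0, 0.0]])
    lbl = np.array([1, 2])
    depth_map, semantic_map = layout_points_to_range_maps(pts, lbl)
    print(depth_map.shape, int((depth_map > 0).sum()))
```

Because both maps share the same pixel grid as the generated range image, they could in principle be stacked channel-wise and fed to a ControlNet-style conditioning branch; how LiDARDraft actually encodes and injects these signals is not specified in the abstract.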
