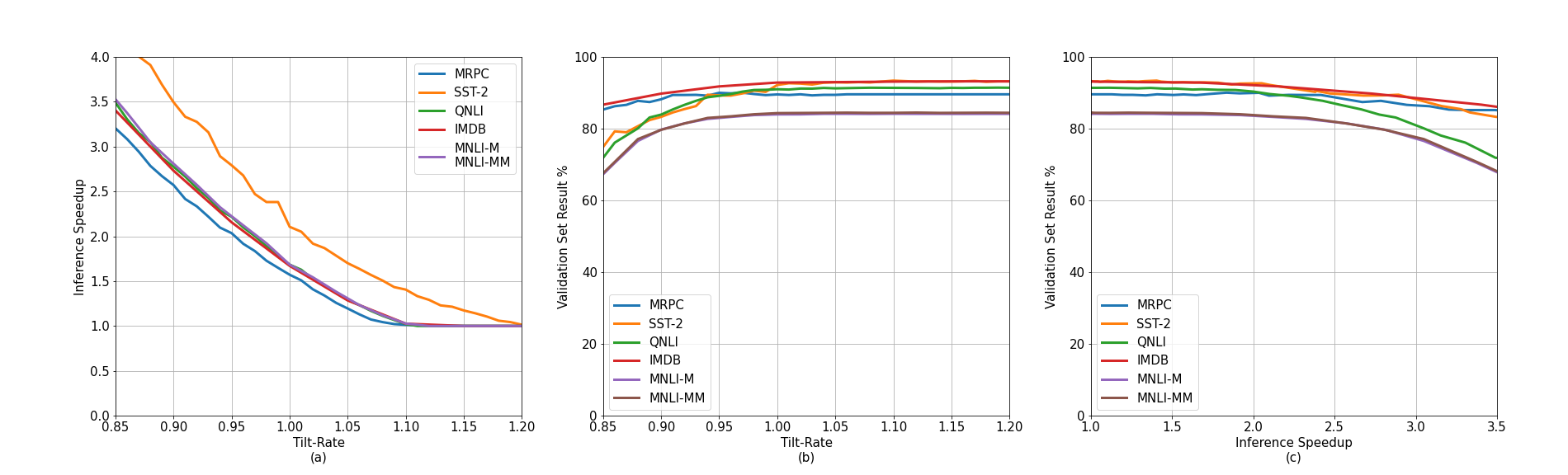

In this paper, a novel adjustable fine-tuning method is proposed that reduces the inference time of the BERT model on downstream tasks. The proposed method uses the proposed Attention Context Contribution (ACC) metric to detect the more important word vectors in each layer, and it eliminates the less important ones using the proposed elimination strategy. With the TiltedBERT method, the model learns to work with considerably fewer Floating Point Operations (FLOPs) than the original BERTbase model. The proposed method does not require training from scratch, and it can be generalized to other transformer-based models. Extensive experiments show that word vectors in higher layers contribute less, so they can be eliminated to improve inference time. Experimental results on a wide range of sentiment analysis, classification, and regression datasets, and on benchmarks such as IMDB and GLUE, show that TiltedBERT is effective across various datasets. TiltedBERT speeds up the inference of BERTbase by up to 4.8 times with an average accuracy drop of less than 0.75%. After fine-tuning, the offline-tuning property allows the inference time of the model to be adjusted for a wide range of Tilt-Rate selections. A mathematical speedup analysis is also proposed to estimate the speedup of TiltedBERT accurately. With the help of this analysis, a proper Tilt-Rate value can be selected before fine-tuning and during the offline-tuning phase.
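The abstract does not define the ACC metric, the Tilt-Rate, or the speedup formula, so the following is only a minimal sketch of the general idea (score word vectors with attention, drop the low scorers layer by layer, estimate the resulting speedup) under stated assumptions. All of the following are hypothetical stand-ins, not TiltedBERT's definitions: `token_importance` uses the mean attention a token receives as a proxy for ACC, `tilt_rate` is treated as the fraction of word vectors removed after each layer, [CLS] at position 0 is always kept, and each layer's cost is assumed proportional to the number of word vectors it processes.

```python
import math
from typing import List, Tuple

# attention[query][key]; each row is a softmax distribution summing to 1
Matrix = List[List[float]]


def token_importance(heads: List[Matrix]) -> List[float]:
    """Proxy score (NOT the paper's ACC): mean attention each token receives,
    averaged over all heads and query positions."""
    n = len(heads[0])
    scores = [0.0] * n
    for attn in heads:
        for row in attn:
            for key, weight in enumerate(row):
                scores[key] += weight
    return [s / (len(heads) * n) for s in scores]


def keep_top_tokens(scores: List[float], keep: int,
                    protect_cls: bool = True) -> List[int]:
    """Indices of the `keep` highest-scoring word vectors, in original order.
    If protect_cls is set, position 0 ([CLS]) is always retained (assumption)."""
    rank = sorted(range(len(scores)),
                  key=lambda i: (not (protect_cls and i == 0), -scores[i]))
    return sorted(rank[:keep])


def prune_layer(hidden: List[List[float]], heads: List[Matrix],
                keep: int) -> Tuple[List[List[float]], List[int]]:
    """Drop the less important word vectors before they enter the next layer."""
    kept = keep_top_tokens(token_importance(heads), keep)
    return [hidden[i] for i in kept], kept


def tokens_per_layer(seq_len: int, num_layers: int,
                     tilt_rate: float) -> List[int]:
    """Hypothetical schedule: each layer passes on a (1 - tilt_rate) fraction
    of the word vectors it received, never fewer than one."""
    counts, n = [], seq_len
    for _ in range(num_layers):
        counts.append(n)
        n = max(1, math.floor(n * (1.0 - tilt_rate)))
    return counts


def estimated_speedup(counts: List[int]) -> float:
    """Speedup over the unpruned model, assuming each layer's cost is
    proportional to the number of word vectors it processes."""
    return counts[0] * len(counts) / sum(counts)


if __name__ == "__main__":
    heads = [[[0.5, 0.3, 0.2], [0.1, 0.8, 0.1], [0.2, 0.7, 0.1]]]
    hidden = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
    print(prune_layer(hidden, heads, keep=2))  # keeps [CLS] and token 1
    counts = tokens_per_layer(seq_len=128, num_layers=12, tilt_rate=0.2)
    print(counts, round(estimated_speedup(counts), 2))
```

Setting `tilt_rate` to 0 keeps every word vector and yields a speedup of exactly 1.0, while larger values remove more word vectors in the higher layers, mirroring the observation that those layers contribute less. The linear cost model ignores the quadratic term of self-attention, so the mathematical speedup analysis described in the abstract may model costs differently.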
