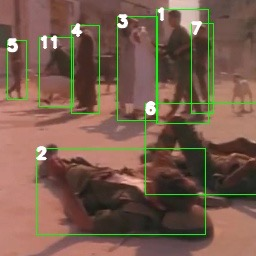

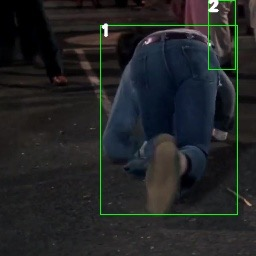

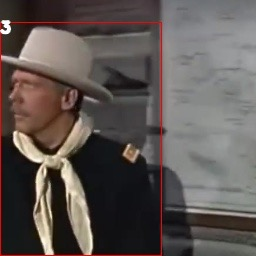

Spatial-temporal action detection is a vital part of video understanding. Most current spatial-temporal action detection methods use an object detector to obtain person candidates and then classify these candidates into different action categories. Such so-called two-stage methods are computationally heavy and hard to deploy in real-world applications. Some existing methods build one-stage pipelines, but the vanilla one-stage pipeline suffers a large performance drop, and extra classification modules are needed to achieve comparable performance. In this paper, we explore a simple and effective pipeline to build a strong one-stage spatial-temporal action detector. The pipeline is composed of two parts. The first is a simple end-to-end spatial-temporal action detector, which makes only minor architectural changes to current proposal-based detectors and adds no extra action classification modules. The second is a novel labeling strategy that utilizes unlabeled frames in sparsely annotated data. We name our model SE-STAD. The proposed SE-STAD achieves an mAP boost of around 2% and a FLOPs reduction of around 80%. Our code will be released at https://github.com/4paradigm-CV/SE-STAD.
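The abstract does not describe how SE-STAD actually labels the unlabeled frames. Purely as a point of reference, the following is a minimal sketch of a generic confidence-threshold pseudo-labeling step for sparsely annotated video. It assumes a detector that outputs person boxes with independent per-class (multi-label) action scores; `Detection`, `pseudo_label_frame`, and the 0.5 threshold are hypothetical names and values, and this is not the paper's labeling strategy.

```python
from dataclasses import dataclass
from typing import List, Tuple

# Hypothetical box format: (x1, y1, x2, y2) in pixels.
Box = Tuple[float, float, float, float]


@dataclass
class Detection:
    """One person detection on an unlabeled frame (hypothetical structure)."""
    box: Box
    # Independent per-class probabilities, e.g. sigmoid outputs,
    # since a person can perform several actions at once.
    action_scores: List[float]


def pseudo_label_frame(
    detections: List[Detection], thresh: float = 0.5
) -> List[Tuple[Box, List[int]]]:
    """Turn detector outputs on an unlabeled frame into training targets.

    Generic confidence thresholding, NOT the SE-STAD method: each box keeps
    every action class whose score is at least `thresh`, and boxes with no
    confident class are discarded.
    """
    targets = []
    for det in detections:
        labels = [c for c, s in enumerate(det.action_scores) if s >= thresh]
        if labels:  # drop boxes without any confident action
            targets.append((det.box, labels))
    return targets


if __name__ == "__main__":
    dets = [
        Detection((0, 0, 10, 20), [0.9, 0.2, 0.6]),
        Detection((5, 5, 15, 25), [0.1, 0.3, 0.4]),
    ]
    print(pseudo_label_frame(dets))  # [((0, 0, 10, 20), [0, 2])]
```

A higher threshold trades pseudo-label coverage for precision; the right value, and whether thresholding is appropriate at all, depends on the detector and dataset.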

翻译:空间时空动作探测是视频理解的一个重要部分。 当前的空间时空动作探测方法大多使用物体探测器来获取个人候选人,并将这些人候选人分为不同的行动类别。 所谓的两阶段方法在现实应用中是繁重的, 很难应用。 有些现有方法建立一阶段管道, 但香草一阶段管道存在大量性能下降, 需要额外的分类模块来实现可比性能。 在本文中, 我们探索一个简单而有效的管道, 以建立一个强大的一阶段空间时空动作探测器。 管道由两个部分组成 : 一个是简单的终端到终端空间时空动作探测器。 提议的端到端探测器对当前基于提案的探测器有轻微的结构变化, 不添加额外的行动分类模块。 另一部分是使用无标签框架的新型标签战略, 以在稀释的附加说明的数据中使用。 我们将我们的模型命名为 SE-STAD。 拟议的 SE-STAD 实现大约2% mAP 的加速度和大约80% FLOP 。 我们的代码将在 https://gius/ SEPAD.