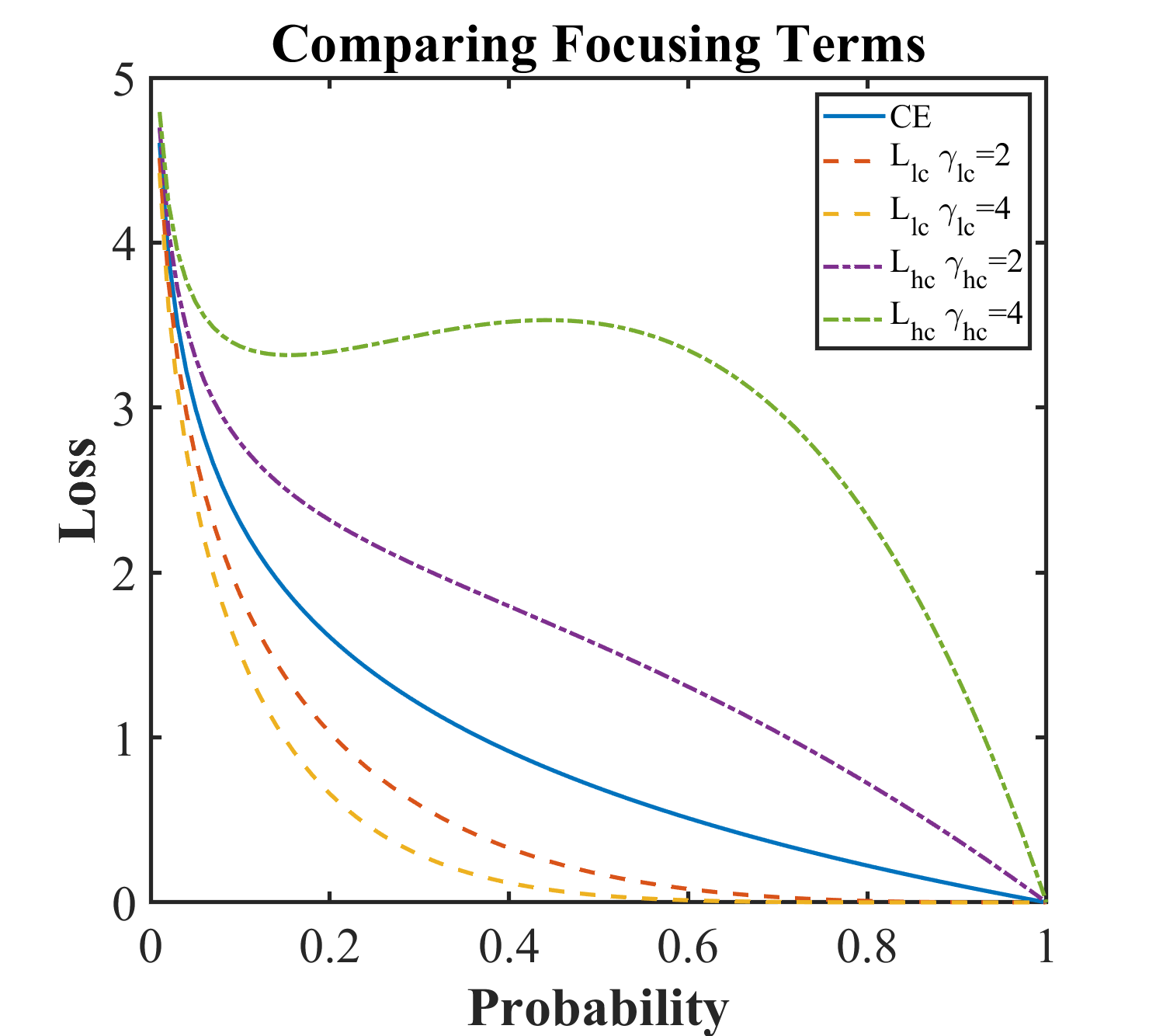

The cross-entropy softmax loss is the primary loss function used to train deep neural networks. The focal loss, in contrast, has been shown to improve performance when the number of training samples per class is imbalanced, as in long-tailed datasets. In this paper, we introduce a novel cyclical focal loss and demonstrate that it is a more universal loss function than either cross-entropy softmax loss or focal loss. We describe the intuition behind the cyclical focal loss, and our experiments provide evidence that it gives superior performance on balanced, imbalanced, and long-tailed datasets. We report experimental results for CIFAR-10/CIFAR-100, ImageNet, balanced and imbalanced 4,000-training-sample versions of CIFAR-10/CIFAR-100, and ImageNet-LT and Places-LT from the Open Long-Tailed Recognition (OLTR) challenge. Implementing the cyclical focal loss function requires only a few lines of code and does not increase training time. In the spirit of reproducibility, our code is available at https://github.com/lnsmith54/CFL.
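For readers unfamiliar with the two baseline losses named above, the following is a minimal sketch in plain Python of the softmax cross-entropy loss and the standard focal loss, $-(1 - p_t)^{\gamma} \log p_t$, for a single sample. The function names and the default `gamma=2.0` are illustrative assumptions, not taken from the authors' repository. The cyclical focal loss itself is not reproduced here, because the abstract does not define its schedule.

```python
import math


def log_softmax(logits):
    """Numerically stable log-softmax over a list of raw scores."""
    m = max(logits)
    log_total = m + math.log(sum(math.exp(z - m) for z in logits))
    return [z - log_total for z in logits]


def cross_entropy(logits, target):
    """Softmax cross-entropy for one sample: -log p_t."""
    return -log_softmax(logits)[target]


def focal_loss(logits, target, gamma=2.0):
    """Standard focal loss for one sample: -(1 - p_t)**gamma * log p_t.

    gamma is an illustrative default (assumption); gamma = 0 recovers
    plain cross-entropy.
    """
    log_p_t = log_softmax(logits)[target]
    p_t = math.exp(log_p_t)
    return -((1.0 - p_t) ** gamma) * log_p_t


# A confidently correct sample versus a misclassified one, both with target class 0.
easy_logits = [5.0, 0.0, 0.0]
hard_logits = [0.0, 1.0, 0.0]

for name, logits in [("easy", easy_logits), ("hard", hard_logits)]:
    ce = cross_entropy(logits, 0)
    fl = focal_loss(logits, 0)
    print(f"{name}: cross-entropy={ce:.4f}, focal={fl:.4f}, ratio={fl / ce:.4f}")
```

The printed ratio shows the behaviour that motivates focal loss on imbalanced data: the modulating factor shrinks the loss of well-classified samples far more than that of hard ones, so training focuses on the latter.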
