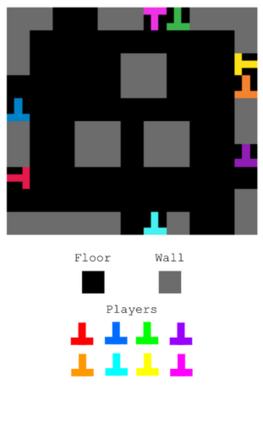

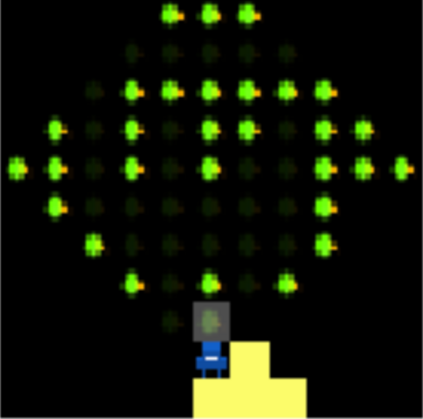

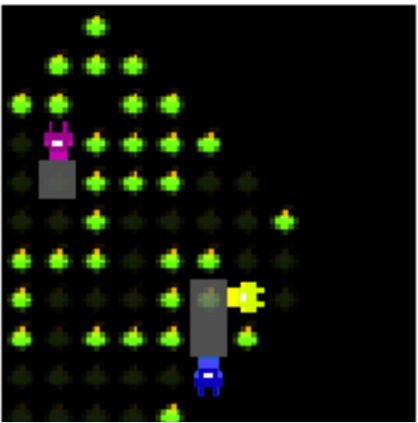

Generalization is a major challenge for multi-agent reinforcement learning. How well does an agent perform when placed in novel environments and in interactions with new co-players? In this paper, we investigate and quantify the relationship between generalization and diversity in the multi-agent domain. Across the range of multi-agent environments considered here, procedurally generating training levels significantly improves agent performance on held-out levels. However, agent performance on the specific levels used in training sometimes declines as a result. To better understand the effects of co-player variation, our experiments introduce a new environment-agnostic measure of behavioral diversity. Results demonstrate that population size and intrinsic motivation are both effective methods of generating greater population diversity. In turn, training with a diverse set of co-players strengthens agent performance in some (but not all) cases.
