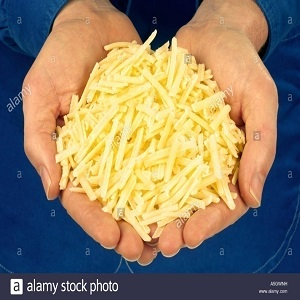

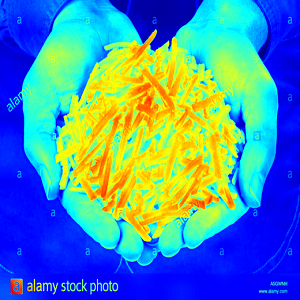

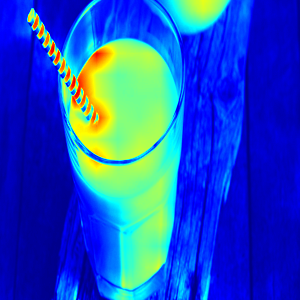

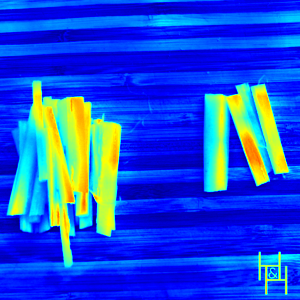

To ensure proper knowledge representation of the kitchen environment, it is vital for kitchen robots to recognize the states of the food items that are being cooked. Although the domain of object detection and recognition has been extensively studied, the task of object state classification has remained relatively unexplored. The high intra-class similarity of ingredients during different states of cooking makes the task even more challenging. Researchers have recently proposed Deep Learning based strategies; however, these have yet to achieve high performance. In this study, we utilized the self-attention mechanism of the Vision Transformer (ViT) architecture for the Cooking State Recognition task. The proposed approach captures the globally salient features of images while also exploiting the weights learned from a larger dataset. This global attention allows the model to withstand the similarities between samples of different cooking objects, while transfer learning helps to overcome the lack of inductive bias by utilizing pretrained weights. To improve recognition accuracy, several augmentation techniques have been employed as well. Evaluated on the "Cooking State Recognition Challenge Dataset", our proposed framework achieved an accuracy of 94.3%, which significantly outperforms the state-of-the-art.
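To make the notion of global attention concrete, the following is a minimal NumPy sketch of single-head self-attention over image patches. It is not the authors' implementation: the image size, patch size, embedding dimension, and the helper names `patchify` and `self_attention` are illustrative assumptions, the projection weights are random rather than pretrained, and the class token, positional embeddings, and multi-head structure of a full ViT are omitted.

```python
import numpy as np


def patchify(image, patch):
    """Split an (H, W, C) image into flattened, non-overlapping patches."""
    h, w, c = image.shape
    assert h % patch == 0 and w % patch == 0, "image must tile evenly"
    rows, cols = h // patch, w // patch
    x = image.reshape(rows, patch, cols, patch, c)
    x = x.transpose(0, 2, 1, 3, 4)  # (rows, cols, patch, patch, C)
    return x.reshape(rows * cols, patch * patch * c)


def softmax(x, axis=-1):
    """Numerically stable softmax along the given axis."""
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def self_attention(tokens, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention over all tokens."""
    q, k, v = tokens @ w_q, tokens @ w_k, tokens @ w_v
    scores = q @ k.T / np.sqrt(k.shape[-1])
    weights = softmax(scores, axis=-1)  # each row: one patch attending to all patches
    return weights @ v, weights


# Toy stand-in for a food image (assumption: 32x32 RGB, 8x8 patches).
rng = np.random.default_rng(0)
image = rng.random((32, 32, 3))
tokens = patchify(image, patch=8)  # 16 patches, each of length 8*8*3

# Random projections (assumption); the paper instead starts from pretrained weights.
dim = 16
w_q, w_k, w_v = (
    rng.normal(scale=0.1, size=(tokens.shape[1], dim)) for _ in range(3)
)
out, weights = self_attention(tokens, w_q, w_k, w_v)
```

Every row of `weights` spans all patches, so each patch representation in `out` can draw on the entire image rather than a local neighborhood, which is the property the abstract refers to as global attention.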
