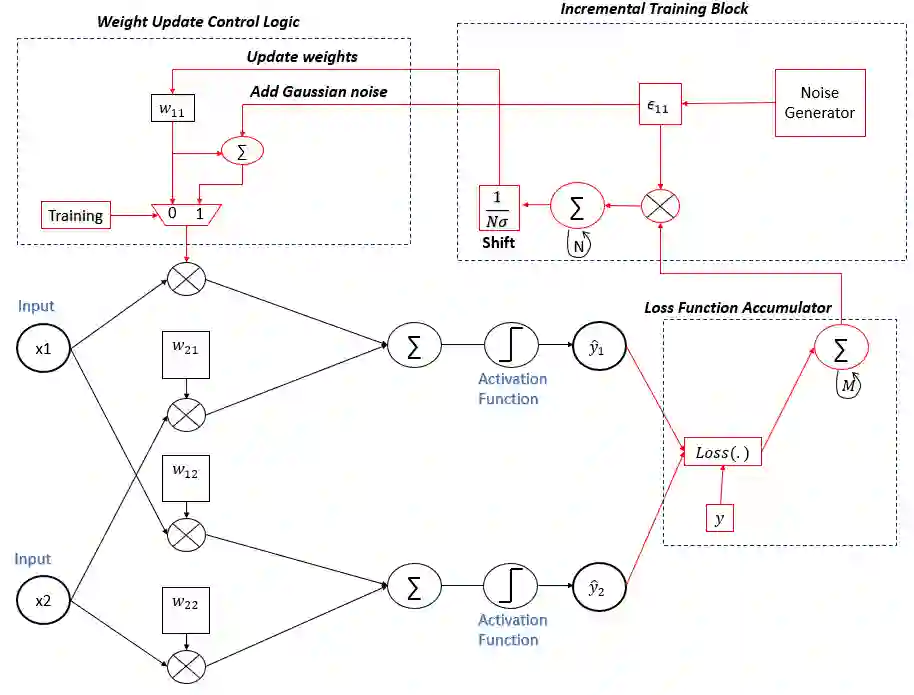

Deep Neural Networks (DNNs) are commonly deployed on end devices that operate in constantly changing environments. For the system to maintain its accuracy, it must be able to adapt to changes and recover by retraining parts of the network. However, end devices have limited resources, making it challenging to train on the device itself. Moreover, training deep neural networks is both memory and compute intensive because of the backpropagation algorithm. In this paper we introduce a method based on an evolutionary strategy (ES) that partially retrains the network, enabling it to adapt to changes and recover after an error has occurred. This technique enables training on inference-only hardware without backpropagation and with minimal resource overhead. We demonstrate the ability of our technique to retrain a quantized MNIST neural network after injecting noise into the input. Furthermore, we present the micro-architecture required to enable training on HLS4ML (an inference hardware architecture) and implement it in Verilog. We synthesize our implementation for a Xilinx Kintex Ultrascale Field Programmable Gate Array (FPGA); the incremental training logic requires less than 1% of the device's resources.
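To make the idea of backpropagation-free partial retraining concrete, the following is a minimal sketch under stated assumptions, not the method as implemented in the paper. It assumes an elitist (1+λ) evolution strategy, a single unquantized linear layer standing in for the retrained part of the network, synthetic data in place of MNIST, and classification accuracy on a small labelled set as the fitness. The names `accuracy`, `es_retrain`, and all hyperparameters are hypothetical.

```python
import numpy as np


def accuracy(weights, inputs, labels):
    """Inference-only fitness: fraction of samples classified correctly."""
    predictions = np.argmax(inputs @ weights, axis=1)
    return float(np.mean(predictions == labels))


def es_retrain(weights, inputs, labels, generations=100, population=8,
               sigma=0.05, seed=0):
    """Elitist (1+lambda) evolution strategy over one layer's weights.

    Each generation mutates the parent with Gaussian noise, scores every
    child with a forward pass, and keeps the best child only if it is at
    least as accurate as the parent. No gradients are computed.
    """
    rng = np.random.default_rng(seed)
    parent = weights.copy()
    parent_fit = accuracy(parent, inputs, labels)
    for _ in range(generations):
        noise = rng.standard_normal((population,) + parent.shape)
        children = parent + sigma * noise
        fits = [accuracy(child, inputs, labels) for child in children]
        best = int(np.argmax(fits))
        if fits[best] >= parent_fit:
            parent, parent_fit = children[best], fits[best]
    return parent, parent_fit


# Synthetic stand-in for a trained layer and its data (assumption, not MNIST).
rng = np.random.default_rng(42)
num_classes, num_features, num_samples = 3, 16, 300
labels = rng.integers(0, num_classes, size=num_samples)
prototypes = rng.standard_normal((num_classes, num_features))
clean_inputs = prototypes[labels] + 0.1 * rng.standard_normal(
    (num_samples, num_features))
frozen_weights = prototypes.T.copy()

# Simulated environment change: a fixed offset plus random noise on the input.
noisy_inputs = (clean_inputs
                + rng.standard_normal(num_features)
                + 0.3 * rng.standard_normal((num_samples, num_features)))

acc_before = accuracy(frozen_weights, noisy_inputs, labels)
new_weights, acc_after = es_retrain(frozen_weights, noisy_inputs, labels)
print(f"accuracy before: {acc_before:.3f}, after ES retraining: {acc_after:.3f}")
```

Because the loop only needs forward passes and a comparison, it maps naturally onto hardware that already performs inference; the elitist acceptance rule also guarantees that the accuracy on the fitness set never decreases between generations.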
