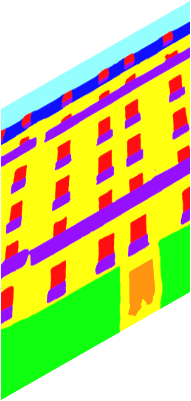

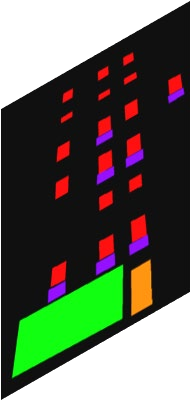

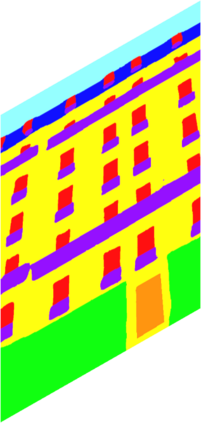

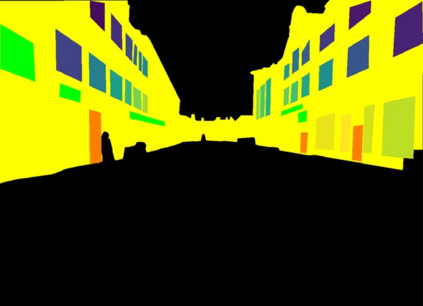

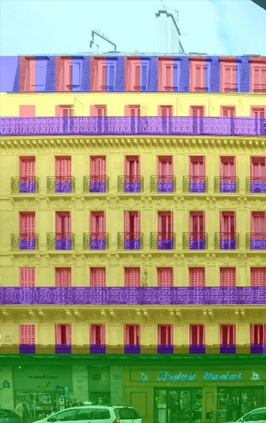

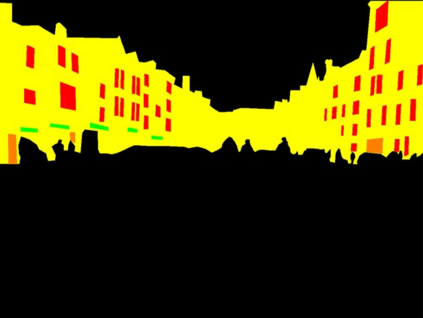

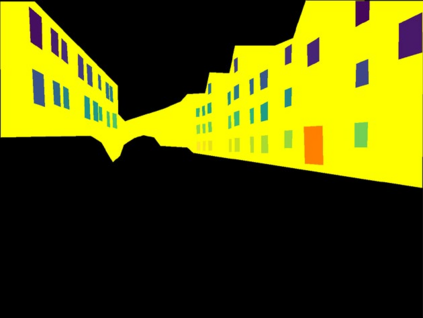

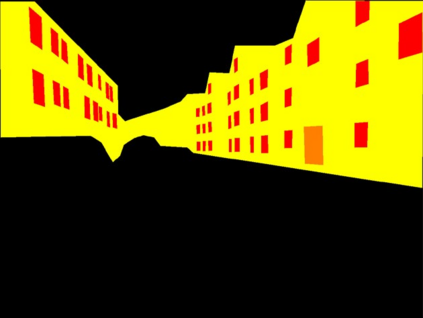

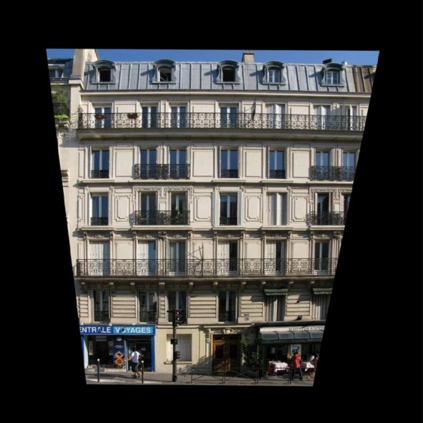

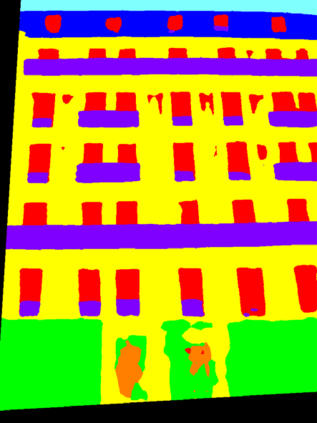

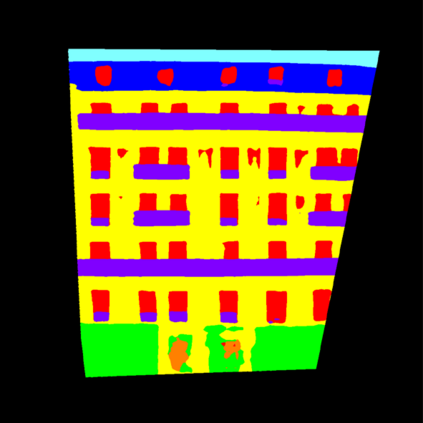

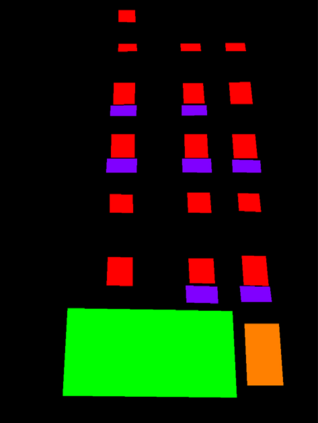

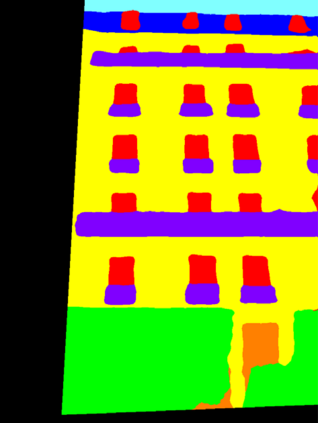

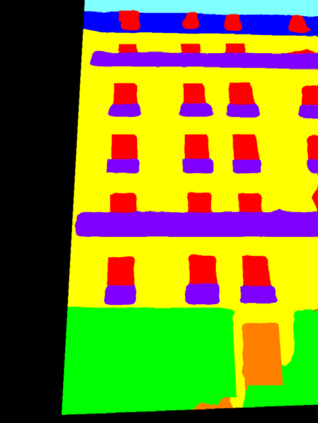

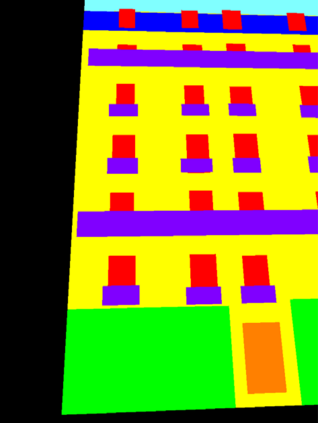

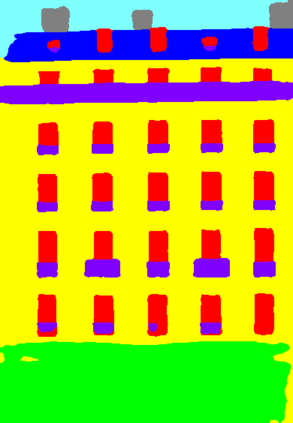

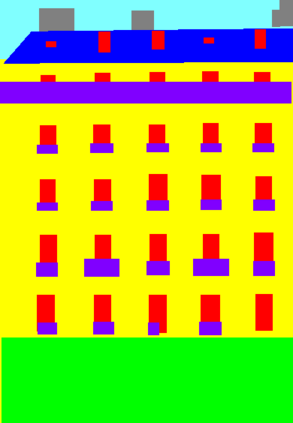

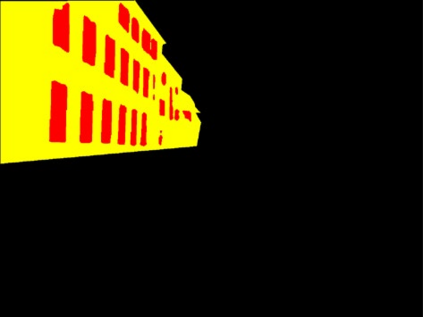

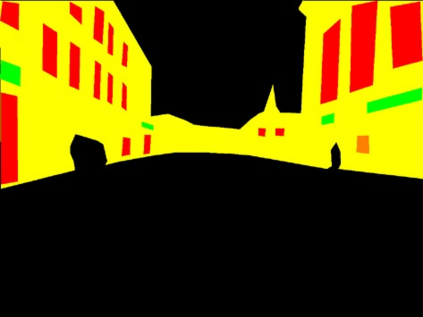

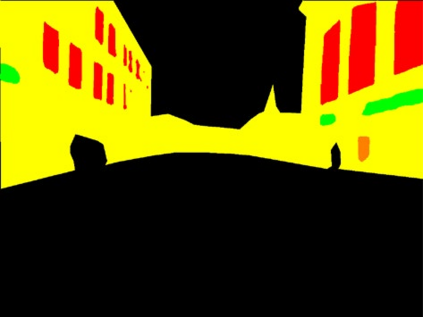

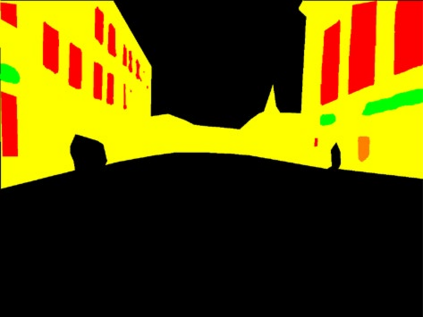

Building facade parsing, which predicts pixel-level labels for building facades, has applications in computer vision perception for autonomous vehicle (AV) driving. However, instead of a frontal view, an on-board camera of an AV captures a deformed view of the facade of the buildings on both sides of the road the AV is travelling on, due to the camera perspective. We propose Facade R-CNN, which includes a transconv module, generalized bounding box detection, and convex regularization, to perform parsing of deformed facade views. Experiments demonstrate that Facade R-CNN achieves better performance than the current state-of-the-art facade parsing models, which are primarily developed for frontal views. We also publish a new building facade parsing dataset derived from the Oxford RobotCar dataset, which we call the Oxford RobotCar Facade dataset. This dataset contains 500 street-view images from the Oxford RobotCar dataset augmented with accurate annotations of building facade objects. The published dataset is available at https://github.com/sijieaaa/Oxford-RobotCar-Facade

翻译:建筑外观分析预测建筑外观的像素级标签,在自动驾驶汽车(AV)的计算机视觉感知中具有应用。然而,一个AV的机上照相机不是前视镜,而是前视镜,拍摄了AV所行道路两侧建筑物外观的变形图象,这是从摄像角度出发的。我们建议Facade R-CNN, 包括一个转接器模块、通用捆绑盒探测和 convex 正规化, 以进行变形外观的分解。实验显示,R-CNN的外观效果优于当前最先进的外观模型,主要为前观而开发。我们还出版了一个新的建筑外观,从牛津机器人汽车数据集(我们称之为牛津机器人卡萨达数据集)中衍生出来的数据集。这个数据集包含500个来自牛津机器人卡萨数据集的街头视图图像,并配有建筑外观物体的准确说明。公布的数据集可在 https://Carbos/Fabas/Faxford上查阅。