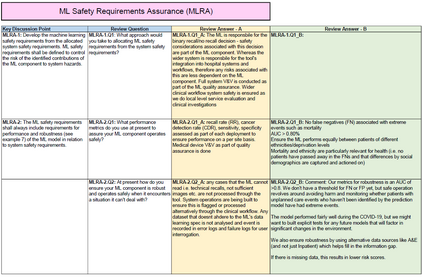

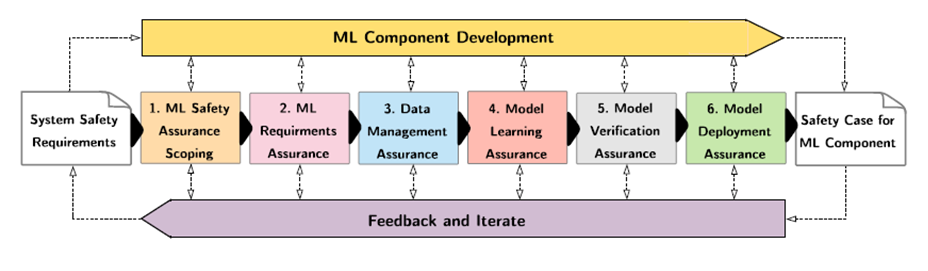

In recent years, the number of machine learning (ML) technologies gaining regulatory approval for healthcare, and so able to be placed on the market, has increased significantly. However, the regulatory frameworks applied to them were originally devised for traditional software, whose behaviour is largely rule-based, in contrast to the data-driven, learnt behaviour of ML. While these frameworks are being reformed, there is a need to proactively assure the safety of ML so that patient safety is not compromised. The Assurance of Machine Learning for use in Autonomous Systems (AMLAS) methodology was developed by the Assuring Autonomy International Programme based on well-established concepts in system safety. This review appraises the methodology by consulting ML manufacturers to understand whether it converges with or diverges from their current safety assurance practices, whether there are gaps and limitations in its structure, and whether it is fit for purpose when applied to the healthcare domain. Through this work we offer the view that AMLAS has clear utility as a safety assurance methodology for healthcare ML technologies, although healthcare-specific supplementary guidance would benefit those implementing the methodology.
