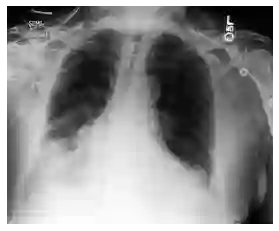

Automated diagnosis prediction from medical images is a valuable resource to support clinical decision-making. However, such systems usually need to be trained on large amounts of annotated data, which is often scarce in the medical domain. Zero-shot methods address this challenge by allowing flexible adaptation to new settings with different clinical findings without relying on labeled data. Further, to integrate automated diagnosis into the clinical workflow, methods should be transparent and explainable, increasing medical professionals' trust and facilitating correctness verification. In this work, we introduce Xplainer, a novel framework for explainable zero-shot diagnosis in the clinical setting. Xplainer adapts the classification-by-description approach of contrastive vision-language models to the multi-label medical diagnosis task. Specifically, instead of directly predicting a diagnosis, we prompt the model to classify the presence of descriptive observations that a radiologist would look for on an X-ray scan, and use the descriptor probabilities to estimate the likelihood of a diagnosis. Our model is explainable by design, as the final diagnosis prediction is directly based on the prediction of the underlying descriptors. We evaluate Xplainer on two chest X-ray datasets, CheXpert and ChestX-ray14, and demonstrate its effectiveness in improving the performance and explainability of zero-shot diagnosis. Our results suggest that Xplainer provides a more detailed understanding of the decision-making process and can be a valuable tool for clinical diagnosis.
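The classification-by-description idea can be illustrated with a minimal sketch. The abstract does not specify how descriptor probabilities are computed or combined, so everything below is an assumption made for illustration: each descriptor is scored by a softmax over the cosine similarities between an image embedding and a positive and a negative prompt embedding, and the diagnosis score is taken as the geometric mean of its descriptor probabilities. The function names, the `temperature` value, and the placeholder descriptor names are hypothetical and are not taken from the Xplainer implementation.

```python
import numpy as np


def descriptor_probability(image_emb, pos_emb, neg_emb, temperature=0.07):
    """Probability that a descriptor is present (assumed scoring rule).

    Softmax over the cosine similarities of the image embedding to a
    positive prompt embedding and a negative prompt embedding.
    """
    def cosine(a, b):
        return float(np.dot(a, b) / (np.linalg.norm(a) * np.linalg.norm(b)))

    logits = np.array([cosine(image_emb, pos_emb),
                       cosine(image_emb, neg_emb)]) / temperature
    logits = logits - logits.max()  # subtract the max for numerical stability
    probs = np.exp(logits) / np.exp(logits).sum()
    return float(probs[0])


def diagnosis_probability(descriptor_probs):
    """Aggregate descriptor probabilities into one diagnosis score.

    Assumption: geometric mean, computed as exp(mean(log p)).
    """
    p = np.clip(np.asarray(descriptor_probs, dtype=float), 1e-12, 1.0)
    return float(np.exp(np.log(p).mean()))


def explain(descriptor_probs_by_name):
    """Return the diagnosis score and the descriptors ranked by probability."""
    ranked = sorted(descriptor_probs_by_name.items(),
                    key=lambda item: item[1], reverse=True)
    score = diagnosis_probability([prob for _, prob in ranked])
    return score, ranked


if __name__ == "__main__":
    # Made-up probabilities for placeholder descriptors of one diagnosis.
    score, ranked = explain({"descriptor_a": 0.9, "descriptor_b": 0.4})
    print(f"diagnosis score: {score:.3f}")
    for name, prob in ranked:
        print(f"  {name}: {prob:.2f}")
```

Because the diagnosis score is a direct function of the per-descriptor probabilities, the ranked list returned by `explain` doubles as the explanation: a reader can see which observations pushed the score up or down. In a real setting the embeddings would come from a contrastive vision-language model, which this sketch deliberately leaves out.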
