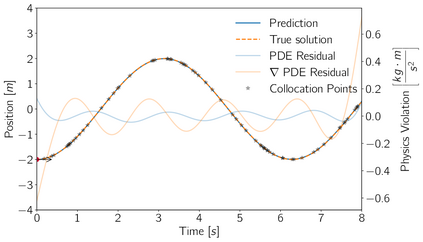

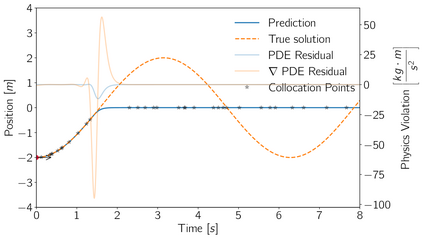

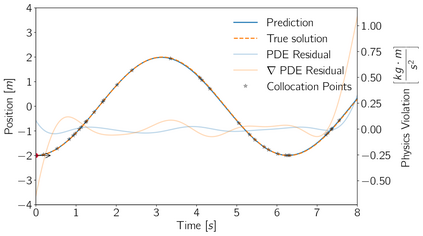

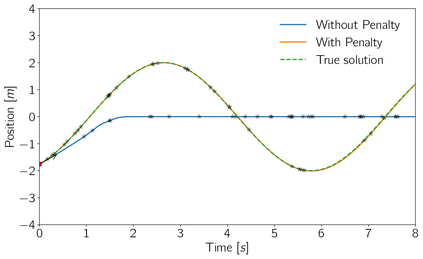

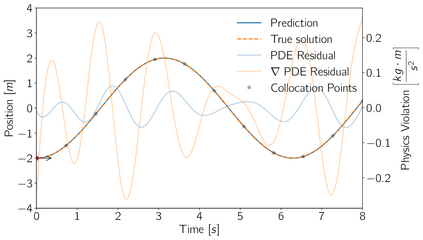

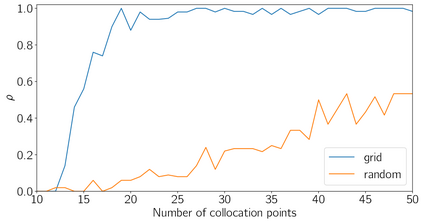

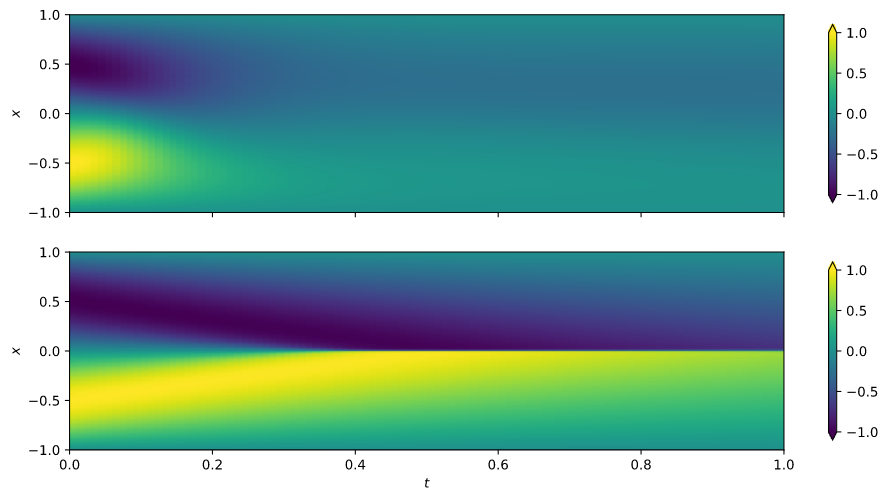

The advent of scientific machine learning (SciML) has opened up a new field in simulation science, with many promises and challenges, by developing approaches at the interface of physics-based and data-based modelling. To this end, physics-informed neural networks (PINNs) have been introduced in recent years; they compensate for the scarcity of training data by incorporating physics knowledge of the problem at so-called collocation points. In this work, we investigate the prediction performance of PINNs with respect to the number of collocation points used to enforce the physics-based penalty terms. We show that PINNs can fail by learning a trivial solution that fulfills the physics-derived penalty term by definition. We have developed an alternative sampling approach and a new penalty term that remedy this core problem of PINNs in data-scarce settings, achieving competitive results while reducing the number of collocation points needed by up to 80% for benchmark problems.
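
The failure mode described above can be made concrete with a minimal sketch. It assumes a hypothetical 1D homogeneous ODE u'' + k²u = 0 on [0, π] with k = 1 (not one of the paper's benchmarks), represents candidate solutions as plain NumPy functions rather than trained networks, and approximates derivatives with central finite differences instead of automatic differentiation. The loss shown is the standard PINN form (data misfit plus a weighted mean squared physics residual at collocation points), not the authors' new penalty term or sampling approach.

```python
import numpy as np

# Hypothetical test problem (an assumption, not from the paper):
#   u''(x) + K**2 * u(x) = 0 on [0, pi]; for K = 1 the exact solution is u(x) = sin(x).
K = 1.0
H = 1e-3  # step size for the central finite-difference approximation of u''


def residual(u, x):
    """Physics residual r(x) = u''(x) + K^2 u(x), with u'' from central differences."""
    u_xx = (u(x + H) - 2.0 * u(x) + u(x - H)) / H**2
    return u_xx + K**2 * u(x)


def pinn_loss(u, x_data, y_data, x_col, lam=1.0):
    """Return (data term, physics term, weighted total) of a PINN-style loss."""
    data_term = np.mean((u(x_data) - y_data) ** 2)      # misfit at the few labelled points
    physics_term = np.mean(residual(u, x_col) ** 2)     # penalty at the collocation points
    return data_term, physics_term, data_term + lam * physics_term


def exact(x):
    return np.sin(x)


def half(x):
    return 0.5 * np.sin(x)


def trivial(x):
    return np.zeros_like(x)


x_col = np.linspace(0.0, np.pi, 50)   # collocation points (uniform grid, an assumption)
x_data = np.array([np.pi / 2])        # a single labelled point: the data-scarce setting
y_data = exact(x_data)

for name, u in [("exact", exact), ("half", half), ("trivial", trivial)]:
    d, p, total = pinn_loss(u, x_data, y_data, x_col)
    print(f"{name:8s} data={d:.3e} physics={p:.3e} total={total:.3e}")
```

Because the equation is linear and homogeneous, every multiple c·sin(x), including the trivial c = 0, drives the physics term to (numerically) zero; only the single data point distinguishes these candidates. With scarce data, the physics penalty therefore cannot rule out the trivial solution on its own.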
