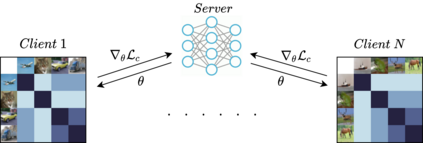

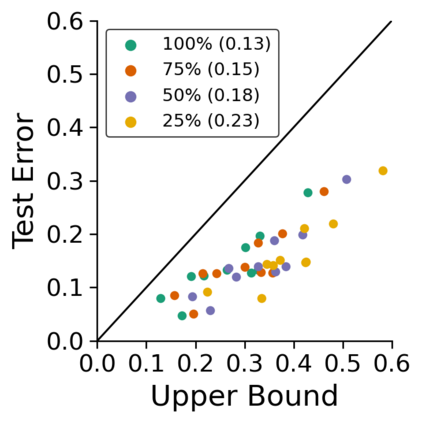

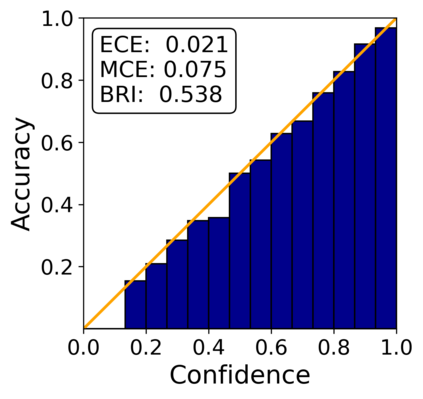

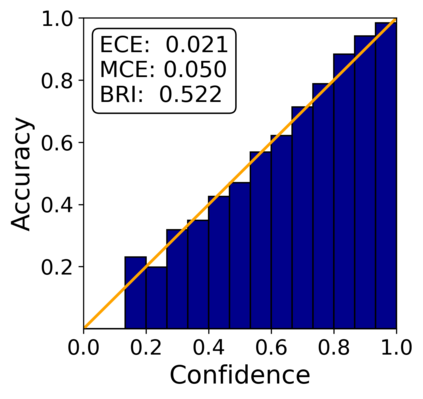

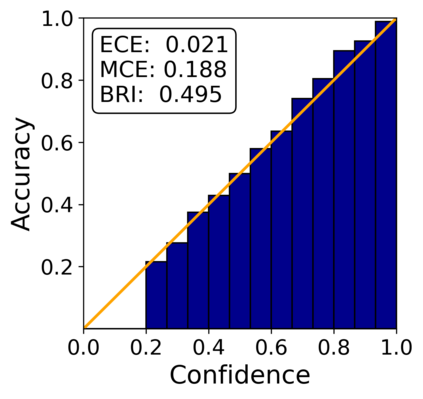

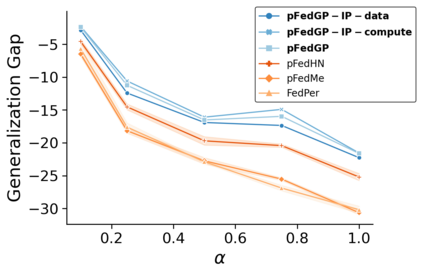

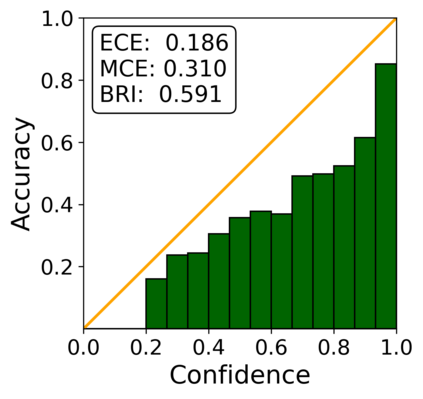

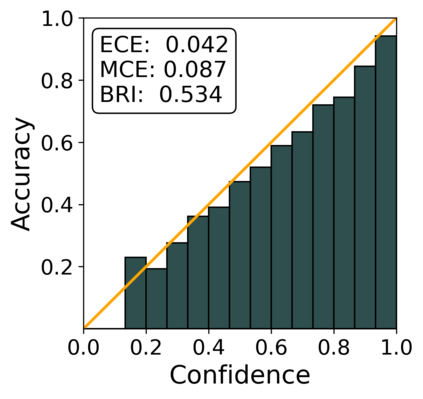

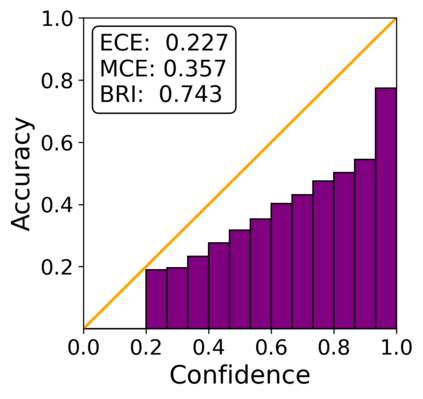

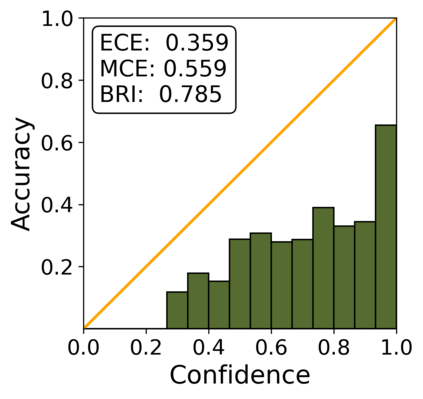

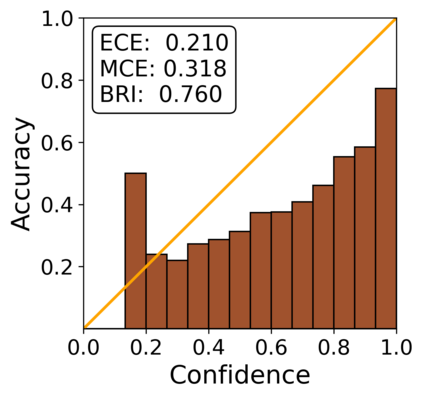

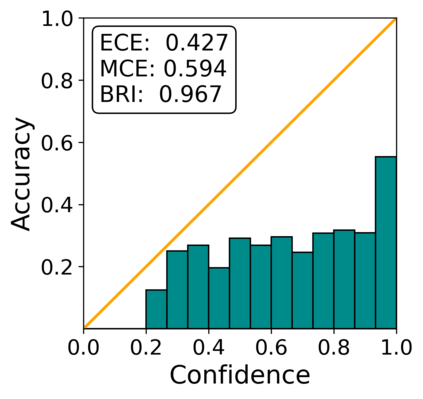

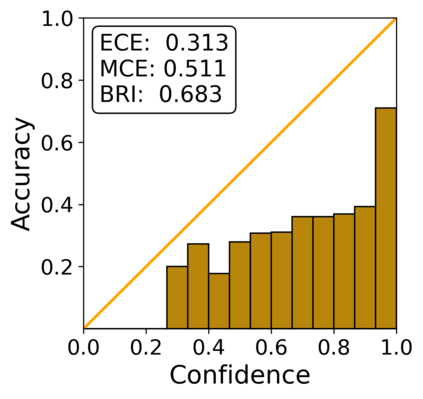

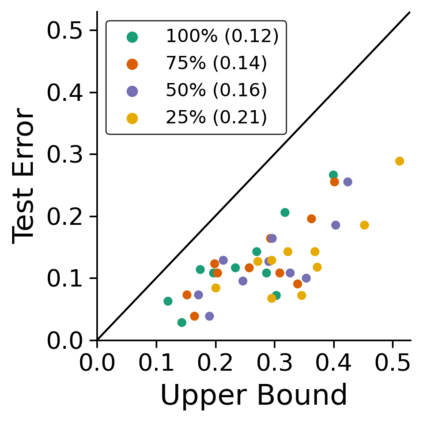

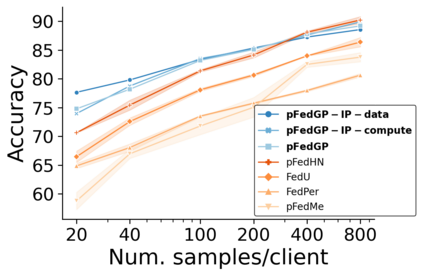

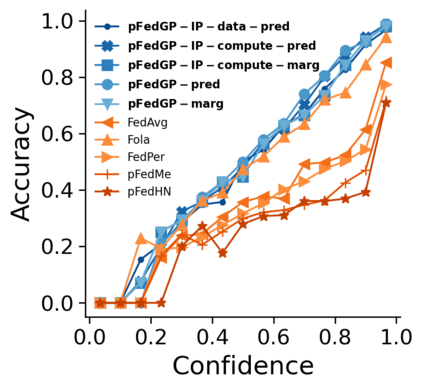

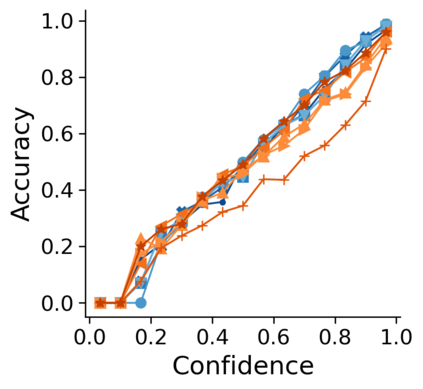

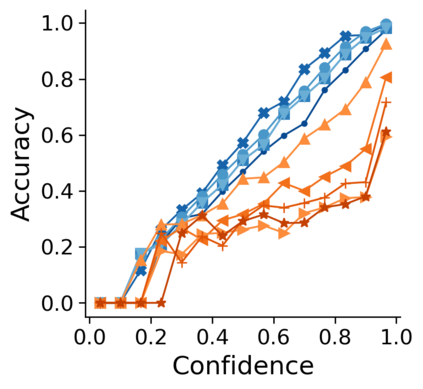

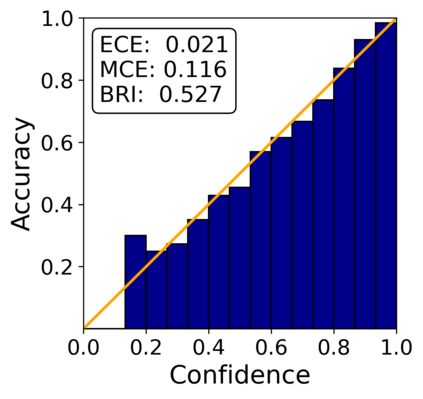

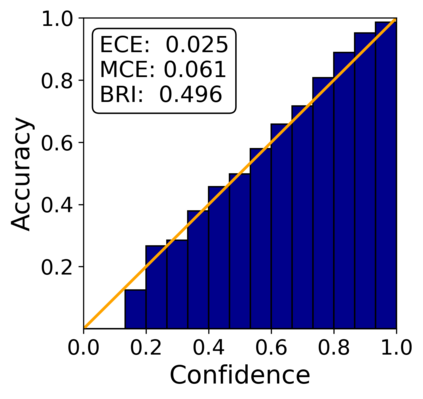

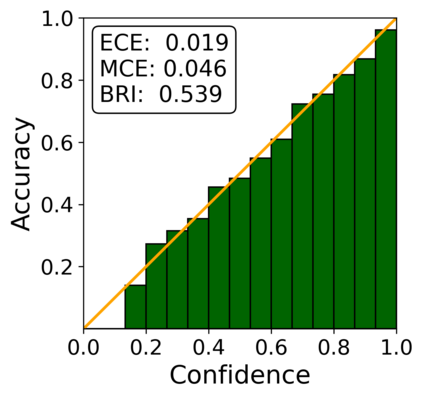

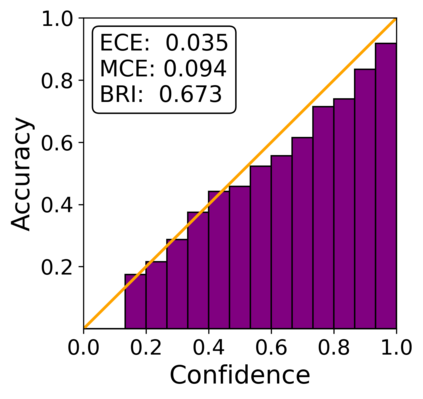

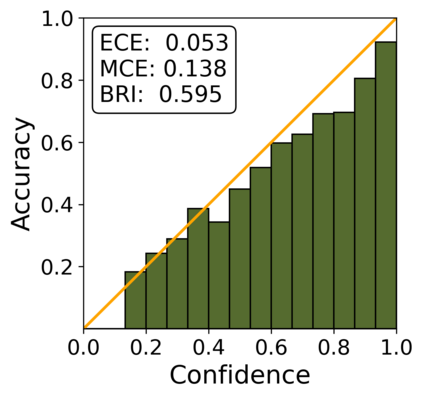

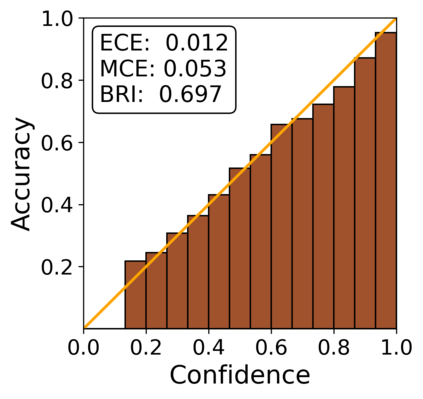

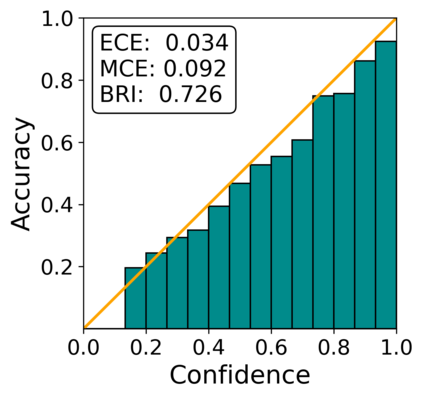

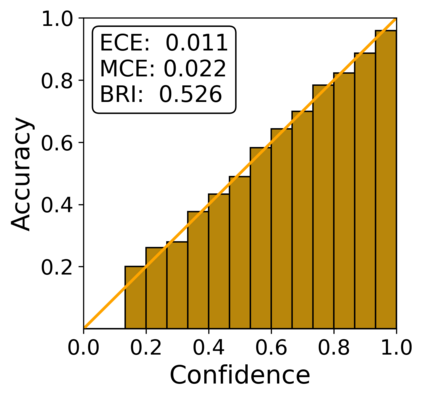

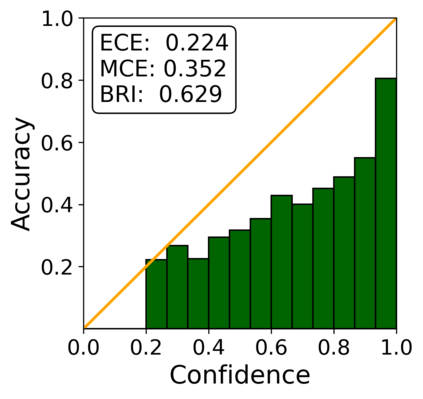

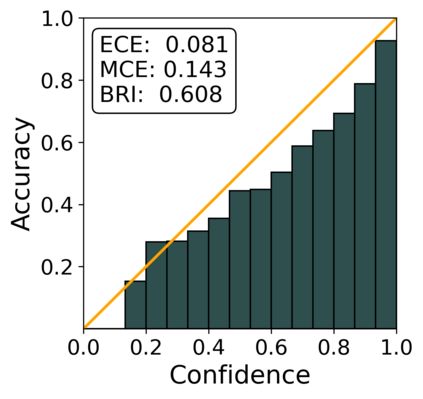

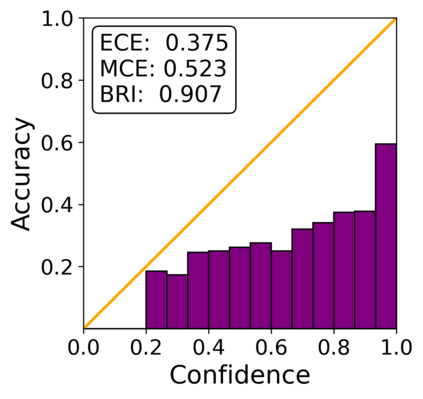

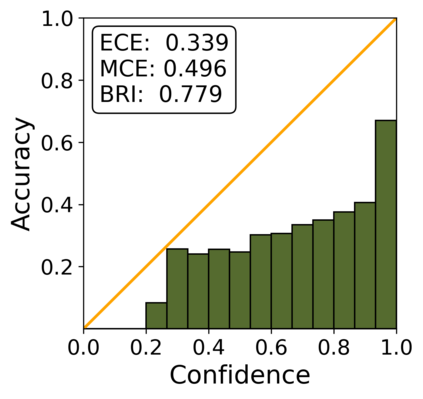

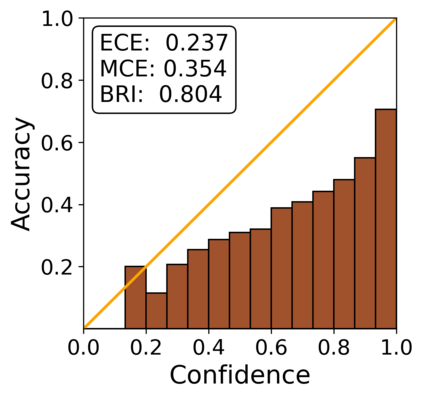

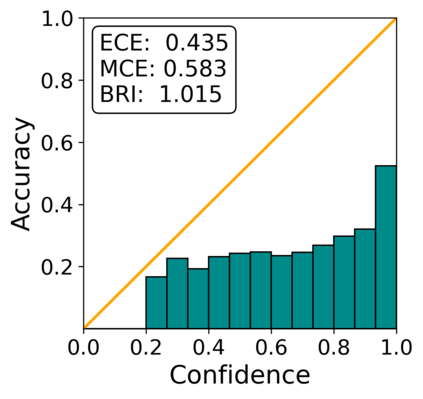

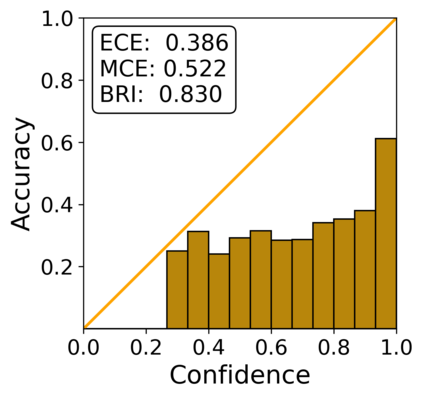

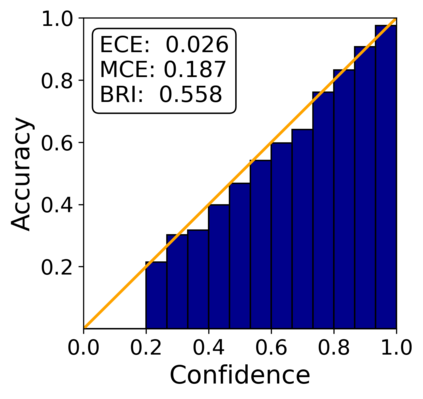

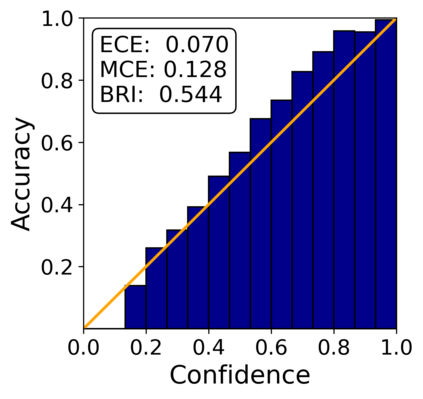

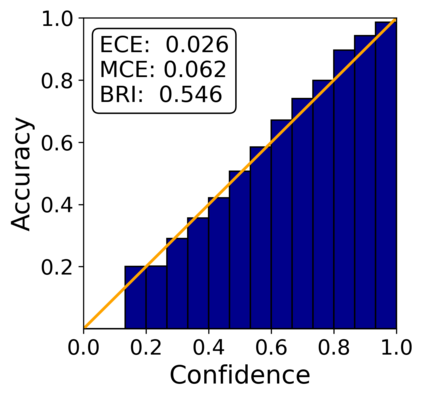

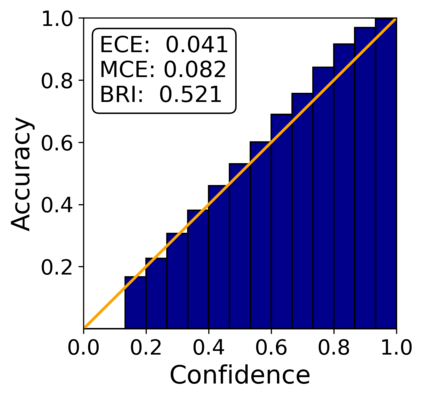

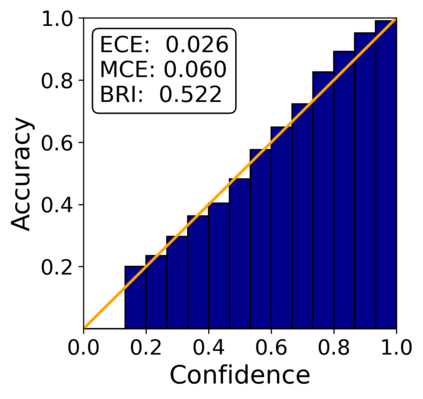

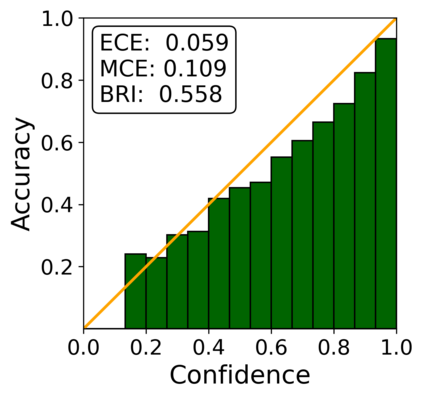

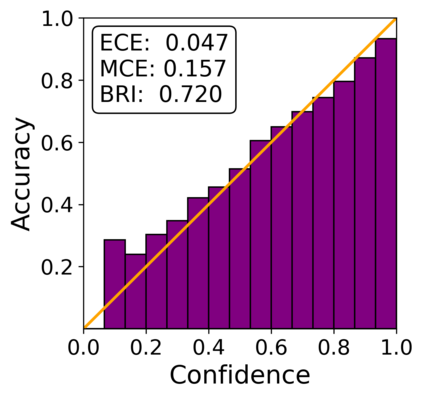

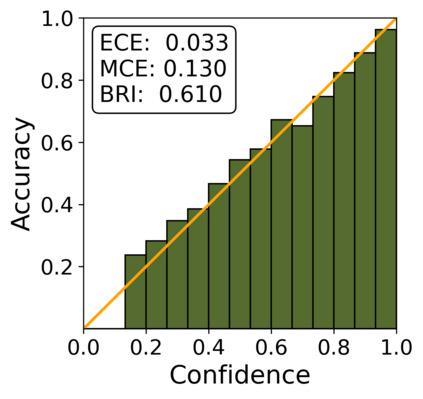

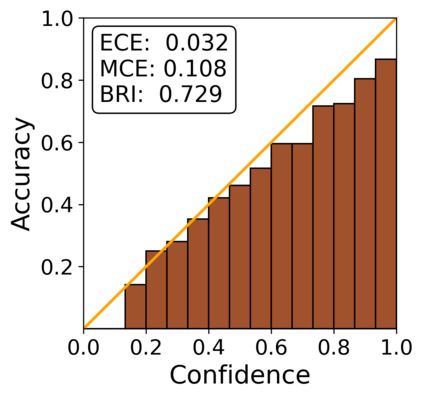

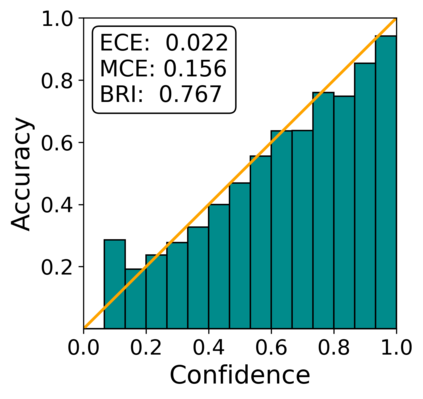

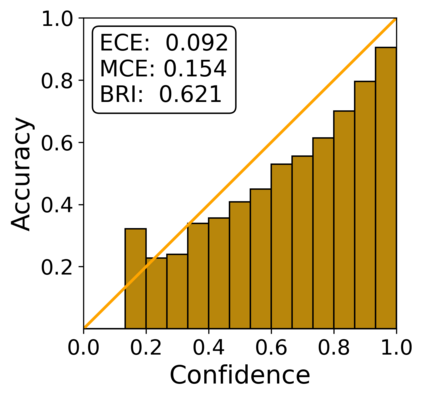

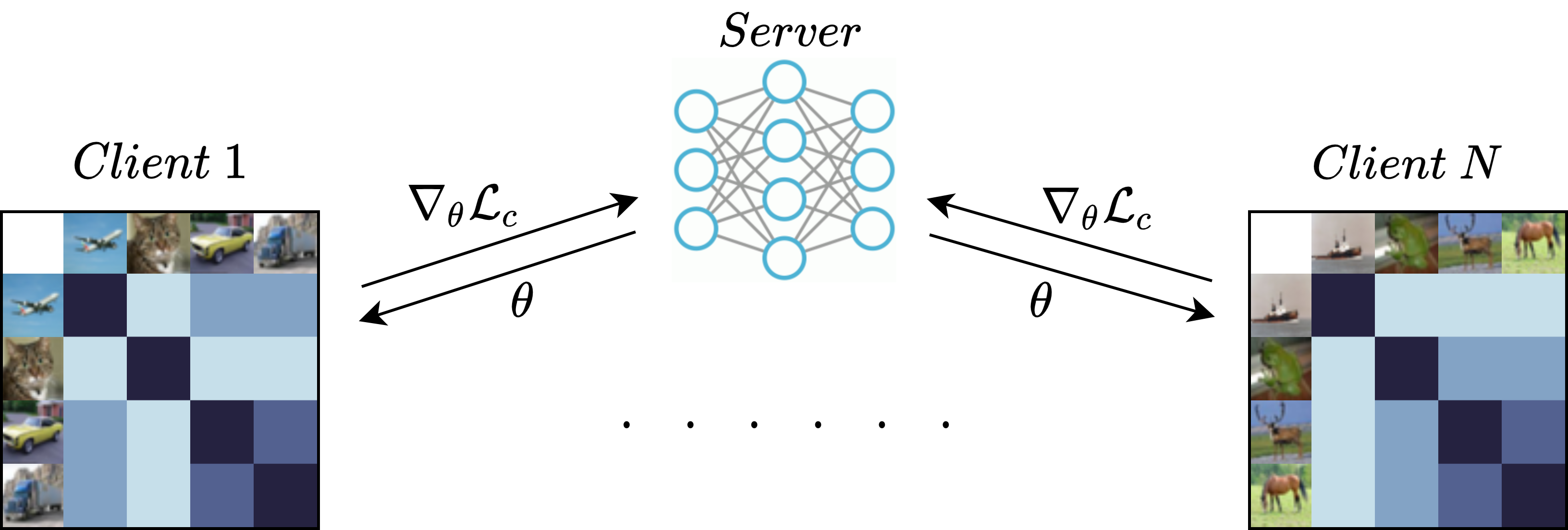

Federated learning aims to learn a global model that performs well on client devices with limited cross-client communication. Personalized federated learning (PFL) extends this setup to handle data heterogeneity between clients by learning personalized models. A key challenge in this setting is to learn effectively across clients even though each client has unique data that is often limited in size. Here we present pFedGP, a solution to PFL that is based on Gaussian processes (GPs) with deep kernel learning. GPs are highly expressive models that work well in the low-data regime due to their Bayesian nature. However, applying GPs to PFL raises multiple challenges. Chief among them, the performance of a GP depends heavily on access to a good kernel function, and learning a kernel requires a large training set. Therefore, we propose learning a kernel function shared across all clients, parameterized by a neural network, with a personal GP classifier for each client. We further extend pFedGP to include inducing points using two novel methods: the first improves generalization in the low-data regime, and the second reduces the computational cost. We derive a PAC-Bayes generalization bound on novel clients and empirically show that it gives non-vacuous guarantees. Extensive experiments on standard PFL benchmarks with CIFAR-10, CIFAR-100, and CINIC-10, and on a new setup of learning under input noise, show that pFedGP achieves well-calibrated predictions while significantly outperforming baseline methods, with accuracy gains of up to 21%.
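To make the shared-kernel idea concrete, the following is a minimal sketch and not the pFedGP implementation. It assumes a small fixed (untrained) embedding network shared by all clients, an RBF kernel on the embeddings, and, as a simplification, GP regression on ±1 labels in place of the GP classifier described above. Federated training of the network and the inducing-point extensions are omitted. All names (`shared_features`, `rbf_kernel`, `client_gp_predict`) and all sizes are hypothetical.

```python
import numpy as np

def shared_features(x, W1, b1, W2, b2):
    """Embedding network whose weights are shared by every client."""
    h = np.tanh(x @ W1 + b1)
    return h @ W2 + b2

def rbf_kernel(a, b, lengthscale=1.0):
    """RBF kernel between the rows of a and the rows of b."""
    sq = np.sum(a**2, 1)[:, None] + np.sum(b**2, 1)[None, :] - 2.0 * a @ b.T
    return np.exp(-0.5 * np.maximum(sq, 0.0) / lengthscale**2)

def client_gp_predict(z_train, y_train, z_test, noise=0.1):
    """Per-client GP posterior mean and variance (regression stand-in).

    `noise` is the observation-noise variance added to the kernel diagonal.
    """
    K = rbf_kernel(z_train, z_train) + noise * np.eye(len(z_train))
    Ks = rbf_kernel(z_test, z_train)
    L = np.linalg.cholesky(K)
    alpha = np.linalg.solve(L.T, np.linalg.solve(L, y_train))
    mean = Ks @ alpha
    v = np.linalg.solve(L, Ks.T)
    var = 1.0 - np.sum(v**2, axis=0)  # prior variance k(x, x) = 1 for RBF
    return mean, var

rng = np.random.default_rng(0)
d_in, d_hid, d_emb = 5, 16, 3  # assumed toy dimensions
params = (rng.normal(size=(d_in, d_hid)) / np.sqrt(d_in), np.zeros(d_hid),
          rng.normal(size=(d_hid, d_emb)) / np.sqrt(d_hid), np.zeros(d_emb))

# Two clients with small, differently labeled datasets share one kernel
# but each fits its own GP on its own data.
for client_id in range(2):
    x_train = rng.normal(size=(8, d_in))
    y_train = np.where(x_train[:, client_id] > 0, 1.0, -1.0)
    x_test = rng.normal(size=(4, d_in))
    mean, var = client_gp_predict(shared_features(x_train, *params), y_train,
                                  shared_features(x_test, *params))
    print(client_id, np.sign(mean), np.round(var, 3))
```

In this sketch only the embedding parameters would need to be communicated between clients and server; each client's GP posterior is computed locally from its own few examples, which is the split between shared and personal components that the abstract describes.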
