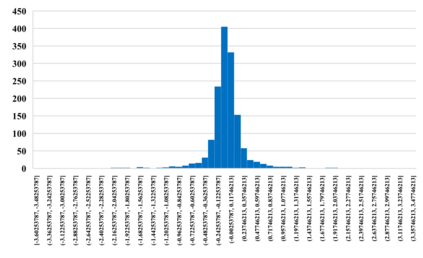

Learned image compression methods have recently developed rapidly and achieve excellent rate-distortion performance compared with traditional standards such as JPEG, JPEG2000, and BPG. However, learning-based methods incur high computational costs, which hinders their deployment on resource-constrained devices. To address this, we propose shift-addition parallel modules (SAPMs), namely SAPM-E for the encoder and SAPM-D for the decoder, which substantially reduce energy consumption. Specifically, they serve as plug-and-play components for upgrading existing CNN-based architectures: the shift branch extracts large-grained features, while the addition branch learns small-grained features. Furthermore, we thoroughly analyze the probability distribution of the latent representations and propose Laplace Mixture Likelihoods for more accurate entropy estimation. Experimental results show that the proposed methods achieve performance comparable to, or even better than, their convolutional counterparts on both PSNR and MS-SSIM, with roughly 2x lower energy consumption.
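The abstract does not spell out the layer internals, so the following is only a minimal sketch under stated assumptions, not the authors' implementation. It assumes the shift branch restricts each weight to ±2^p (in the style of shift networks such as DeepShift), so every multiplication becomes an exponent shift, and that the addition branch uses an AdderNet-style filter that replaces the dot product with a negative L1 distance. It also assumes the two branch outputs are summed element-wise. All function names are hypothetical, and 1-D single-channel filters keep the example short.

```python
import math
from typing import List, Sequence, Tuple


def quantize_to_power_of_two(w: float) -> Tuple[int, int]:
    """Round a real weight to sign * 2**p by rounding log2|w| (one simple choice)."""
    if w == 0.0:
        return 0, 0  # a zero weight contributes nothing
    return (1 if w > 0 else -1), round(math.log2(abs(w)))


def shift_conv1d(x: Sequence[float], signs: Sequence[int], powers: Sequence[int]) -> List[float]:
    """Valid 1-D filter whose weights are sign * 2**p.

    math.ldexp(v, p) computes v * 2**p by adjusting the floating-point exponent,
    the float analogue of a bit shift; the sign in {-1, 0, 1} only negates or zeroes.
    """
    if len(signs) != len(powers):
        raise ValueError("signs and powers must have the same length")
    k = len(signs)
    out = []
    for i in range(len(x) - k + 1):
        acc = 0.0
        for j in range(k):
            acc += signs[j] * math.ldexp(x[i + j], powers[j])
        out.append(acc)
    return out


def adder_conv1d(x: Sequence[float], w: Sequence[float]) -> List[float]:
    """Valid 1-D AdderNet-style filter: negative L1 distance between each window and w."""
    k = len(w)
    return [-sum(abs(x[i + j] - w[j]) for j in range(k)) for i in range(len(x) - k + 1)]


def sapm_1d(
    x: Sequence[float],
    shift_signs: Sequence[int],
    shift_powers: Sequence[int],
    adder_weights: Sequence[float],
) -> List[float]:
    """Hypothetical shift-addition parallel block: both branches see the same input
    and their outputs are summed element-wise (the fusion rule is an assumption)."""
    shift_out = shift_conv1d(x, shift_signs, shift_powers)
    adder_out = adder_conv1d(x, adder_weights)
    if len(shift_out) != len(adder_out):
        raise ValueError("both branches must use the same kernel size in this sketch")
    return [s + a for s, a in zip(shift_out, adder_out)]


# Shift weights +2 and -1 (signs [1, -1], powers [1, 0]); adder weights [1, 1].
print(sapm_1d([1.0, 2.0, 3.0, 4.0], [1, -1], [1, 0], [1.0, 1.0]))  # [-1.0, -2.0, -3.0]
```

Neither branch needs a general-purpose multiplier: the shift branch only adjusts exponents and flips signs, and the adder branch only subtracts, takes absolute values, and accumulates. Summation is just one plausible way to fuse the branches; concatenating them along the channel axis would be another.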
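The abstract does not give the exact parametrization of the Laplace Mixture Likelihoods. The sketch below assumes a common construction, borrowed by analogy from Gaussian mixture entropy models. Each integer-quantized latent $\hat y$ receives the probability mass of its quantization bin under a $K$-component mixture, $P(\hat y) = \sum_k w_k\,[F_k(\hat y + 0.5) - F_k(\hat y - 0.5)]$, where $F_k$ is the CDF of a Laplace distribution with location $\mu_k$ and scale $b_k$. The estimated rate is then $-\log_2 P(\hat y)$ bits. In a real codec the mixture parameters would be predicted by a network; here they are passed in directly. The function names and the `1e-9` likelihood floor are hypothetical choices.

```python
import math
from typing import Sequence


def laplace_cdf(x: float, mu: float, b: float) -> float:
    """CDF of a Laplace distribution with location mu and scale b > 0."""
    if b <= 0:
        raise ValueError("scale b must be positive")
    z = (x - mu) / b
    if z < 0:
        return 0.5 * math.exp(z)
    return 1.0 - 0.5 * math.exp(-z)


def laplace_mixture_likelihood(
    y_hat: float,
    weights: Sequence[float],
    mus: Sequence[float],
    scales: Sequence[float],
    min_likelihood: float = 1e-9,  # hypothetical floor so -log2 stays finite
) -> float:
    """Probability mass of the bin [y_hat - 0.5, y_hat + 0.5] under a Laplace mixture."""
    if not (len(weights) == len(mus) == len(scales)):
        raise ValueError("weights, mus and scales must have the same length")
    if abs(sum(weights) - 1.0) > 1e-6:
        raise ValueError("mixture weights must sum to 1")
    p = 0.0
    for w, mu, b in zip(weights, mus, scales):
        p += w * (laplace_cdf(y_hat + 0.5, mu, b) - laplace_cdf(y_hat - 0.5, mu, b))
    return max(p, min_likelihood)


def estimated_bits(
    latents: Sequence[float],
    weights: Sequence[Sequence[float]],
    mus: Sequence[Sequence[float]],
    scales: Sequence[Sequence[float]],
) -> float:
    """Total estimated rate in bits: the sum of -log2 P(y_hat) over all latents."""
    return sum(
        -math.log2(laplace_mixture_likelihood(y, w, m, s))
        for y, w, m, s in zip(latents, weights, mus, scales)
    )


# One latent y_hat = 0 under a single Laplace(0, 1) component.
print(estimated_bits([0.0], [[1.0]], [[0.0]], [[1.0]]))  # ~1.346 = -log2(1 - e^-0.5)
```

Because each component integrates its CDF over the whole quantization bin, the masses over all integers sum to 1. As a result, $-\log_2 P(\hat y)$ is a valid code-length estimate that an arithmetic coder could approach.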
