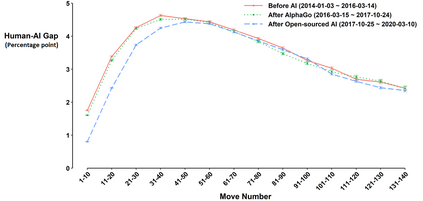

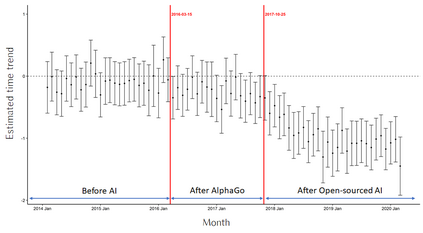

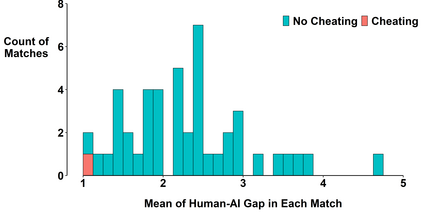

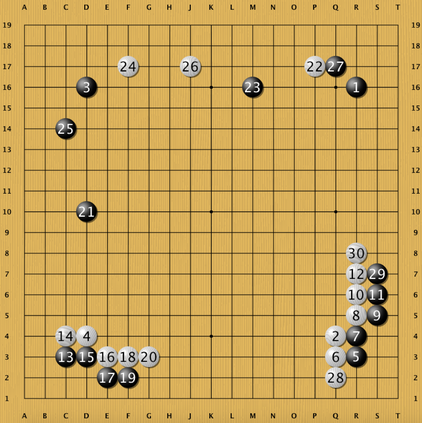

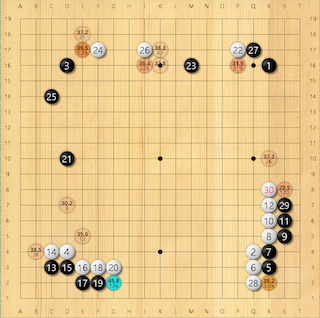

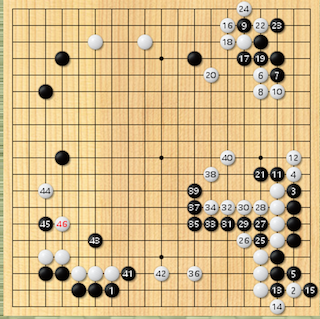

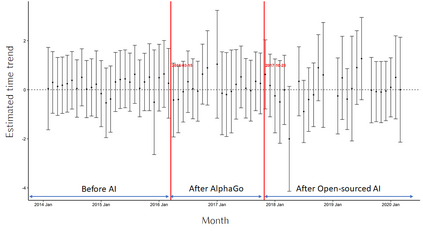

Across a growing number of domains, human experts are expected to learn from and adapt to AI with superior decision-making abilities. But how can we quantify such human adaptation to AI? We develop a simple measure of human adaptation to AI and test its usefulness in two case studies. In Case Study 1, we analyze 1.3 million move decisions made by professional Go players and find that a positive form of adaptation to AI (learning) occurred after the players could observe AI's reasoning processes, rather than merely its actions. In Case Study 2, we test whether our measure is sensitive enough to capture a negative form of adaptation to AI (AI-aided cheating), which occurred in a match between professional Go players. We discuss applications of our measure in domains other than Go, especially those in which AI's decision-making ability will likely surpass that of human experts.
