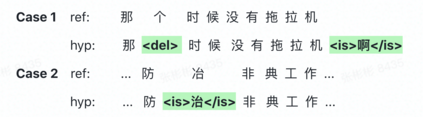

In this paper, we present WenetSpeech, a multi-domain Mandarin corpus consisting of 10000+ hours high-quality labeled speech, 2400+ hours weakly labeled speech, and about 10000 hours unlabeled speech, with 22400+ hours in total. We collect the data from YouTube and Podcast, which covers a variety of speaking styles, scenarios, domains, topics, and noisy conditions. An optical character recognition (OCR) based method is introduced to generate the audio/text segmentation candidates for the YouTube data on its corresponding video captions, while a high-quality ASR transcription system is used to generate audio/text pair candidates for the Podcast data. Then we propose a novel end-to-end label error detection approach to further validate and filter the candidates. We also provide three manually labelled high-quality test sets along with WenetSpeech for evaluation -- Dev for cross-validation purpose in training, Test_Net, collected from Internet for matched test, and Test\_Meeting, recorded from real meetings for more challenging mismatched test. Baseline systems trained with WenetSpeech are provided for three popular speech recognition toolkits, namely Kaldi, ESPnet, and WeNet, and recognition results on the three test sets are also provided as benchmarks. To the best of our knowledge, WenetSpeech is the current largest open-sourced Mandarin speech corpus with transcriptions, which benefits research on production-level speech recognition.

翻译:在本文中,我们展示了WenetSpeech,这是由10000+小时高质量标签演讲、24+小时低标签演讲和大约10000小时无标签演讲组成的多功能文体文体。我们收集了来自YouTube和Podcast的数据,这些数据涵盖各种语言风格、情景、领域、专题和吵闹条件。我们采用了光学字符识别(OCR)法,在相应的视频字幕中为YouTube数据生成音频/文字分解候选人,同时使用高质量的ASR抄录系统为Podcast数据生成音频/文本配对候选人。然后我们提出了一个新的端对端标签误检方法,以进一步验证和过滤候选人。我们还提供了三个人工贴有标签的高品质测试套,与WenetSpeech一起用于评价 -- -- 用于培训的交叉校验目的设计、测试网,从互联网上收集测试;Test_MeechMeelsm,记录为更具挑战性的测试记录。与WenetSpeetSpeech的基线系统进行了培训,并提供了三种最大规模的语音识别识别工具。