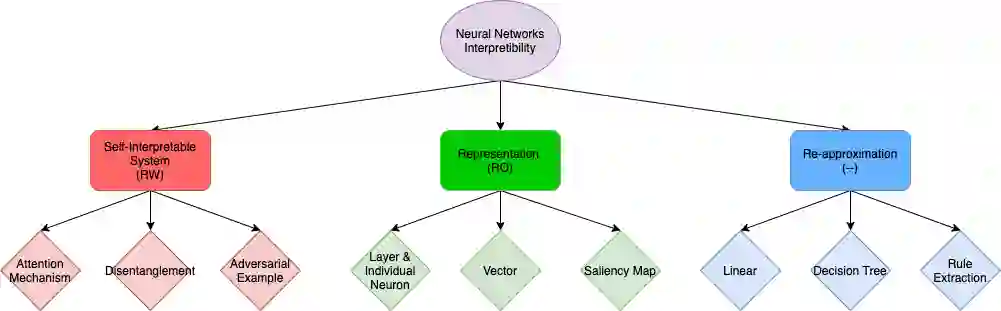

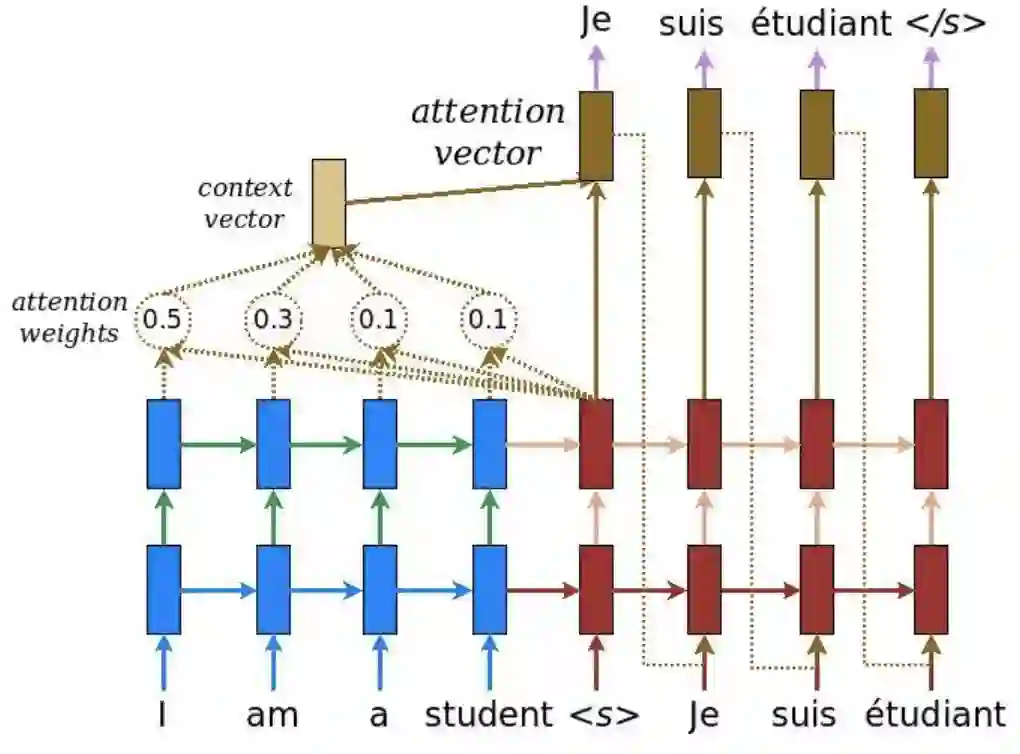

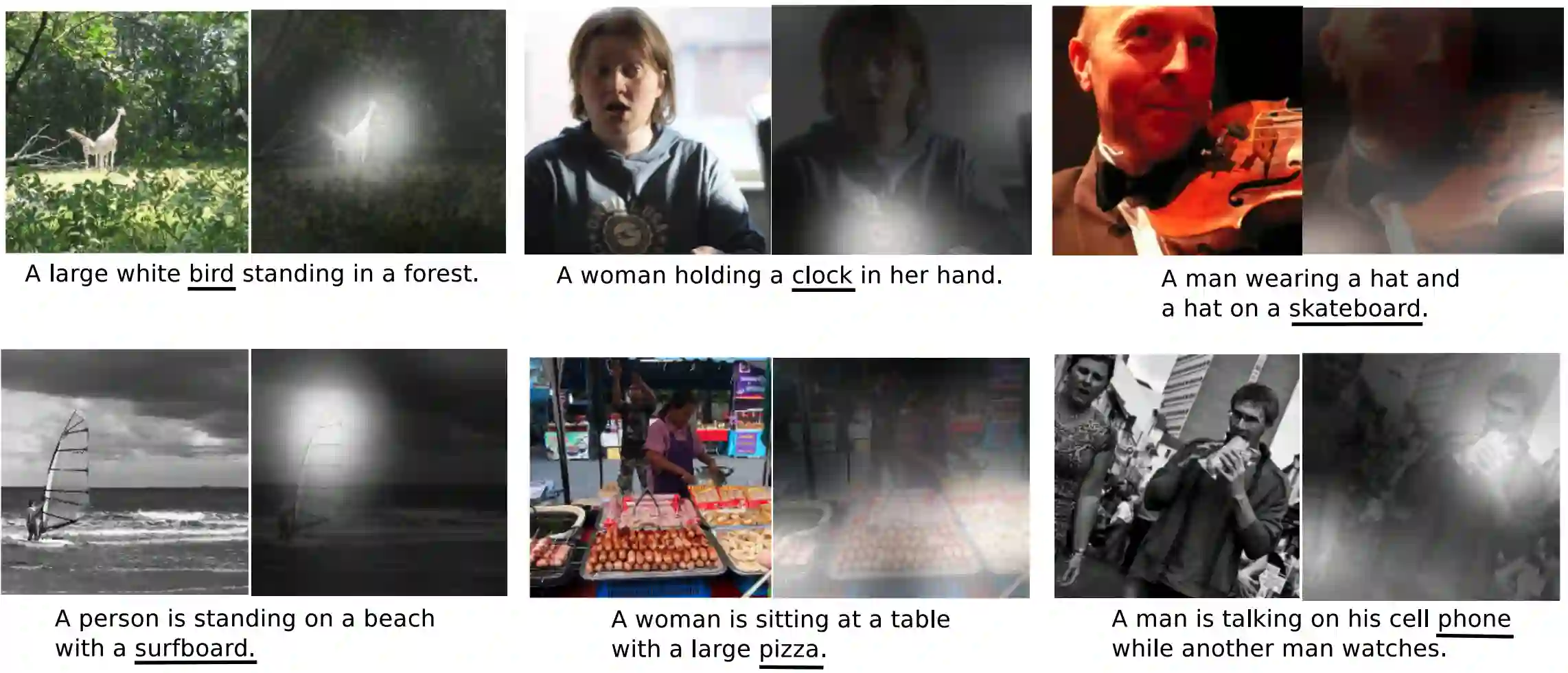

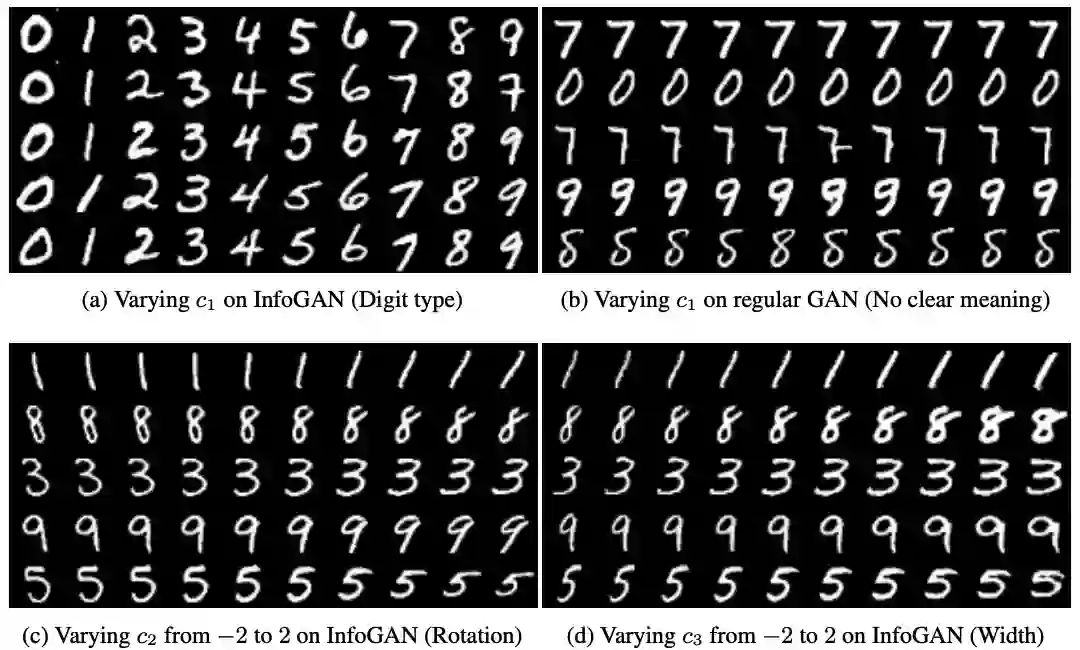

Modern deep learning algorithms typically learn by optimizing an objective metric, such as minimizing a cross-entropy loss on a training dataset. The problem is that a single metric is an incomplete description of real-world tasks, and it cannot explain why the algorithm learns. When an error occurs, this lack of interpretability makes the error hard to understand and fix. Recently, work has been done to tackle the interpretability problem and to provide insight into neural networks' behavior and thought process. This work is important for identifying potential bias and for ensuring algorithmic fairness as well as expected performance.
