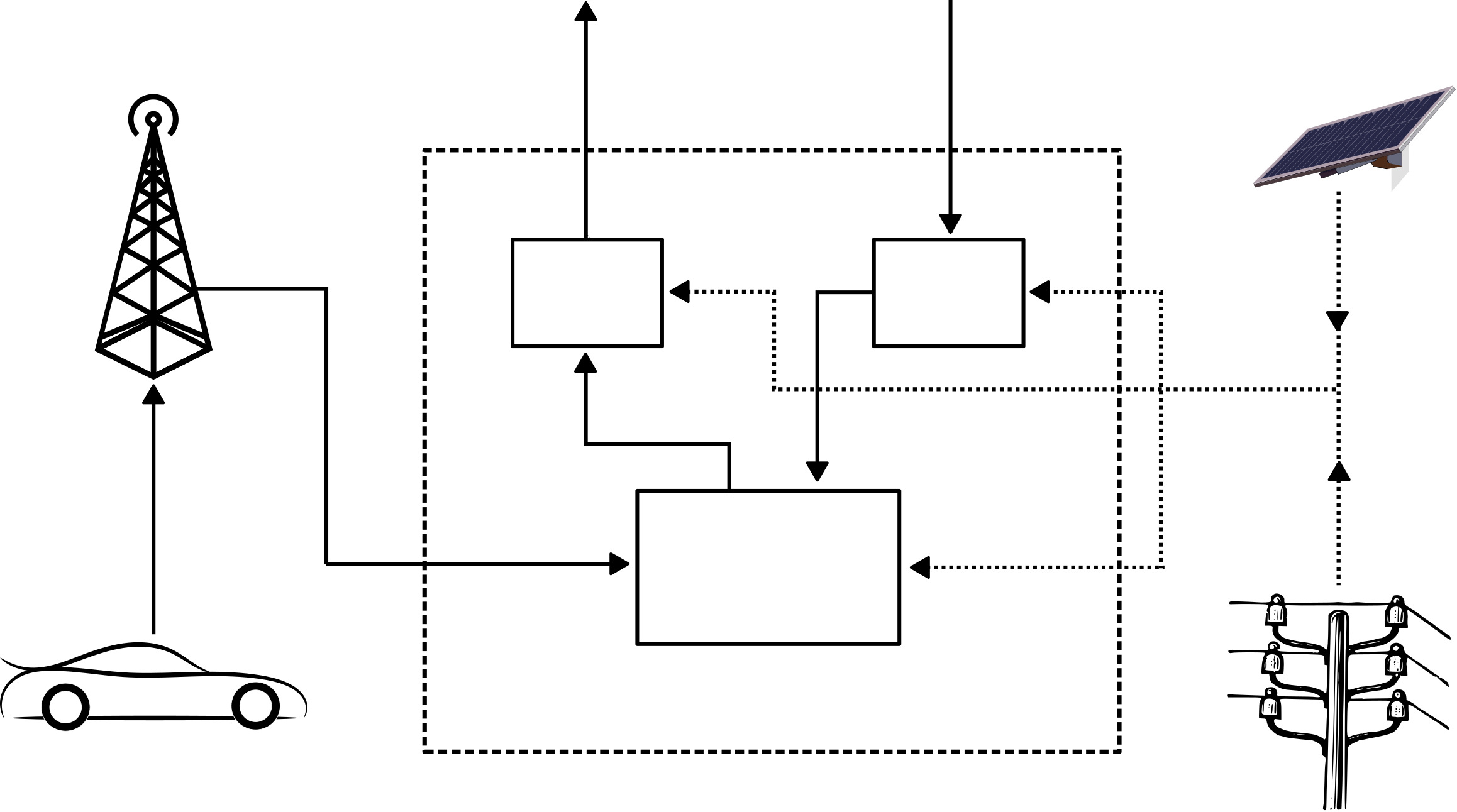

This paper addresses the energy sustainability of multi-access edge computing (MEC) platforms by developing Energy-Aware job Scheduling at the Edge (EASE), a computing resource scheduler for edge servers co-powered by renewable energy resources and the power grid. The scenario under study involves the optimal allocation and migration of time-sensitive computing tasks in a resource-constrained internet of vehicles (IoV) context. The main objective is to minimize the carbon footprint of the edge network while delivering adequate quality of service (QoS) to the end users (e.g., meeting task execution deadlines). EASE integrates i) a centralized optimization step, solved through model predictive control (MPC), which manages the renewable energy collected locally at the edge servers and their local computing resources by estimating their future availability, and ii) a distributed consensus step, solved via dual ascent in closed form, which reaches agreement on service migrations. EASE is compared with existing strategies that either always or never migrate the computing tasks. Quantitative results demonstrate that EASE achieves greater energy efficiency, often getting close to complete carbon neutrality, while also improving the QoS.
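To illustrate what a consensus step solved by dual ascent with a closed-form primal update can look like, the following is a minimal sketch on a toy problem. It is not the EASE formulation, which the abstract does not specify. It assumes each node i holds a quadratic local cost 0.5 * a_i * (x_i - c_i)^2 and that neighbouring nodes must agree on a common value x_i = x_j. The function name, the cost model, the path-graph topology, and the step size are all hypothetical choices made for illustration.

```python
def dual_ascent_consensus(costs, targets, edges, step, iters=2000):
    """Toy consensus via dual ascent (illustrative assumption, not EASE itself).

    Minimises sum_i 0.5 * costs[i] * (x_i - targets[i])**2
    subject to x_i == x_j for every (i, j) in edges.
    """
    n = len(targets)
    lam = [0.0] * len(edges)          # one dual variable (price) per edge
    x = list(targets)
    for _ in range(iters):
        # Aggregate the signed dual variables acting on each node.
        pressure = [0.0] * n
        for k, (i, j) in enumerate(edges):
            pressure[i] += lam[k]
            pressure[j] -= lam[k]
        # Primal step in closed form: set the gradient of the local
        # Lagrangian term to zero, a_i * (x_i - c_i) + pressure_i = 0.
        x = [targets[i] - pressure[i] / costs[i] for i in range(n)]
        # Dual step: gradient ascent on the disagreement across each edge.
        for k, (i, j) in enumerate(edges):
            lam[k] += step * (x[i] - x[j])
    return x, lam


# Four nodes on a path graph 0 - 1 - 2 - 3 (hypothetical example data).
costs = [1.0, 2.0, 4.0, 1.0]
targets = [1.0, 3.0, 2.0, 6.0]
edges = [(0, 1), (1, 2), (2, 3)]
x, lam = dual_ascent_consensus(costs, targets, edges, step=0.25)

# For this toy problem the agreed value is the cost-weighted mean of the targets.
weighted_mean = sum(a * c for a, c in zip(costs, targets)) / sum(costs)
```

In this sketch each node only exchanges dual variables with its neighbours, which is what makes the scheme distributed. The step size of 0.25 is chosen conservatively for this particular graph and cost set; a different topology or cost scaling would need its own step size.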
