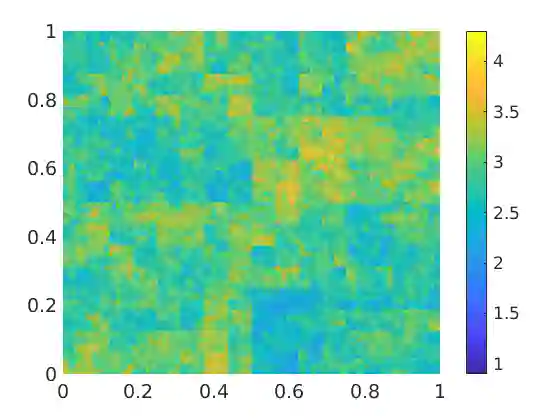

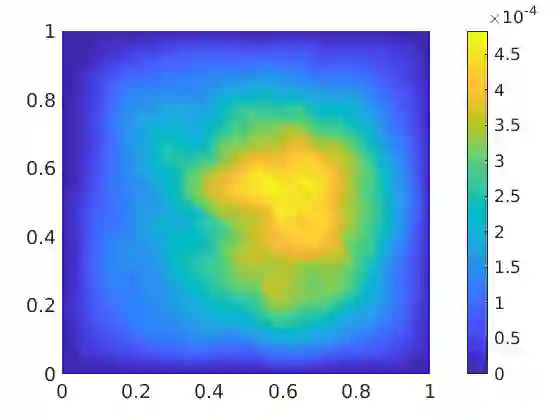

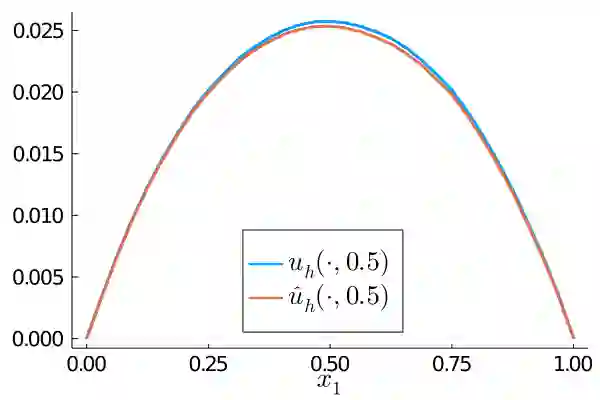

This paper studies the compression of partial differential operators using neural networks. We consider a family of operators parameterized by a potentially high-dimensional space of coefficients that may vary over a wide range of scales. Building on existing methods that compress such a multiscale operator to a finite-dimensional sparse surrogate model on a given target scale, we propose to approximate the coefficient-to-surrogate map directly with a neural network. We emulate the local assembly structure of the surrogates and thus require only a moderately sized network that can be trained efficiently in an offline phase. This enables large compression ratios, and the online computation of a surrogate, which reduces to simple forward passes through the network, is substantially faster than with classical numerical upscaling approaches. We apply the abstract framework to a family of prototypical second-order elliptic heterogeneous diffusion operators as a demonstrative example.
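To make the idea of emulating a local assembly structure concrete, the following is a minimal sketch and not the method of the paper. It assumes a one-dimensional coarse mesh, a single small network shared by all coarse elements, and 2x2 local contributions as in a standard finite element assembly. The weights are random and untrained, and all names (`make_mlp`, `local_patch`, `assemble_surrogate`) are hypothetical.

```python
import math
import random


def make_mlp(sizes, seed=0):
    """Random (untrained) weights for a small fully connected network."""
    rng = random.Random(seed)
    layers = []
    for n_in, n_out in zip(sizes[:-1], sizes[1:]):
        scale = 1.0 / math.sqrt(n_in)
        W = [[rng.gauss(0.0, scale) for _ in range(n_in)] for _ in range(n_out)]
        layers.append((W, [0.0] * n_out))
    return layers


def forward(layers, x):
    """Forward pass with ReLU on the hidden layers and a linear output layer."""
    for i, (W, b) in enumerate(layers):
        x = [sum(w * v for w, v in zip(row, x)) + bi for row, bi in zip(W, b)]
        if i < len(layers) - 1:
            x = [max(0.0, v) for v in x]
    return x


def local_patch(coeff, elem, n_elems, fine_per_elem, radius):
    """Fine-scale coefficient values on the coarse elements within `radius`
    of `elem`; elements outside the domain are padded with zeros (assumption)."""
    patch = []
    for e in range(elem - radius, elem + radius + 1):
        if 0 <= e < n_elems:
            patch.extend(coeff[e * fine_per_elem:(e + 1) * fine_per_elem])
        else:
            patch.extend([0.0] * fine_per_elem)
    return patch


def assemble_surrogate(layers, coeff, n_elems, fine_per_elem, radius):
    """Sum the network's 2x2 local outputs into a global coarse-scale matrix."""
    n_nodes = n_elems + 1
    S = [[0.0] * n_nodes for _ in range(n_nodes)]
    for elem in range(n_elems):
        patch = local_patch(coeff, elem, n_elems, fine_per_elem, radius)
        out = forward(layers, patch)  # 4 numbers, read as a 2x2 local matrix
        for a in range(2):
            for b in range(2):
                S[elem + a][elem + b] += out[2 * a + b]
    return S


# Example setup (all values are illustrative assumptions).
n_elems, fine_per_elem, radius = 8, 4, 1
n_inputs = (2 * radius + 1) * fine_per_elem
layers = make_mlp([n_inputs, 16, 4])

# An oscillating fine-scale coefficient, one value per fine cell.
coeff = [1.0 + 0.5 * math.sin(7.0 * k) for k in range(n_elems * fine_per_elem)]
S = assemble_surrogate(layers, coeff, n_elems, fine_per_elem, radius)
```

Because every coarse element is processed by the same small network, the input dimension depends only on the patch size and not on the size of the global coefficient space. In this sketch the assembled matrix is tridiagonal. A practical version would train the network offline against surrogates computed by a classical upscaling method and would store the result in a sparse format.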
