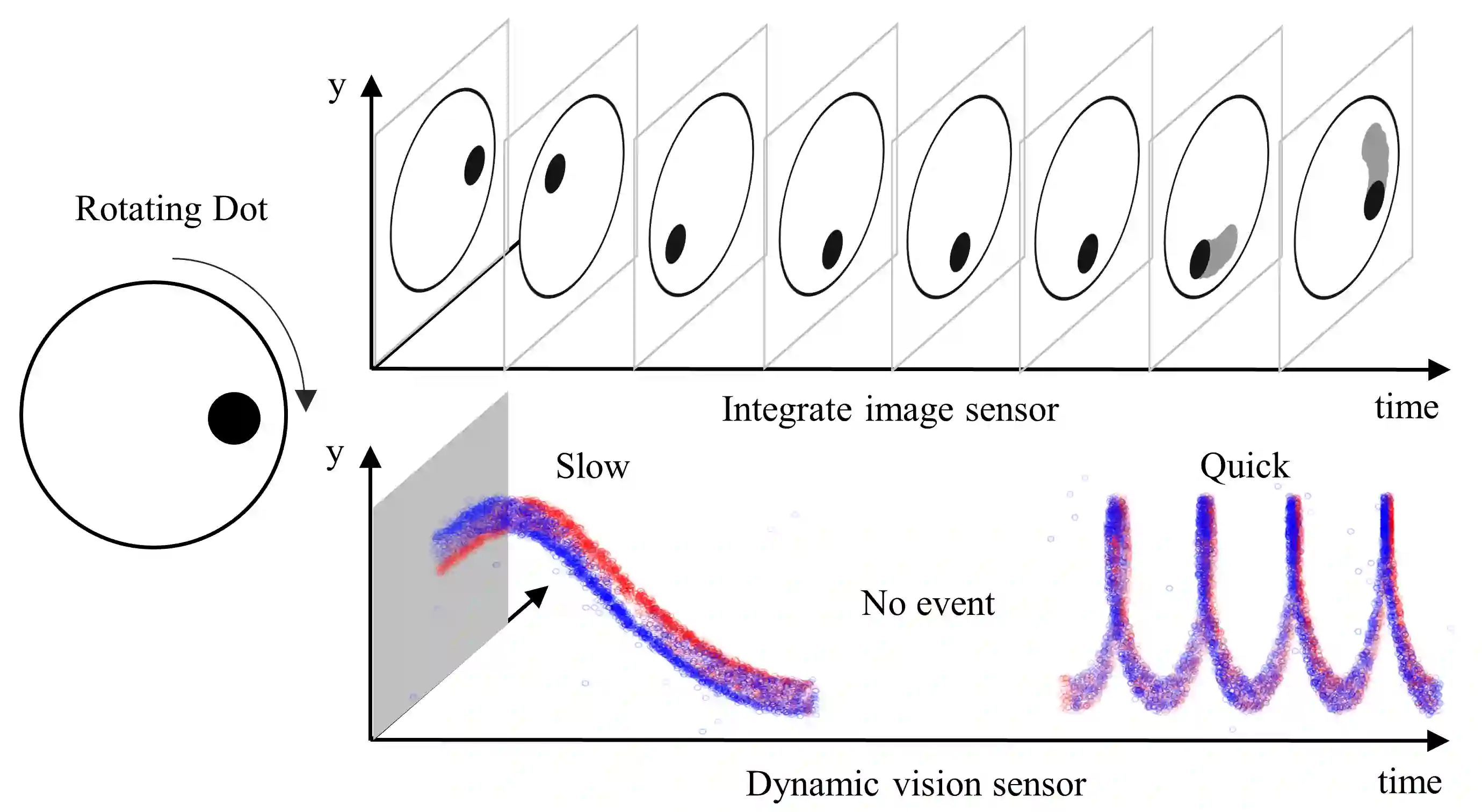

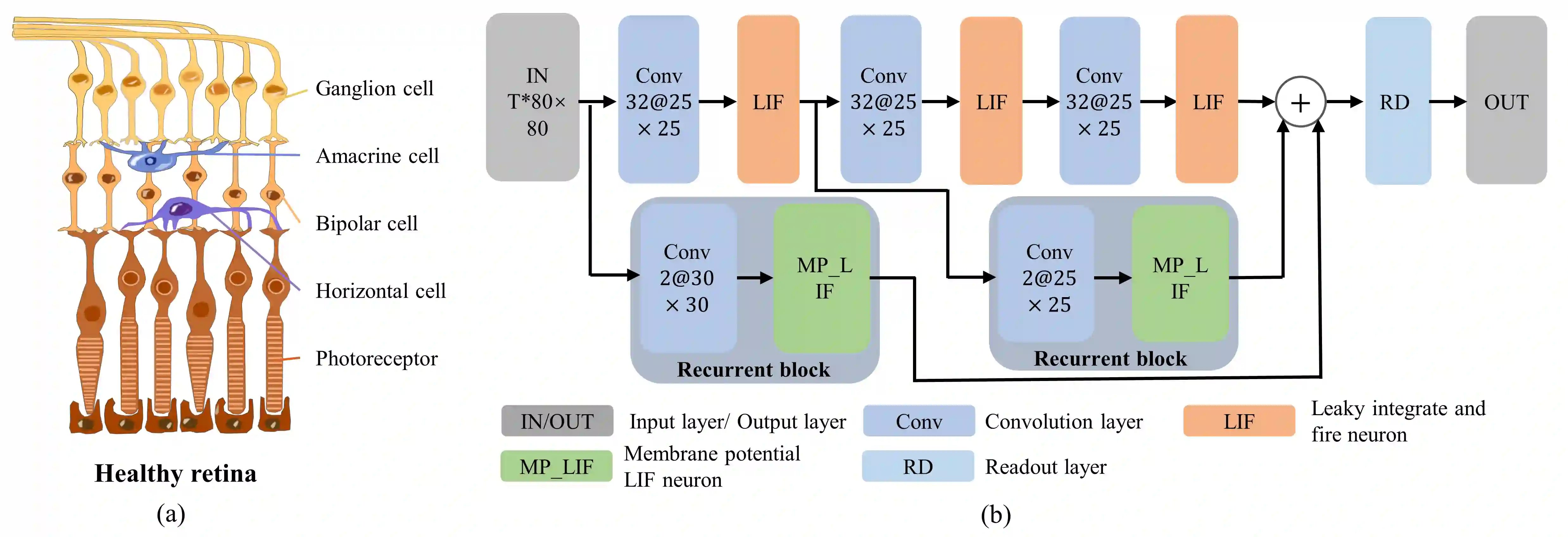

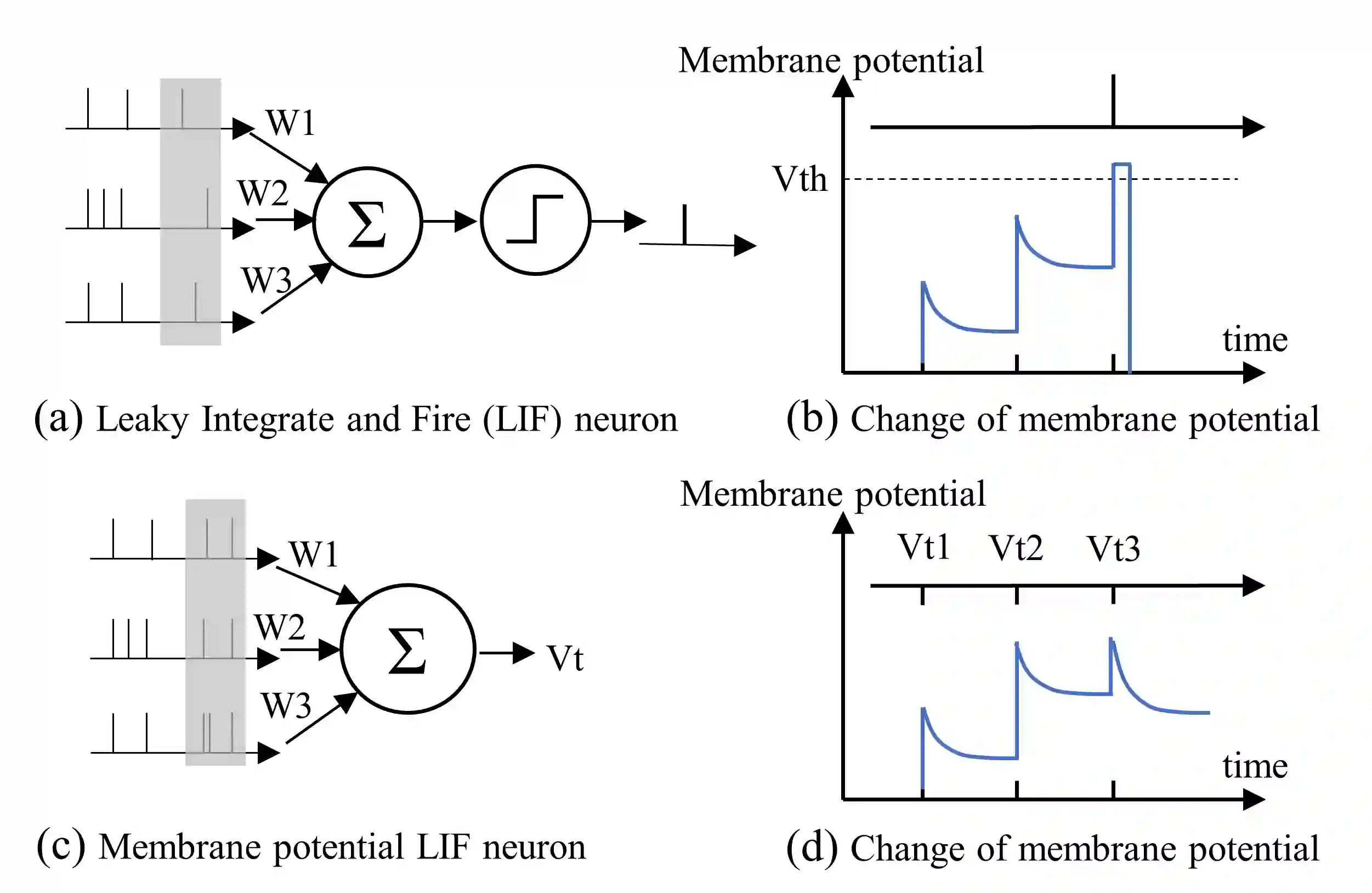

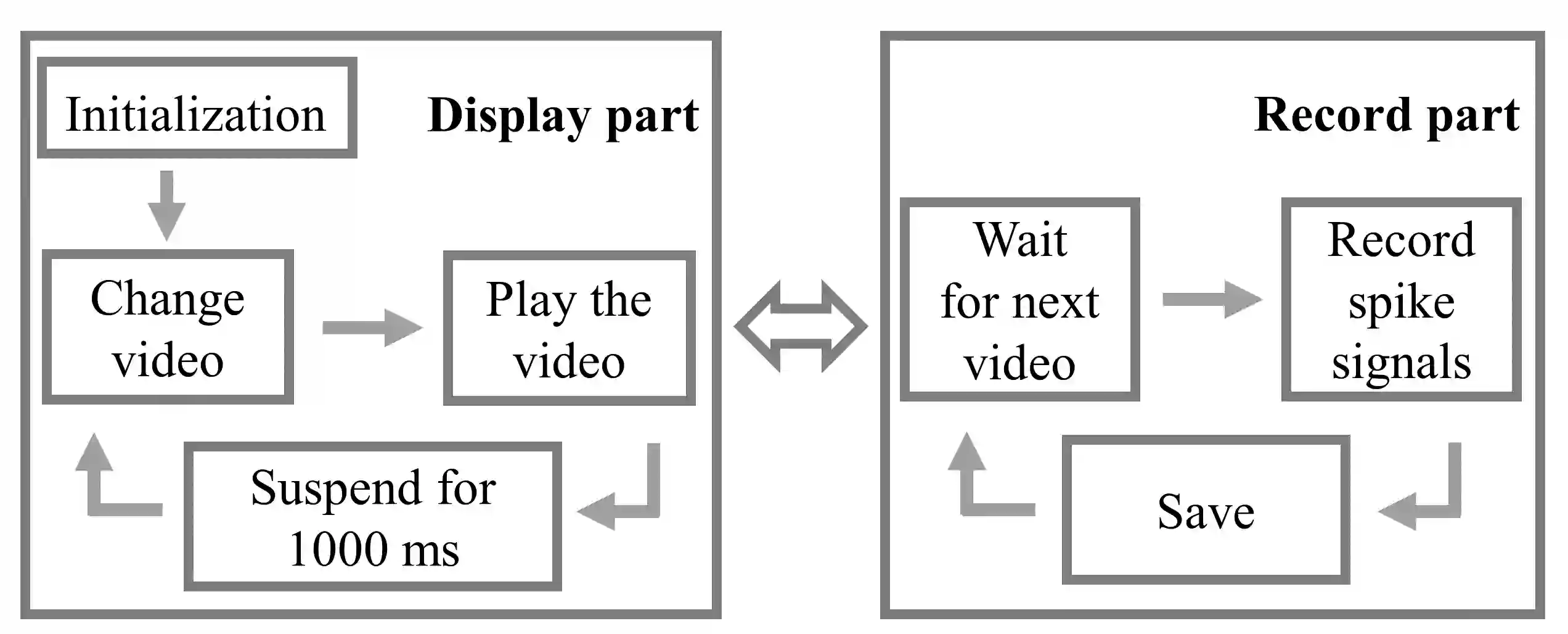

Intelligent, low-power retinal prostheses are in high demand in an era when wearable and implantable devices serve numerous healthcare applications. In this paper, we propose an energy-efficient dynamic scene processing framework (SpikeSEE) that combines a spike representation encoding technique with a bio-inspired spiking recurrent neural network (SRNN) model to achieve intelligent processing and extremely low-power computation for retinal prostheses. The spike representation encoding technique represents dynamic scenes as sparse spike trains, reducing the data volume. The SRNN model, inspired by the special structure of the human retina and its spike-based processing, is adopted to predict the response of ganglion cells to dynamic scenes. Experimental results show that the proposed SRNN model achieves a Pearson correlation coefficient of 0.93, outperforming the state-of-the-art processing framework for retinal prostheses. Thanks to the spike representation and SRNN processing, the model can extract visual features in a multiplication-free fashion. The framework achieves a 12-fold power reduction compared with a framework based on convolutional recurrent neural network (CRNN) processing. The proposed SpikeSEE predicts the response of ganglion cells more accurately with lower energy consumption, which alleviates the precision and power issues of retinal prostheses and provides a potential solution for wearable or implantable prostheses.
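
The abstract does not specify the encoding scheme or the neuron model, so the following is only a minimal sketch under stated assumptions, not the SpikeSEE implementation. It assumes a temporal-contrast (frame-difference, event-camera style) spike encoder and a recurrent leaky integrate-and-fire (LIF) layer with integer weights. The function names and parameters (`encode_spikes`, `srnn_layer`, `pearson`, `threshold`, `v_th`, `leak_shift`) are hypothetical. The point it illustrates is why binary spike inputs allow multiplication-free feature extraction: each synaptic update is a sum of the weight rows selected by active spikes, and the membrane leak is a bit shift. The `pearson` helper shows the kind of metric behind the reported 0.93, presumably computed between predicted and recorded ganglion-cell responses.

```python
import numpy as np


def encode_spikes(frames, threshold):
    """Hypothetical temporal-contrast encoder (event-camera style).

    frames: integer array of shape (T, H, W).
    Returns a bool array of shape (T - 1, 2 * H * W): the first H*W columns are
    ON spikes (pixel brightened by more than `threshold` since the previous
    frame), the last H*W columns are OFF spikes (pixel darkened by more than it).
    """
    frames = np.asarray(frames, dtype=np.int32)
    diff = frames[1:] - frames[:-1]          # change between consecutive frames
    steps = diff.shape[0]
    on = (diff > threshold).reshape(steps, -1)
    off = (diff < -threshold).reshape(steps, -1)
    return np.concatenate([on, off], axis=1)


def srnn_layer(spikes, w_in, w_rec, v_th, leak_shift=3):
    """Hypothetical recurrent leaky integrate-and-fire (LIF) layer.

    spikes: bool array (T, n_in); w_in: int array (n_in, n_out);
    w_rec: int array (n_out, n_out). Returns bool output spikes (T, n_out).
    Inputs are binary, so synaptic input is a sum of the weight rows selected
    by active spikes (additions only); the leak is a right shift, so the
    update uses no multiplications.
    """
    n_out = w_in.shape[1]
    v = np.zeros(n_out, dtype=np.int64)      # membrane potentials
    prev = np.zeros(n_out, dtype=bool)       # layer spikes from the previous step
    out = []
    for s in spikes:
        # Accumulate only: select the weight rows of active input/recurrent spikes.
        current = w_in[s].sum(axis=0) + w_rec[prev].sum(axis=0)
        # Leak: subtract floor(v / 2**leak_shift) via a shift, then integrate.
        v = v - (v >> leak_shift) + current
        fired = v >= v_th
        v[fired] = 0                         # reset neurons that spiked
        prev = fired
        out.append(fired)
    return np.array(out, dtype=bool)


def pearson(pred, target):
    """Pearson correlation between predicted and recorded responses."""
    return float(np.corrcoef(pred, target)[0, 1])


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    frames = rng.integers(0, 256, size=(5, 4, 4))   # 5 frames of 4x4 pixels
    spikes = encode_spikes(frames, threshold=30)
    w_in = rng.integers(-2, 5, size=(32, 8))
    w_rec = rng.integers(-2, 3, size=(8, 8))
    out = srnn_layer(spikes, w_in, w_rec, v_th=6)
    print(spikes.shape, out.shape)                  # (4, 32) (4, 8)
    print("input spike density:", spikes.mean())
```

Run as a script, the example encodes five random 4×4 frames into 4 time steps of 32 ON/OFF channels and passes them through an 8-neuron recurrent layer. A temporal-contrast encoder emits spikes only where brightness changes, which is one way sparse spike trains can cut data volume for largely static scenes; the actual SpikeSEE encoding, network topology, and readout may differ.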
