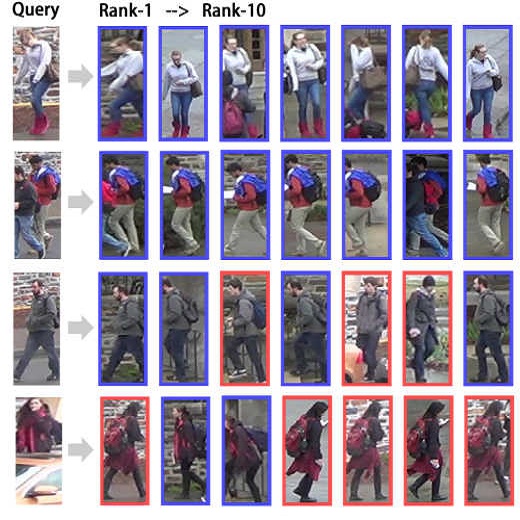

Human behavior understanding with unmanned aerial vehicles (UAVs) is of great significance for a wide range of applications, which creates an urgent demand for large, challenging, and comprehensive benchmarks to develop and evaluate UAV-based models. However, existing benchmarks are limited in the amount of captured data, the types of data modalities, the categories of tasks provided, and the diversity of subjects and environments. Here we propose a new benchmark, UAV-Human, for human behavior understanding with UAVs. It contains 67,428 multi-modal video sequences with 119 subjects for action recognition, 22,476 frames for pose estimation, 41,290 frames with 1,144 identities for person re-identification, and 22,263 frames for attribute recognition. Our dataset was collected by a flying UAV in multiple urban and rural districts, in both daytime and nighttime, over three months, and hence covers extensive diversity with respect to subjects, backgrounds, illumination, weather, occlusions, camera motions, and UAV flying attitudes. Such a comprehensive and challenging benchmark should help advance research on UAV-based human behavior understanding, including action recognition, pose estimation, re-identification, and attribute recognition. Furthermore, we propose a fisheye-based action recognition method that mitigates the distortions in fisheye videos by learning unbounded transformations guided by flat RGB videos. Experiments show the efficacy of our method on the UAV-Human dataset.
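The abstract only names the idea of learning unbounded transformations to undo fisheye distortion. As a rough illustration of the kind of operation such a method relies on, the sketch below resamples a frame with a per-pixel displacement field using bilinear interpolation. It is not the authors' method: here the offsets are supplied rather than learned, there is no guidance from flat RGB videos, and the function name `warp_with_offsets` and the border-clamping rule are assumptions made for this example.

```python
import numpy as np


def warp_with_offsets(image: np.ndarray, offsets: np.ndarray) -> np.ndarray:
    """Hypothetical helper: resample a 2-D `image` (H x W) at (y + dy, x + dx).

    `offsets` has shape (H, W, 2) and holds (dy, dx) for each output pixel.
    The offsets themselves are not restricted to any range ("unbounded");
    only the resulting sample positions are clamped to the image border.
    """
    h, w = image.shape
    ys, xs = np.meshgrid(np.arange(h), np.arange(w), indexing="ij")

    # Sample positions, clamped so that out-of-range samples reuse border pixels.
    sy = np.clip(ys + offsets[..., 0], 0, h - 1)
    sx = np.clip(xs + offsets[..., 1], 0, w - 1)

    # Integer corners of the cell containing each sample position.
    y0 = np.floor(sy).astype(int)
    x0 = np.floor(sx).astype(int)
    y1 = np.minimum(y0 + 1, h - 1)
    x1 = np.minimum(x0 + 1, w - 1)

    # Fractional parts act as bilinear interpolation weights.
    wy = sy - y0
    wx = sx - x0

    top = image[y0, x0] * (1 - wx) + image[y0, x1] * wx
    bottom = image[y1, x0] * (1 - wx) + image[y1, x1] * wx
    return top * (1 - wy) + bottom * wy
```

With all-zero offsets the frame is returned unchanged. A constant `dx = 1` shifts the content one pixel to the left and repeats the last column. In a learned setting, a network would predict `offsets` per frame instead of receiving them as input.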
