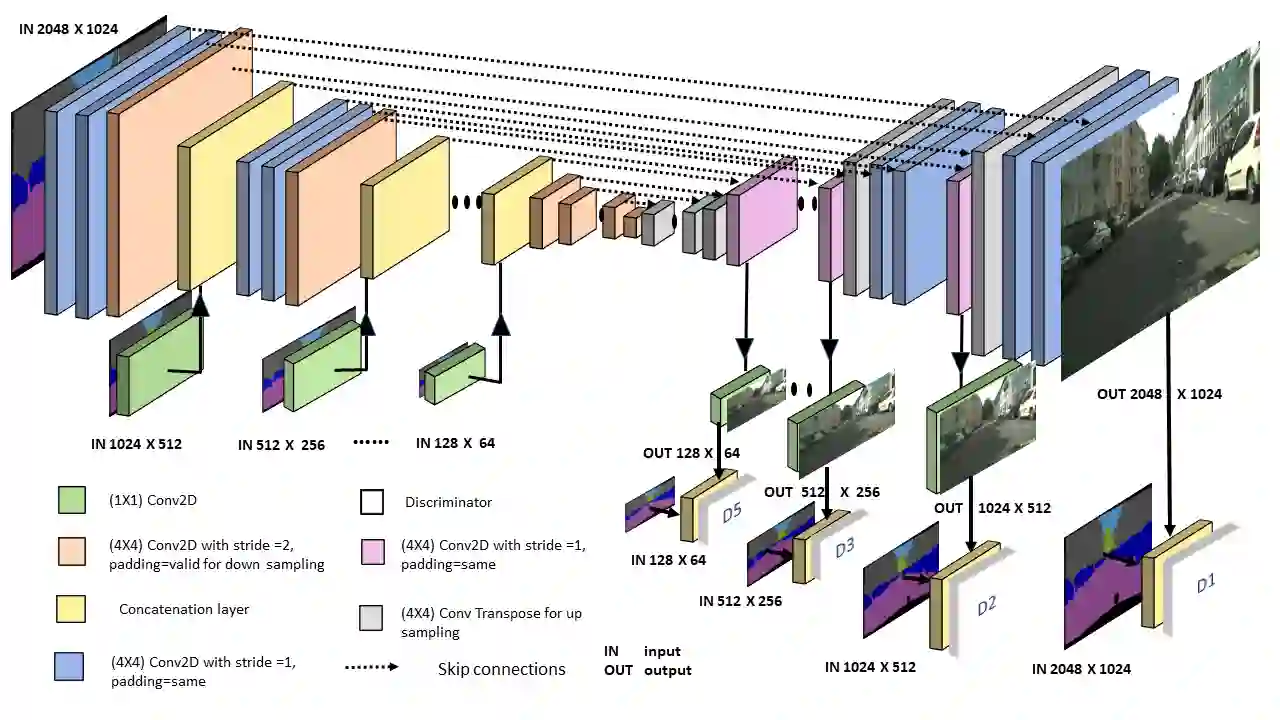

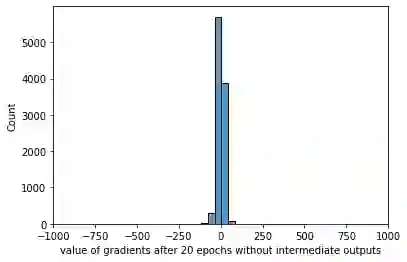

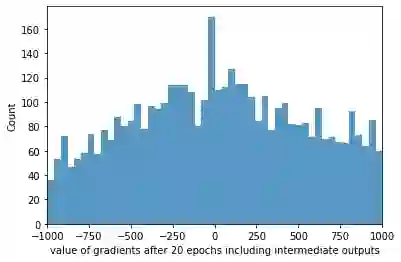

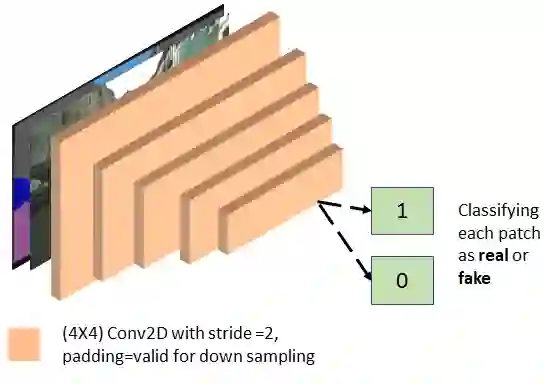

Recently, conditional generative adversarial networks (conditional GANs) have shown very promising performance in several image-to-image translation applications. However, the use of these conditional GANs has been largely limited to low-resolution images, such as 256×256. Pix2Pix-HD is a recent attempt to use a conditional GAN for high-resolution image synthesis. In this paper, we propose a Multi-Scale Gradient based U-Net (MSG U-Net) model for high-resolution image-to-image translation at resolutions up to 2048×1024. The proposed model is trained by allowing gradients to flow from multiple discriminators to a single generator at multiple scales. The proposed MSG U-Net architecture leads to photo-realistic high-resolution image-to-image translation. Moreover, the proposed model is computationally efficient compared to Pix2Pix-HD, improving inference time by nearly 2.5 times. We provide the code for the MSG U-Net model at https://github.com/laxmaniron/MSG-U-Net.
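The multi-scale gradient idea can be illustrated with a small bookkeeping sketch. This is not the authors' implementation (the linked repository contains that); it assumes, hypothetically, that each coarser generator scale halves the output resolution and that the generator's adversarial objective is a weighted sum of one loss per scale-specific discriminator. The helper names `multiscale_resolutions` and `total_generator_loss` are illustrative only.

```python
from typing import List, Optional, Sequence, Tuple


def multiscale_resolutions(width: int, height: int, num_scales: int) -> List[Tuple[int, int]]:
    """Resolution of the image emitted at each generator scale, finest first.

    Hypothetical assumption: each coarser scale halves both dimensions,
    as in a typical U-Net decoder. Scale k is judged by discriminator D_k.
    """
    if num_scales < 1:
        raise ValueError("num_scales must be >= 1")
    return [(width >> k, height >> k) for k in range(num_scales)]


def total_generator_loss(
    per_scale_losses: Sequence[float],
    weights: Optional[Sequence[float]] = None,
) -> float:
    """Weighted sum of adversarial losses, one per scale-specific discriminator.

    Because the generator objective is a sum, its gradient with respect to the
    shared generator weights is the sum of the per-scale gradients, so every
    discriminator contributes a gradient to the single generator.
    Equal weights are an illustrative default, not a value from the paper.
    """
    if weights is None:
        weights = [1.0] * len(per_scale_losses)
    if len(weights) != len(per_scale_losses):
        raise ValueError("need exactly one weight per scale")
    return sum(w * loss for w, loss in zip(weights, per_scale_losses))


if __name__ == "__main__":
    # Four hypothetical scales for a 2048x1024 target resolution.
    for k, (w, h) in enumerate(multiscale_resolutions(2048, 1024, 4)):
        print(f"scale {k}: {w}x{h} -> discriminator D_{k}")
```

Under the halving assumption, four scales at 2048×1024 give outputs of 2048×1024, 1024×512, 512×256 and 256×128. Summing the per-scale losses is what lets a single backward pass deliver gradients from all discriminators into the shared generator.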

翻译:最近,有条件的生成反影网络(有条件的GAN)在若干图像到图像翻译应用程序中表现出非常有希望的性能,然而,这些有条件的GAN的用途相当限于低分辨率图像,如256X256.Pix2Pix-HD是最近试图利用有条件的GAN进行高分辨率图像合成的尝试。在本文件中,我们提议了一个基于多比例的U-Net(MSG U-Net)模型,用于高分辨率图像到图像翻译,直至2048X1024年分辨率。我们通过允许多分辨器的梯度向多个尺度的单一生成器流动来培训拟议的模型。拟议的MSG U-Net结构导致高分辨率图像到图像转换。此外,拟议的模型在计算上效率很高,与Pix2Pix-HD(MS U-Net)连接,在推断时间上改进了近2.5倍。我们在 https://githubub.commassionMS/G-G-Net提供MSG UNet模型的代码。