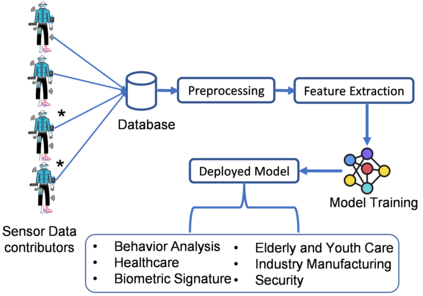

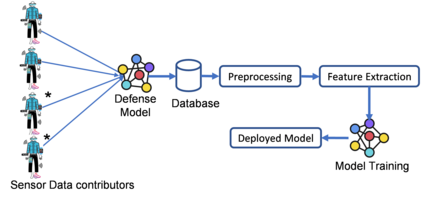

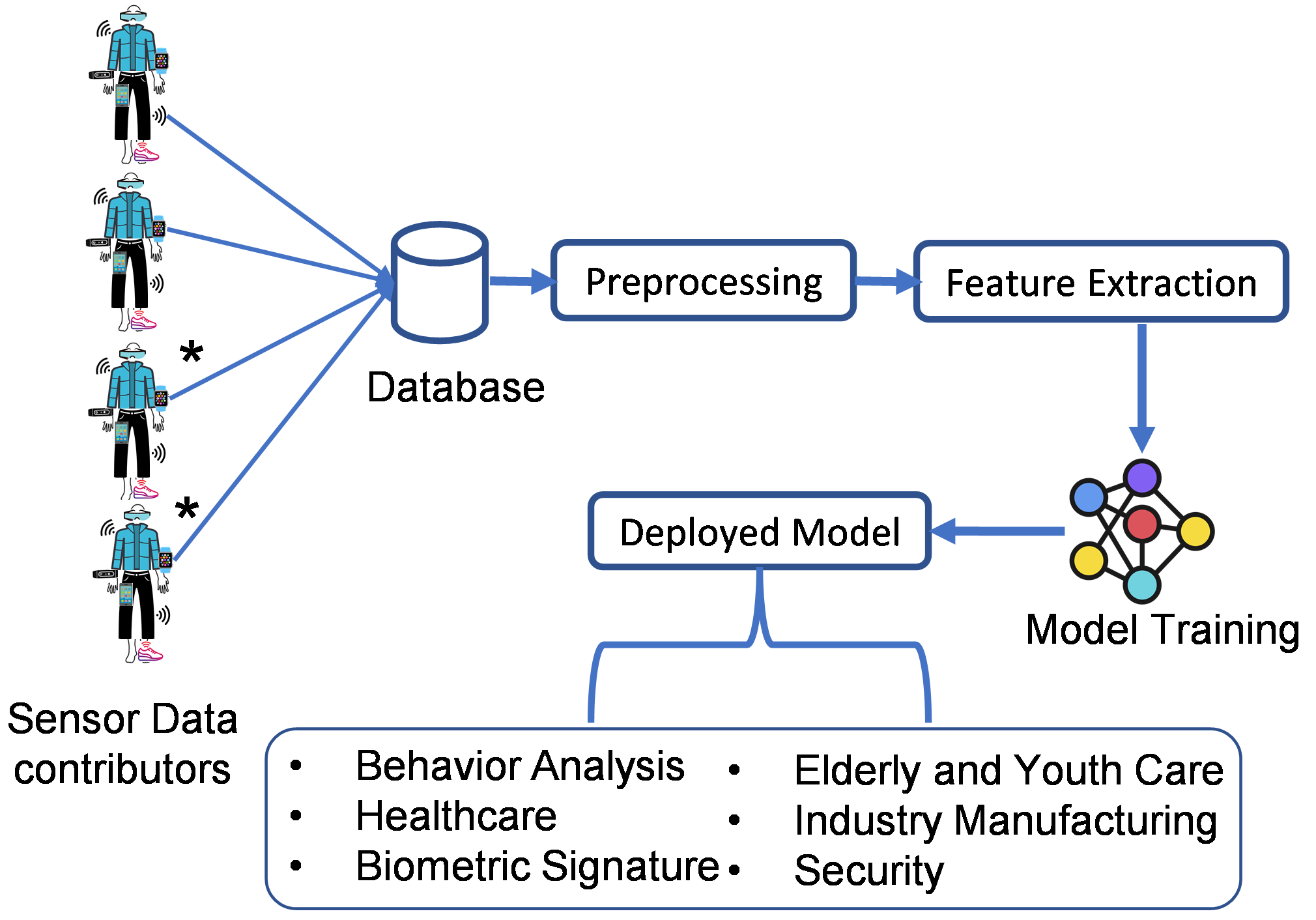

Human Activity Recognition (HAR) is the problem of interpreting sensor data as human movement using an efficient machine learning (ML) approach. HAR systems rely on data from untrusted users, which makes them susceptible to data poisoning attacks. In a poisoning attack, attackers manipulate the sensor readings to contaminate the training set, misleading the HAR model into producing erroneous outcomes. This paper presents the design of a label flipping data poisoning attack on a HAR system, in which the label of a sensor reading is maliciously changed during the data collection phase. Because the sensing environment is noisy and uncertain, such an attack poses a severe threat to the recognition system. Vulnerability to label flipping attacks is especially dangerous when activity recognition models are deployed in safety-critical applications. This paper sheds light on how to carry out the attack in practice through smartphone-based sensor data collection applications. To our knowledge, this is one of the earliest research works to explore attacking HAR models via label flipping poisoning. We implement the proposed attack and test it on activity recognition models based on the following machine learning algorithms: multi-layer perceptron, decision tree, random forest, and XGBoost. Finally, we evaluate the effectiveness of a K-nearest neighbors (KNN)-based defense mechanism against the proposed attack.
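The two ideas named in the abstract, label flipping and a KNN-based defense, can be illustrated with a minimal sketch. Everything here is an assumption made for illustration: the synthetic two-cluster "sensor" data, the activity names, the helper names `flip_labels` and `knn_sanitize`, the 10% flip rate, and the choice of neighbor-vote label sanitization as the form of KNN defense. The abstract does not specify the paper's datasets, parameters, or the exact defense procedure.

```python
import random
from collections import Counter


def flip_labels(labels, flip_rate, classes, rng):
    """Return a poisoned copy of `labels` plus the indices that were flipped.

    A fraction `flip_rate` of the labels is replaced by a different class.
    """
    poisoned = list(labels)
    n_flip = int(round(flip_rate * len(labels)))
    flipped_idx = rng.sample(range(len(labels)), n_flip)
    for i in flipped_idx:
        poisoned[i] = rng.choice([c for c in classes if c != labels[i]])
    return poisoned, sorted(flipped_idx)


def knn_sanitize(features, labels, k=5, threshold=0.6):
    """Relabel each point with its neighbors' majority label.

    The label is overwritten only when at least `threshold` of the k
    nearest neighbors (squared Euclidean distance) agree on one label.
    """
    cleaned = list(labels)
    for i, x in enumerate(features):
        dists = sorted(
            (sum((a - b) ** 2 for a, b in zip(x, y)), j)
            for j, y in enumerate(features)
            if j != i
        )
        votes = Counter(labels[j] for _, j in dists[:k])
        top_label, count = votes.most_common(1)[0]
        if count / k >= threshold:
            cleaned[i] = top_label
    return cleaned


# Hypothetical data: two well-separated 2-D clusters standing in for
# feature vectors of two activities.
rng = random.Random(0)
classes = ["walking", "running"]
features, true_labels = [], []
for label, center in zip(classes, [(0.0, 0.0), (5.0, 5.0)]):
    for _ in range(100):
        features.append((rng.gauss(center[0], 0.5), rng.gauss(center[1], 0.5)))
        true_labels.append(label)

# Attack: flip 10% of the labels, as a malicious contributor might do
# during data collection.
poisoned, flipped_idx = flip_labels(true_labels, 0.10, classes, rng)

# Defense: neighbor-vote sanitization of the poisoned labels.
cleaned = knn_sanitize(features, poisoned, k=5, threshold=0.6)

errors_before = sum(p != t for p, t in zip(poisoned, true_labels))
errors_after = sum(c != t for c, t in zip(cleaned, true_labels))
print(f"wrong labels before defense: {errors_before}, after: {errors_after}")
```

On clean, well-separated clusters like these, neighbor voting recovers most flipped labels. Real HAR features overlap far more between activities, so the same defense would be expected to do worse, which is consistent with the abstract's remark about noise and uncertainty in the sensing environment.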
