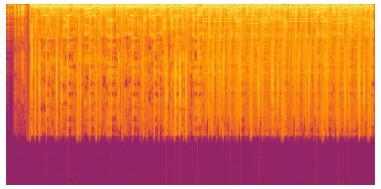

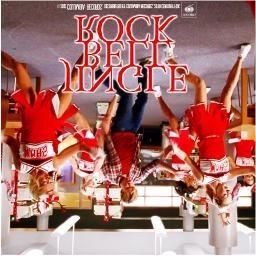

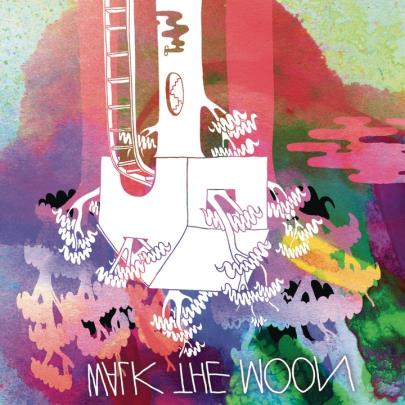

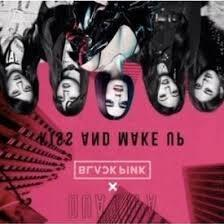

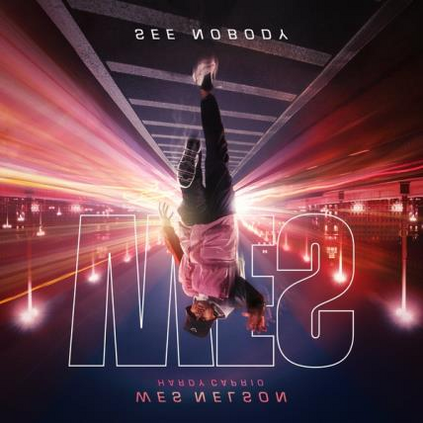

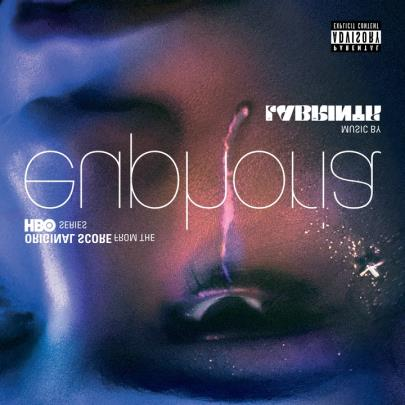

In this paper, we propose a cross-modal variational auto-encoder (CMVAE) for content-based micro-video background music recommendation. CMVAE is a hierarchical Bayesian generative model that matches relevant background music to a micro-video by projecting these two multimodal inputs into a shared low-dimensional latent space, where the embeddings of a matched video-music pair are aligned through cross-generation. Moreover, the multimodal information is fused by the product-of-experts (PoE) principle: the semantic information in the visual and textual modalities of the micro-video is weighted according to the estimated variance of each modality, so that the modality with the lower noise level receives more weight. As a result, the micro-video latent variables contain less irrelevant information, which leads to more robust generalization. Furthermore, we establish a large-scale content-based micro-video background music recommendation dataset, TT-150k, composed of approximately 3,000 different background music clips associated with 150,000 micro-videos from different users. Extensive experiments on TT-150k demonstrate the effectiveness of the proposed method. A qualitative assessment of CMVAE, based on visualizations of selected recommendation results, is also included.
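To make the variance-based weighting concrete, the following is a minimal sketch of product-of-experts fusion for diagonal Gaussian experts, not the authors' implementation. It assumes each modality encoder outputs a mean and a log-variance per latent dimension, as is common in VAEs. The product of Gaussians is again Gaussian, with precision equal to the sum of the expert precisions and mean equal to the precision-weighted average of the expert means, so a low-variance (less noisy) modality dominates. The optional standard-normal prior expert is a convention borrowed from other multimodal VAEs and is an assumption here; the function name `poe_fuse` and the example numbers are hypothetical.

```python
import math
from typing import List, Sequence, Tuple


def poe_fuse(
    means: Sequence[Sequence[float]],
    logvars: Sequence[Sequence[float]],
    include_prior: bool = True,
) -> Tuple[List[float], List[float]]:
    """Hypothetical PoE fusion of diagonal Gaussian experts N(mu_m, sigma_m^2).

    Per latent dimension:
        precision = sum_m 1 / sigma_m^2        (+ 1 if the N(0, 1) prior expert is used)
        mean      = (sum_m mu_m / sigma_m^2) / precision
    Returns the fused mean and fused log-variance.
    """
    dim = len(means[0])
    fused_mu: List[float] = []
    fused_logvar: List[float] = []
    for d in range(dim):
        # Assumed optional prior expert N(0, 1): contributes precision 1 and mean 0.
        precision = 1.0 if include_prior else 0.0
        weighted_sum = 0.0
        for mu, logvar in zip(means, logvars):
            p = math.exp(-logvar[d])  # precision 1 / sigma^2 of this expert
            precision += p
            weighted_sum += p * mu[d]
        fused_mu.append(weighted_sum / precision)
        fused_logvar.append(-math.log(precision))  # log(1 / precision)
    return fused_mu, fused_logvar


# Illustrative (made-up) encoder outputs for a 1-D latent space.
visual_mu, visual_logvar = [1.0], [math.log(0.25)]  # low-noise expert, variance 0.25
text_mu, text_logvar = [-1.0], [math.log(1.0)]      # noisier expert, variance 1.0
mu, logvar = poe_fuse(
    [visual_mu, text_mu], [visual_logvar, text_logvar], include_prior=False
)
```

Here the visual expert has precision 4 and the textual expert precision 1, so the fused mean is (4 · 1 + 1 · (−1)) / 5 = 0.6 with variance 0.2 (up to floating-point rounding): the result sits much closer to the less noisy visual expert, which is the behavior the abstract attributes to PoE fusion.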

翻译:在本文中,我们建议对基于内容的微型视频背景音乐建议采用跨模式变式自动编码器(CMVAE)。CMVAE是一种将相关背景音乐与微视相匹配的Bayesian基因化模式,将这两个多式投入投射到一个共同的低维潜在空间中,通过交叉生成实现匹配视频音乐配对配对的两个相应嵌入的低维潜在空间;此外,多式联运信息还结合了专家产品(PoE)原则,根据对微视视和文字模式中的语义信息的差异估计进行加权,使低噪声水平模式具有更大的分量;因此,微视潜在变量含有较少的无关性信息,从而形成一个更强有力的模型集成;此外,我们建立了一个基于内容的大型微型视频背景音乐建议数据集,TT-150k,由来自不同用户的大约3 000种不同的背景音乐剪辑组成,与150 000种微型视频相关。对已建立的TTT-150k数据集进行的广泛实验,也通过视觉方法展示了CMV建议的有效性。