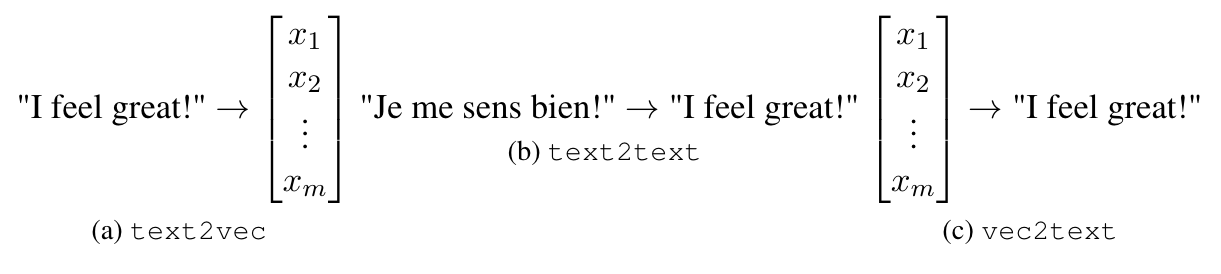

We investigate models that can generate arbitrary natural language text (e.g., all English sentences) from a bounded, convex, and well-behaved control space. We call them universal vec2text models. Such models would allow semantic decisions to be made in the vector space (e.g., via reinforcement learning) while the vec2text model handles natural language generation. We propose four properties that such vec2text models should possess, namely universality, diversity, fluency, and semantic structure, and we provide quantitative and qualitative methods to assess them. We implement a vec2text model by adding a bottleneck to a 250M-parameter Transformer model and training it with an auto-encoding objective on 400M sentences (10B tokens) extracted from a massive web corpus. We propose a simple data augmentation technique based on round-trip translations and show in extensive experiments that the resulting vec2text model, surprisingly, yields vector spaces that fulfill all four properties and strongly outperforms both standard and denoising auto-encoders.
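To make the idea of a bounded, convex control space concrete, here is a minimal sketch of what a bottleneck could look like. The abstract does not say how the bottleneck is built, so the mean pooling, the `tanh` squashing, and the names `bottleneck` and `interpolate` are all illustrative assumptions, not the authors' method.

```python
import math
from typing import List, Sequence


def bottleneck(token_states: Sequence[Sequence[float]]) -> List[float]:
    """Collapse per-token encoder states into one fixed-size, bounded vector.

    Assumption: mean pooling followed by tanh. The result lies in the open
    hypercube (-1, 1)^d, which is one example of a bounded, convex set.
    """
    if not token_states:
        raise ValueError("need at least one token state")
    dim = len(token_states[0])
    count = len(token_states)
    # Average each coordinate over all tokens.
    pooled = [sum(state[i] for state in token_states) / count for i in range(dim)]
    # Squash every coordinate into (-1, 1).
    return [math.tanh(x) for x in pooled]


def interpolate(a: Sequence[float], b: Sequence[float], t: float) -> List[float]:
    """Convex combination of two control vectors, for t in [0, 1]."""
    return [(1.0 - t) * x + t * y for x, y in zip(a, b)]


# Two toy "sentences", each a list of 2-dimensional token states.
z1 = bottleneck([[1.0, -2.0], [3.0, 0.0]])
z2 = bottleneck([[-4.0, 0.5]])
midpoint = interpolate(z1, z2, 0.5)
```

Because the hypercube is convex, any point on the segment between `z1` and `z2` is still a valid control vector, which is what would let a downstream policy move freely in the space and leave text generation to the decoder.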
