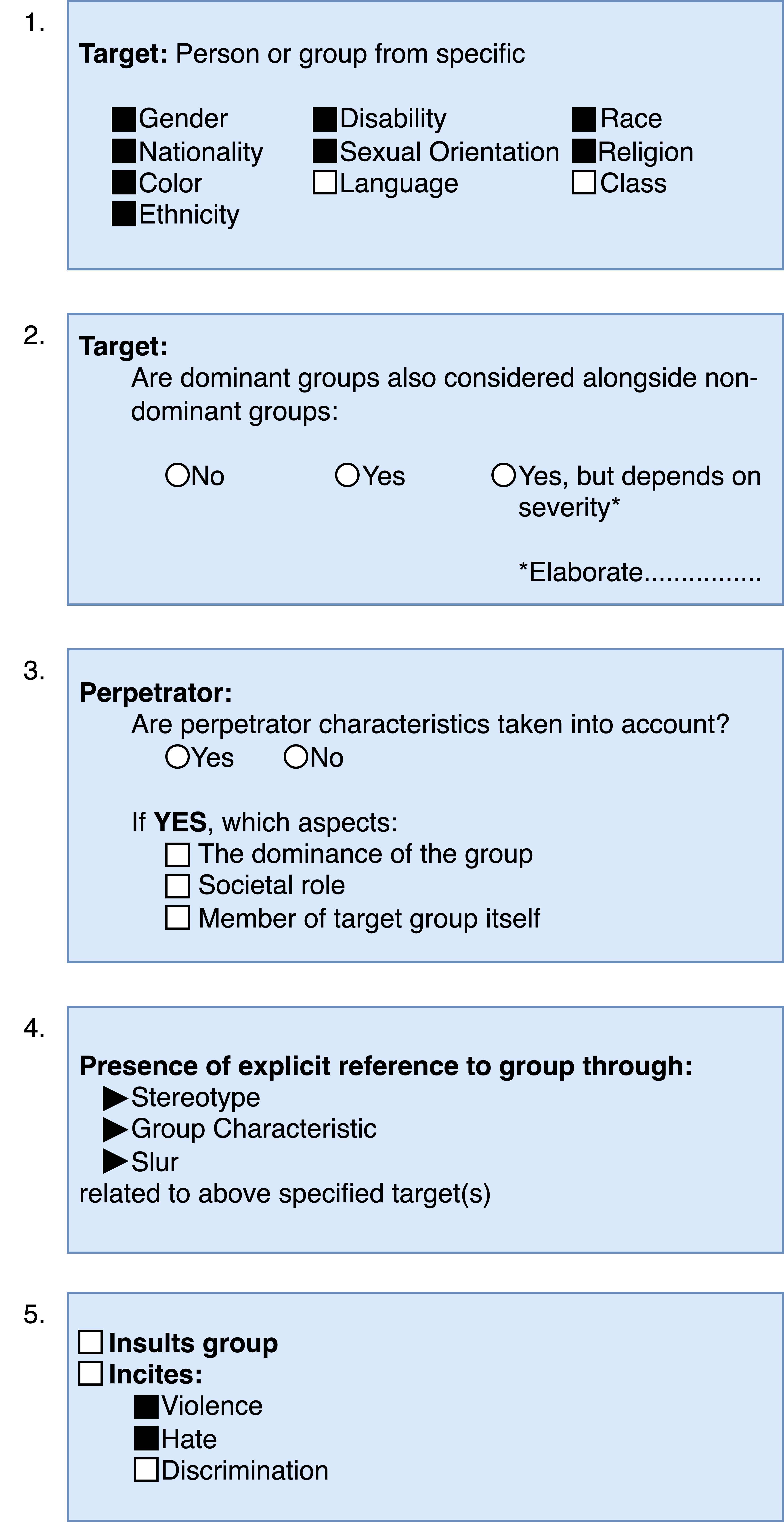

\textbf{Offensive Content Warning}: This paper contains offensive language only for providing examples that clarify this research and do not reflect the authors' opinions. Please be aware that these examples are offensive and may cause you distress. The subjectivity of recognizing \textit{hate speech} makes it a complex task. This is also reflected by different and incomplete definitions in NLP. We present \textit{hate speech} criteria, developed with perspectives from law and social science, with the aim of helping researchers create more precise definitions and annotation guidelines on five aspects: (1) target groups, (2) dominance, (3) perpetrator characteristics, (4) type of negative group reference, and the (5) type of potential consequences/effects. Definitions can be structured so that they cover a more broad or more narrow phenomenon. As such, conscious choices can be made on specifying criteria or leaving them open. We argue that the goal and exact task developers have in mind should determine how the scope of \textit{hate speech} is defined. We provide an overview of the properties of English datasets from \url{hatespeechdata.com} that may help select the most suitable dataset for a specific scenario.

翻译:\ textbf{ afference confense control} : 本文仅载有冒犯性语言, 仅用于提供澄清此项研究的示例, 而不反映作者的意见。 请注意这些示例是冒犯性的, 并可能造成您的痛苦。 承认\ textit{ hate speech} 的主观性使得它成为一项复杂的任务。 这一点也反映在NLP 中不同和不完整的定义中。 我们提出\ textit{ hate speech} 标准, 这些标准是从法律和社会科学的角度来制定的, 目的是帮助研究人员就以下五个方面制定更精确的定义和说明性指南:(1) 目标群体, (2) 主导性, (3) 行为人特征, (4) 负面群体参考类型, 以及 (5) 潜在后果/ 后果/ 后果/ 类型。 定义可以结构化, 从而涵盖范围更广或更窄的现象。 因此, 可以有意识地选择具体的标准或予以开放。 我们争辩说, 目标和确切的任务开发者在意中应该确定\ text { hate spech} 的界定范围 。 我们提供了对来自\ hatech data 的英文数据集属性的属性的概览 。