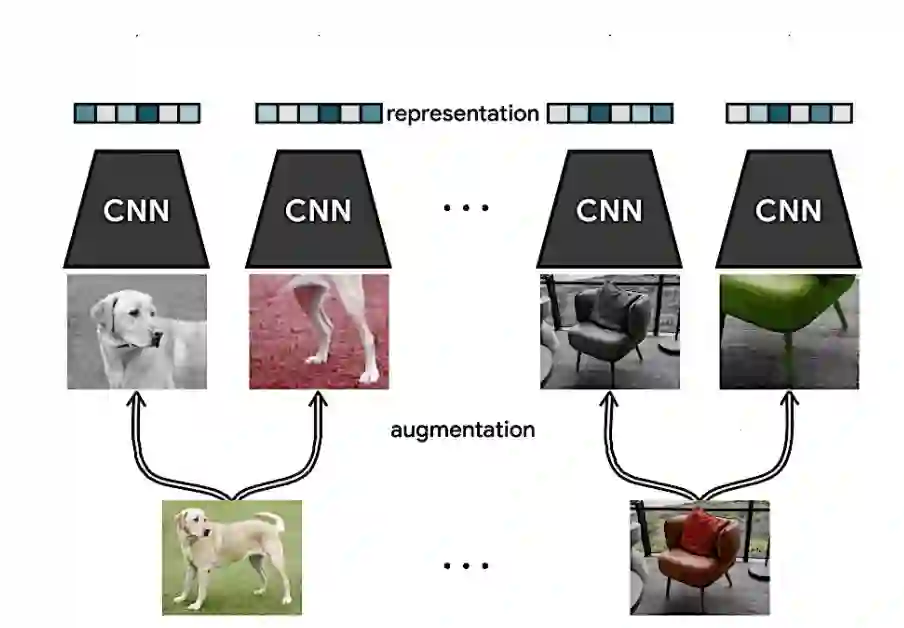

Recent advances in contrastive learning have inspired diverse applications across semi-supervised fields. Jointly training supervised and unsupervised objectives with a shared feature encoder has become a common scheme. Although this scheme benefits from both the feature-dependent information of self-supervised learning and the label-dependent information of supervised learning, it still suffers from classifier bias. In this work, we systematically explore the relationship between self-supervised learning and supervised learning, and study how self-supervised learning helps robust, data-efficient deep learning. We propose hyperspherical consistency regularization (HCR), a simple yet effective plug-and-play method that regularizes the classifier using feature-dependent information and thus avoids bias from labels. Specifically, HCR first projects the logits from the classifier and the feature projections from the projection head onto their respective hyperspheres; it then enforces that data points on the two hyperspheres have similar structures by minimizing the binary cross-entropy between similarity metrics derived from their pairwise distances. Extensive experiments on semi-supervised and weakly-supervised learning demonstrate the effectiveness of our method, with HCR yielding superior performance.
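The two steps described above (projection onto hyperspheres, then binary cross-entropy between pairwise-distance similarities) can be illustrated with a minimal NumPy sketch. This is not the authors' implementation. The abstract does not specify how distances are mapped to similarities, so the mapping `1 - d / 2`, the choice of the projection-head similarities as the target, and the function names `project_to_hypersphere`, `pairwise_similarity`, and `hcr_loss` are all assumptions made for illustration.

```python
import numpy as np


def project_to_hypersphere(x, eps=1e-12):
    """L2-normalize each row so that every point lies on the unit hypersphere."""
    norms = np.linalg.norm(x, axis=1, keepdims=True)
    return x / np.maximum(norms, eps)


def pairwise_similarity(x):
    """Map pairwise Euclidean distances on the hypersphere to similarities in [0, 1].

    Assumption: similarity = 1 - distance / 2. Distances between unit vectors
    lie in [0, 2], so this is one simple way to obtain values usable in BCE.
    """
    z = project_to_hypersphere(x)
    # For unit vectors, ||a - b||^2 = 2 - 2 * <a, b>; clip to absorb rounding error.
    squared = np.clip(2.0 - 2.0 * (z @ z.T), 0.0, 4.0)
    return 1.0 - np.sqrt(squared) / 2.0


def hcr_loss(logits, projections, eps=1e-7):
    """Binary cross-entropy between the two pairwise-similarity structures.

    Assumption: the projection-head similarities act as the target and the
    classifier-logit similarities as the prediction. In a training loop the
    target would typically be treated as a constant (no gradient).
    """
    p = np.clip(pairwise_similarity(logits), eps, 1.0 - eps)       # prediction
    q = np.clip(pairwise_similarity(projections), eps, 1.0 - eps)  # target
    bce = -(q * np.log(p) + (1.0 - q) * np.log(1.0 - p))
    # Ignore the diagonal: every point is trivially identical to itself.
    off_diagonal = ~np.eye(logits.shape[0], dtype=bool)
    return float(bce[off_diagonal].mean())


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    batch_logits = rng.normal(size=(8, 10))       # hypothetical: 8 samples, 10 classes
    batch_projections = rng.normal(size=(8, 32))  # hypothetical: 32-dim projection head
    print(hcr_loss(batch_logits, batch_projections))
```

Because the loss only compares two batch-by-batch similarity matrices, the logits and the feature projections may have different dimensionalities. Under these assumptions, such a term would be added to the usual supervised and self-supervised losses with a weighting coefficient.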
