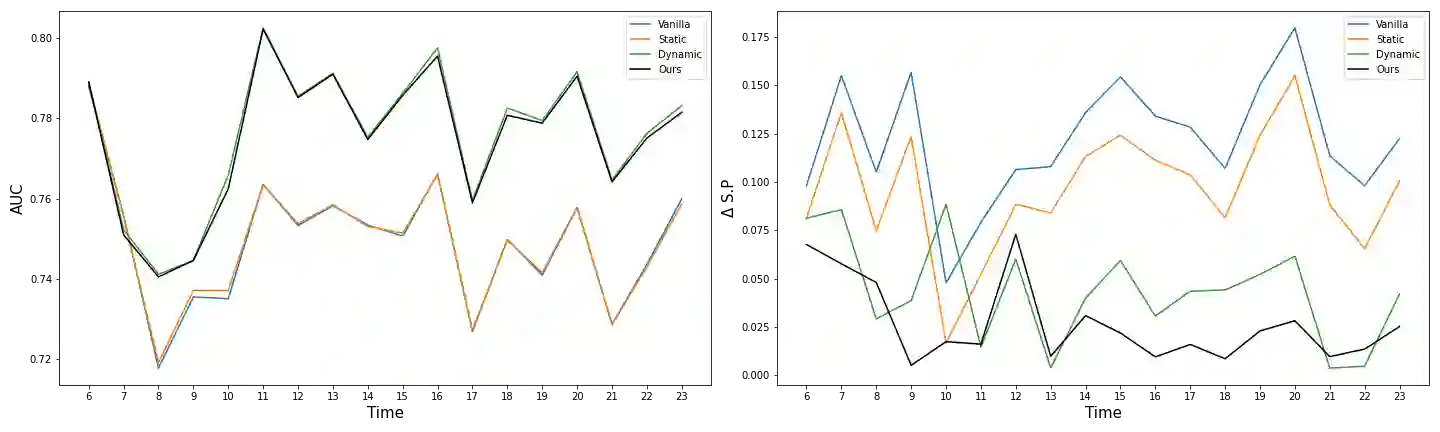

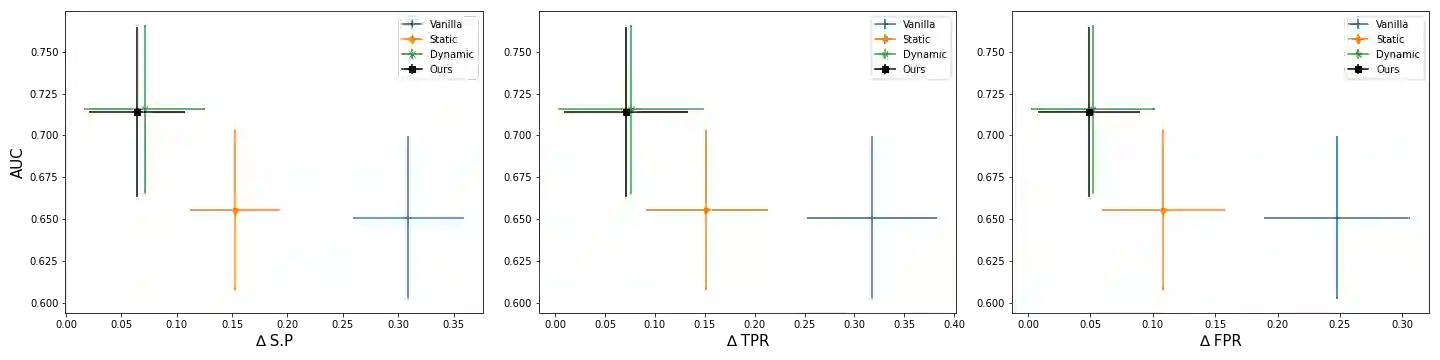

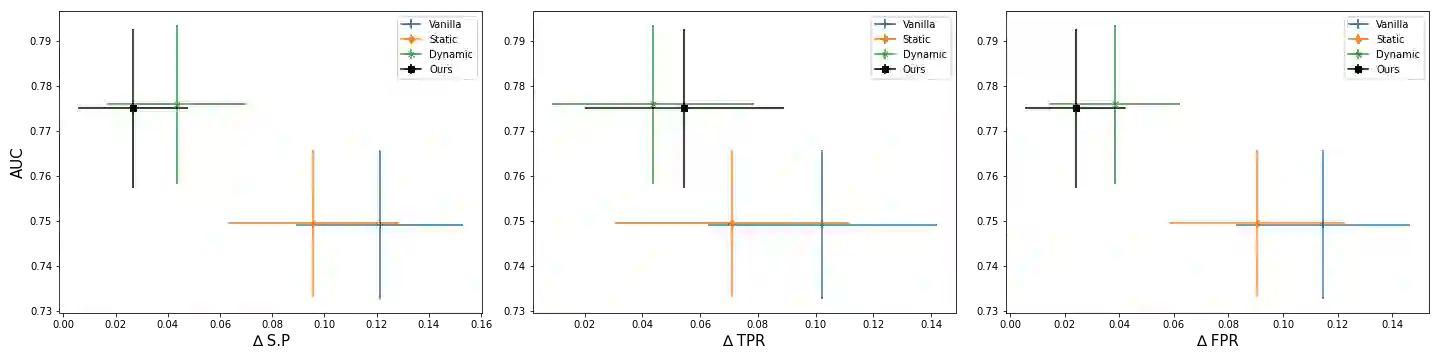

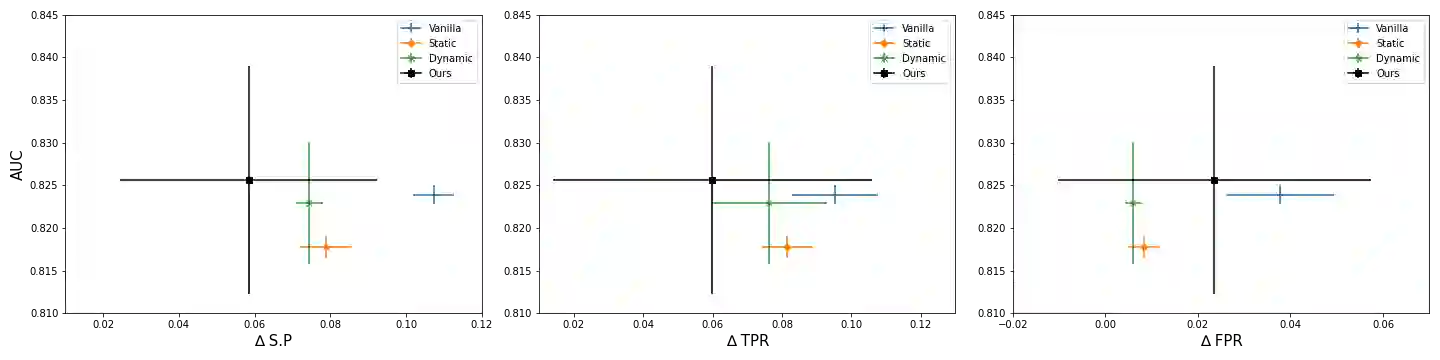

The idealization of a static machine-learned model, trained once and deployed forever, is not practical. As input distributions change over time, not only will the model lose accuracy, but any constraints meant to reduce bias against a protected class may also stop working as intended. Researchers have therefore begun to explore ways to maintain algorithmic fairness over time. One line of work focuses on dynamic learning, which retrains the model after each batch; the other focuses on robust learning, which tries to make algorithms robust against all possible future changes. Dynamic learning seeks to reduce biases soon after they have occurred, whereas robust learning often yields (overly) conservative models. We propose an anticipatory dynamic learning approach that corrects the algorithm to mitigate bias before it occurs. Specifically, we use anticipated relative distributions of population subgroups in the next cycle (e.g., the relative ratio of male to female applicants) to identify the right parameters for an importance-weighting fairness approach. Results from experiments on multiple real-world datasets suggest that this approach is promising for anticipatory bias correction.
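The abstract does not give the weighting formula, so the following is only a minimal sketch of how anticipated subgroup ratios could parameterize an importance-weighting scheme. It assumes a variant of Kamiran and Calders' reweighing, w(g, y) = P(g) P(y) / P(g, y), in which the observed group share P(g) is replaced by an anticipated next-cycle share q(g). The function name `anticipatory_weights` and the parameter `anticipated_group_ratio` are hypothetical and are not taken from the paper.

```python
from collections import Counter
from typing import Dict, Hashable, List, Sequence


def anticipatory_weights(
    groups: Sequence[Hashable],
    labels: Sequence[Hashable],
    anticipated_group_ratio: Dict[Hashable, float],
) -> List[float]:
    """Hypothetical per-sample weights w(g, y) = q(g) * P(y) / P(g, y).

    q(g) is the anticipated share of group g in the next cycle (an assumed input
    that must cover every group in `groups`); P(y) and P(g, y) are empirical
    frequencies in the current batch. When every (group, label) cell is
    non-empty, the weighted batch has group shares q, keeps the observed label
    distribution, and makes group and label statistically independent.
    """
    n = len(groups)
    if n == 0 or n != len(labels):
        raise ValueError("groups and labels must be non-empty and equally long")

    # Normalize the anticipated ratios so they sum to 1 (e.g. {"M": 1, "F": 1}).
    total = sum(anticipated_group_ratio.values())
    q = {g: r / total for g, r in anticipated_group_ratio.items()}

    label_counts = Counter(labels)
    joint_counts = Counter(zip(groups, labels))

    weights = []
    for g, y in zip(groups, labels):
        p_y = label_counts[y] / n            # empirical P(y)
        p_gy = joint_counts[(g, y)] / n      # empirical P(g, y), > 0 for observed cells
        weights.append(q[g] * p_y / p_gy)
    return weights


if __name__ == "__main__":
    # Current batch: six male (M) and two female (F) applicants.
    groups = ["M"] * 6 + ["F"] * 2
    labels = [1, 1, 1, 0, 0, 0, 1, 0]
    # Anticipate an equal share of M and F applicants in the next cycle.
    w = anticipatory_weights(groups, labels, {"M": 0.5, "F": 0.5})
    print(w)
```

In this toy batch, each M sample receives a weight of about 0.667 and each F sample a weight of 2.0, so both groups carry a total weight of 4, matching the anticipated 50/50 split. Weights of this kind can be passed as `sample_weight` to estimators whose `fit` method accepts it, such as scikit-learn's `LogisticRegression`. Choosing the weights from the anticipated distribution, rather than from the current batch alone, is what would let such a scheme act before the shift occurs, under the assumption that the anticipation is accurate.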

翻译:一次性培训和永久部署的静态机器学习模式的理想化并不现实。 随着投入分布随时间变化而变化,该模式不仅会失去准确性,任何旨在减少对受保护阶级偏见的制约因素都可能无法如预期的那样发挥作用。 因此,研究人员已开始探索如何在一段时间内保持算法公平。 一项工作侧重于动态学习:每批后再培训,另一项工作侧重于大力学习,力求使算法在将来的所有可能变化中保持稳健。 动态学习力求在出现偏差后很快减少偏差,而稳健学习往往产生保守模式。 我们提出一种预测性动态学习方法,以纠正算法,在出现偏差之前减少偏差。 具体地说,我们利用对下一个周期中人口分组相对分布的预期(如男女申请人相对比率)来确定衡量公平方法重要性的正确参数。 多个现实世界数据集的实验结果表明,这一方法有望对预测性偏差校正。