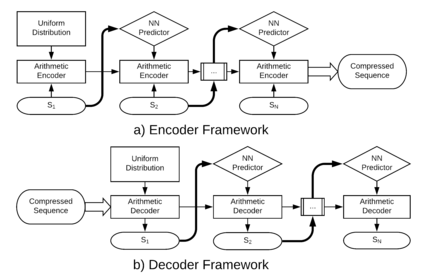

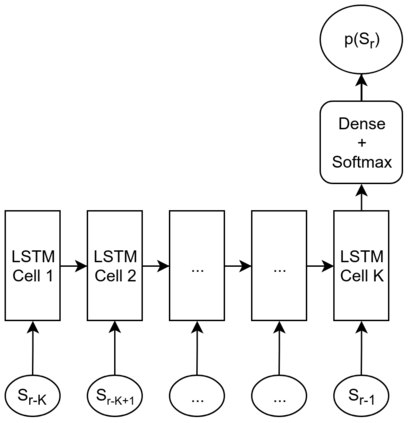

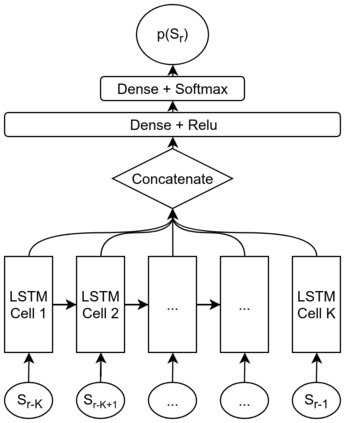

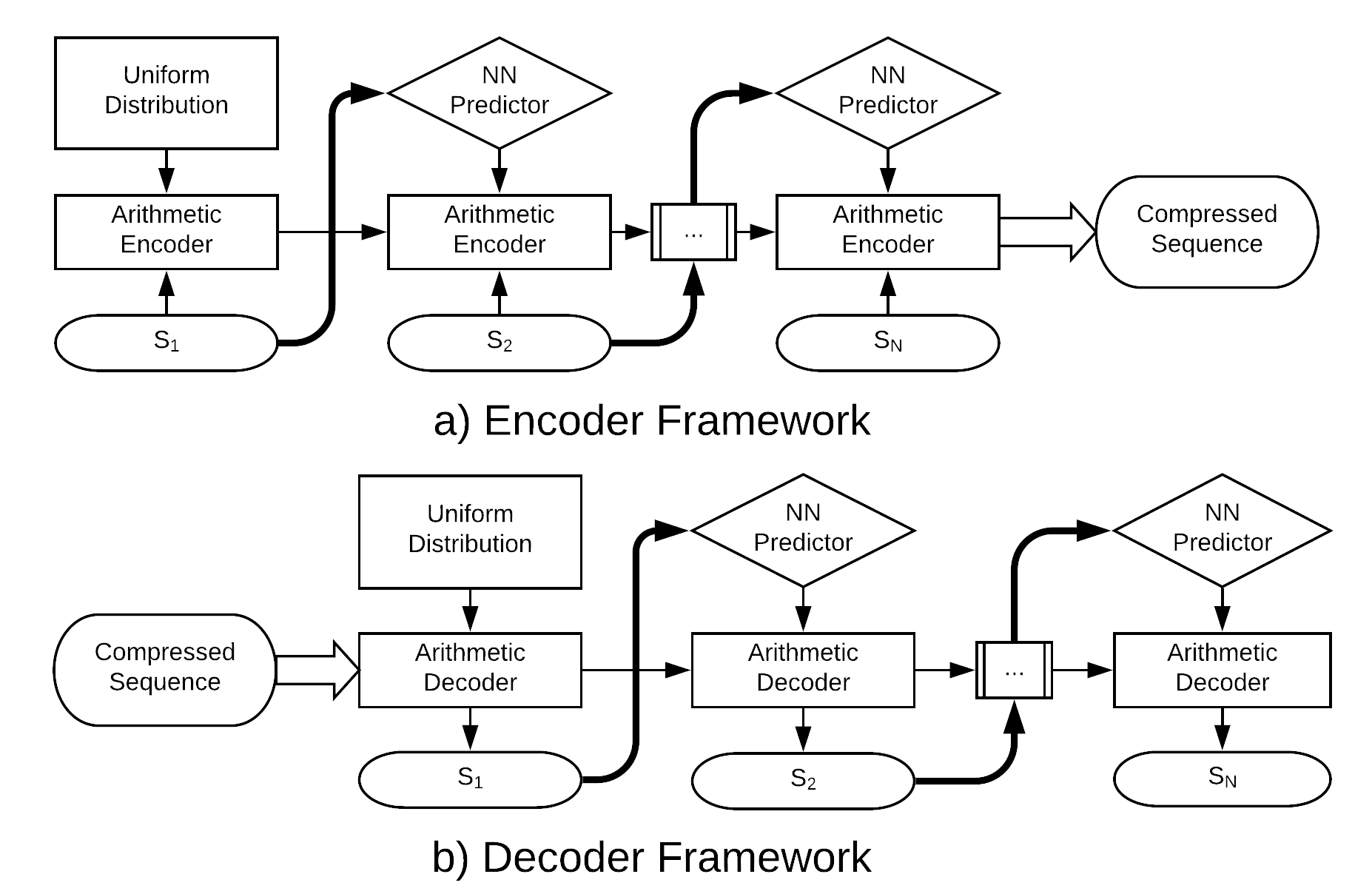

Sequential data, including text and genomic data, is being generated in various forms at an unprecedented pace. This creates the need for efficient compression mechanisms that enable better storage, transmission and processing of such data. To address this problem, many existing compressors learn a model of the data and perform prediction-based compression. Since neural networks are known to be universal function approximators capable of learning arbitrarily complex mappings, and in practice perform excellently on prediction tasks, we explore and devise methods to compress sequential data using neural network predictors. We combine recurrent neural network predictors with an arithmetic coder and losslessly compress a variety of synthetic, text and genomic datasets. The proposed compressor outperforms Gzip on the real datasets and achieves near-optimal compression on the synthetic datasets. The results also help explain why and where neural networks are good alternatives to traditional finite-context models.
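The general "predictor + arithmetic coder" pipeline can be sketched as follows. At each step the model assigns a probability distribution to the next symbol given the history. The arithmetic coder narrows an interval in proportion to the probability of the symbol that actually occurs, so the output length is close to the ideal code length $\sum_t -\log_2 p(x_t \mid x_{<t})$ bits. The decoder runs the same model in lockstep to invert the process. This is a minimal sketch under explicit assumptions, not the authors' implementation. The `ContextModel` class is a hypothetical stand-in for the recurrent network: it is an adaptive order-k count model, i.e. the kind of finite-context model the abstract compares against. Any predictor exposing the same `predict`/`update` interface could replace it. Exact `Fraction` arithmetic is used for clarity in place of the fixed-precision integer coders used in practice. The decoder is assumed to know the sequence length `n`.

```python
import math
from collections import defaultdict
from fractions import Fraction


class ContextModel:
    """Hypothetical stand-in for the neural predictor: adaptive order-k symbol counts."""

    def __init__(self, alphabet, order=2):
        self.size = len(alphabet)
        self.order = order
        # Add-one (Laplace) smoothing so every symbol keeps a nonzero probability.
        self.counts = defaultdict(lambda: [1] * self.size)

    def _ctx(self, history):
        return tuple(history[-self.order:]) if self.order else ()

    def predict(self, history):
        # Integer frequencies for each symbol; probability = count / total.
        return list(self.counts[self._ctx(history)])

    def update(self, history, symbol_index):
        self.counts[self._ctx(history)][symbol_index] += 1


def encode(symbols, alphabet, order=2):
    """Return (bit string, ideal code length in bits) for the sequence."""
    model = ContextModel(alphabet, order)
    index = {s: i for i, s in enumerate(alphabet)}
    low, width = Fraction(0), Fraction(1)
    history, ideal = [], 0.0
    for s in symbols:
        counts = model.predict(history)
        total, i = sum(counts), index[s]
        # Narrow [low, low + width) to the sub-interval assigned to symbol s.
        low += width * Fraction(sum(counts[:i]), total)
        width *= Fraction(counts[i], total)
        ideal -= math.log2(counts[i] / total)
        model.update(history, i)
        history.append(s)
    # Emit the shortest binary fraction m / 2^k lying inside the final interval.
    k = 1
    while True:
        m = math.ceil(low * 2**k)
        if Fraction(m, 2**k) < low + width:
            return format(m, f"0{k}b"), ideal
        k += 1


def decode(bits, n, alphabet, order=2):
    """Recover n symbols by replaying the same model and interval updates."""
    model = ContextModel(alphabet, order)
    value = Fraction(int(bits, 2), 2 ** len(bits))
    low, width = Fraction(0), Fraction(1)
    history, out = [], []
    for _ in range(n):
        counts = model.predict(history)
        total, cum = sum(counts), 0
        # Find the symbol whose sub-interval contains the encoded value.
        for i, c in enumerate(counts):
            if value < low + width * Fraction(cum + c, total):
                break
            cum += c
        low += width * Fraction(cum, total)
        width *= Fraction(counts[i], total)
        model.update(history, i)
        history.append(alphabet[i])
        out.append(alphabet[i])
    return out


text = "abracadabra" * 20
alphabet = sorted(set(text))
bits, ideal = encode(text, alphabet)
assert "".join(decode(bits, len(text), alphabet)) == text
print(8 * len(text), len(bits), round(ideal, 1))
```

The final interval contains a multiple of $2^{-k}$ as soon as $2^{-k}$ is no larger than its width. The emitted length is therefore at most $\lceil \sum_t -\log_2 p(x_t \mid x_{<t}) \rceil$ bits (and at least one bit). Compression quality depends entirely on how well the predictor models the data, which is why a stronger predictor such as a recurrent network can improve on a fixed finite-context model.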
