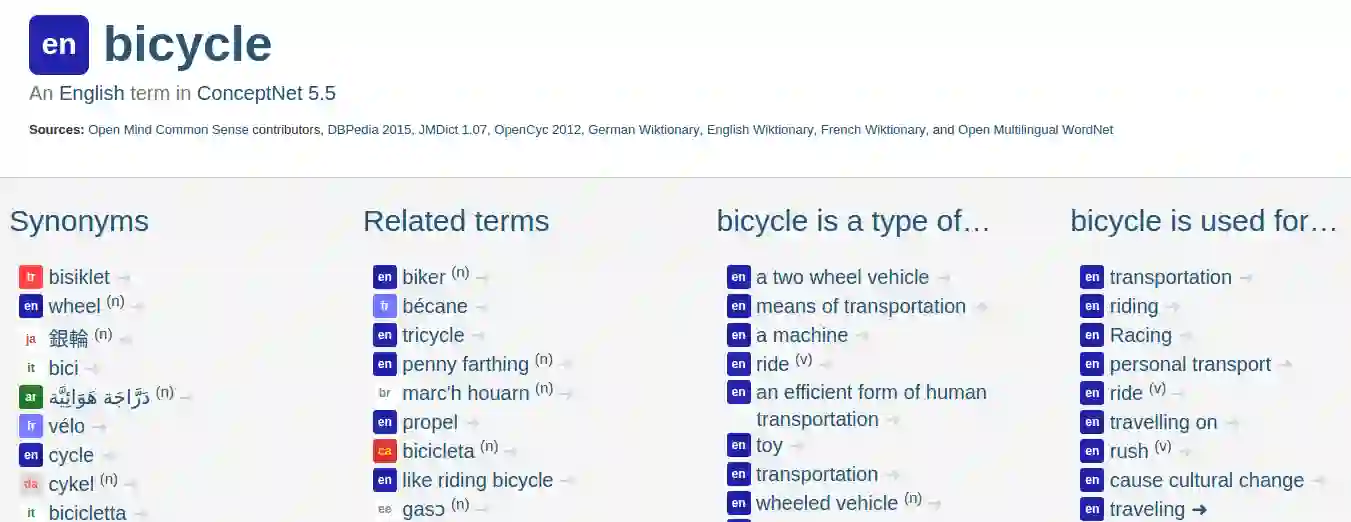

Machine learning about language can be improved by supplying it with specific knowledge and sources of external information. We present here a new version of the linked open data resource ConceptNet that is particularly well suited to be used with modern NLP techniques such as word embeddings. ConceptNet is a knowledge graph that connects words and phrases of natural language with labeled edges. Its knowledge is collected from many sources that include expert-created resources, crowd-sourcing, and games with a purpose. It is designed to represent the general knowledge involved in understanding language, improving natural language applications by allowing them to better understand the meanings behind the words people use. When ConceptNet is combined with word embeddings acquired from distributional semantics (such as word2vec), it provides applications with an understanding that they would not acquire from distributional semantics alone, nor from narrower resources such as WordNet or DBpedia. We demonstrate this with state-of-the-art results on intrinsic evaluations of word relatedness that translate into improvements in applications of word vectors, including solving SAT-style analogies.
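The abstract does not say how ConceptNet is combined with word embeddings. One well-known generic technique for this is retrofitting (Faruqui et al., 2015). It nudges each distributional vector toward the vectors of its graph neighbors while keeping it close to its original value. The sketch below illustrates that idea on a tiny labeled-edge graph. It is not the paper's pipeline. The edges and 2-d vectors are hypothetical toy data written in ConceptNet's `/c/en/...` and `/r/...` URI style. The function names `neighbors`, `retrofit`, and `cosine` are illustrative. Edge labels are ignored when building neighborhoods.

```python
import math
from collections import defaultdict

# Hypothetical toy edges (start, relation, end) in ConceptNet URI style;
# not taken from the real ConceptNet graph.
EDGES = [
    ("/c/en/dog", "/r/IsA", "/c/en/pet"),
    ("/c/en/cat", "/r/IsA", "/c/en/pet"),
    ("/c/en/dog", "/r/RelatedTo", "/c/en/bark"),
]

# Hypothetical 2-d "distributional" vectors standing in for word2vec output.
VECTORS = {
    "/c/en/dog": [1.0, 0.0],
    "/c/en/cat": [0.0, 1.0],
    "/c/en/pet": [0.5, 0.5],
    "/c/en/bark": [1.0, 0.2],
}


def neighbors(edges):
    """Build an undirected adjacency list; relation labels are discarded."""
    adj = defaultdict(set)
    for start, _rel, end in edges:
        adj[start].add(end)
        adj[end].add(start)
    return adj


def retrofit(vectors, edges, iterations=10, alpha=1.0):
    """Generic retrofitting: each updated vector is a weighted average of its
    original vector (weight alpha) and its neighbors' current vectors
    (weight 1/degree each). Original vectors are left unmodified."""
    adj = neighbors(edges)
    new = {w: list(v) for w, v in vectors.items()}
    for _ in range(iterations):
        for word, nbrs in adj.items():
            nbrs = [n for n in nbrs if n in new]
            if word not in vectors or not nbrs:
                continue
            beta = 1.0 / len(nbrs)
            total = alpha + beta * len(nbrs)  # equals alpha + 1
            new[word] = [
                (alpha * vectors[word][k] + beta * sum(new[n][k] for n in nbrs)) / total
                for k in range(len(vectors[word]))
            ]
    return new


def cosine(u, v):
    """Cosine similarity, used here as a word-relatedness score."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


if __name__ == "__main__":
    fitted = retrofit(VECTORS, EDGES)
    before = cosine(VECTORS["/c/en/dog"], VECTORS["/c/en/cat"])
    after = cosine(fitted["/c/en/dog"], fitted["/c/en/cat"])
    print(f"dog~cat before: {before:.3f}, after: {after:.3f}")
```

In the toy vectors, "dog" and "cat" are orthogonal, so their relatedness starts at zero. Both are linked to "pet" in the graph, so retrofitting pulls them toward a shared neighbor and makes their cosine similarity positive. This is the kind of knowledge a graph can add that distributional vectors may lack.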

翻译:有关语言的机器学习可以通过提供特定的知识和外部信息来源加以改进。 我们在此展示了链接的开放数据资源概念网的新版本,该新版本特别适合用于现代NLP技术,如字嵌入。 概念网是一个知识图,将自然语言的文字和短语与标签边缘连接起来。 它的知识来自许多来源,包括专家创造的资源、众包和有目的的游戏。 它旨在代表理解语言的一般知识,改进自然语言应用,使应用程序能够更好地理解人们使用词的含义。 当概念网与从分布式语义学(如Word2vec)获得的词嵌入结合起来时,它提供了应用,并理解它们不会仅仅从分布式语义学中获取,也不会从WordNet或DBBPedia等狭小的资源获取。 我们用对与词汇有关的内在评价的最新结果来证明这一点,这些内在评价可以转化为对文字矢量的应用的改进,包括解决SAT式类类比。