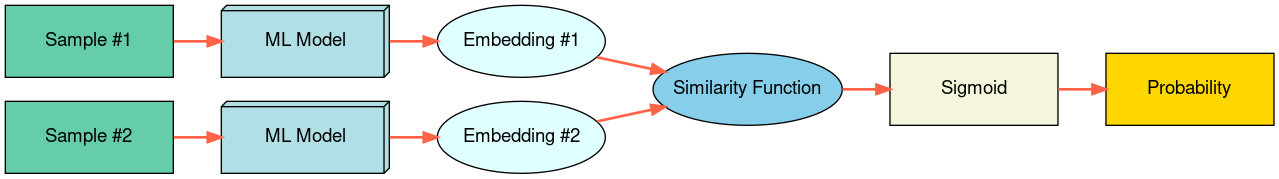

Clinical Natural Language Processing (NLP) has become an emerging technology in healthcare that leverages a large amount of free-text data in electronic health records (EHRs) to improve patient care, support clinical decisions, and facilitate clinical and translational science research. Deep learning has achieved state-of-the-art performance in many clinical NLP tasks. However, training deep learning models usually require large annotated datasets, which are normally not publicly available and can be time-consuming to build in clinical domains. Working with smaller annotated datasets is typical in clinical NLP and therefore, ensuring that deep learning models perform well is crucial for the models to be used in real-world applications. A widely adopted approach is fine-tuning existing Pre-trained Language Models (PLMs), but these attempts fall short when the training dataset contains only a few annotated samples. Few-Shot Learning (FSL) has recently been investigated to tackle this problem. Siamese Neural Network (SNN) has been widely utilized as an FSL approach in computer vision, but has not been studied well in NLP. Furthermore, the literature on its applications in clinical domains is scarce. In this paper, we propose two SNN-based FSL approaches for clinical NLP, including pre-trained SNN (PT-SNN) and SNN with second-order embeddings (SOE-SNN). We evaluated the proposed approaches on two clinical tasks, namely clinical text classification and clinical named entity recognition. We tested three few-shot settings including 4-shot, 8-shot, and 16-shot learning. Both clinical NLP tasks were benchmarked using three PLMs, including BERT, BioBERT, and BioClinicalBERT. The experimental results verified the effectiveness of the proposed SNN-based FSL approaches in both clinical NLP tasks.

翻译:深入学习在许多临床自然语言处理(NLP)任务中达到了最先进的性能。然而,深层次学习模式通常需要大量的附加说明的数据集,这些数据通常不公开提供,而且可以在临床领域建立耗时。与较小的附加说明的数据集合作是临床NLP的典型做法,因此,确保深度学习模型运行良好对于在现实应用中使用模型至关重要。广泛采用的方法是微调现有的预先培训语言模型(PLM),但当培训数据集只包含少量附加说明的样本时,这些尝试就显得不够了。最近对少的热学习(FSL)进行了调查以解决这一问题。 西亚神经网络(SNNNN)的第二个附加附加说明数据集(SNNNP)已被广泛用作基于FL的血液成本,但NB的深度模型模型模型运行对于在现实应用中使用模型模型至关重要。 此外,在临床应用中,包括SNPL在内的三部、包括S-NFS-S-S-S-S-S-S-S-S-S-S-S-S-S-S-S-S-S-S-S-S-S-S-S-S-S-S-S-S-S-S-S-S-S-S-S-S-S-S-S-S-S-S-S-S-S-S-S-S-S-S-S-S-S-S-S-S-S-S-S-S-S-S-S-S-S-S-S-S-S-S-S-S-S-S-S-S-S-S-S-S-S-S-S-S-S-S-S-S-S-S-S-S-S-S-S-S-S-S-S-S-S-S-S-S-S-S-S-S-S-S-S-S-S-S-S-S-S-S-S-S-S-S-S-S-S-S-S-S-S-S-S-S-S-S-S-S-S-S-S-S-S-S-S-S-S-S-S-S-S-S-S