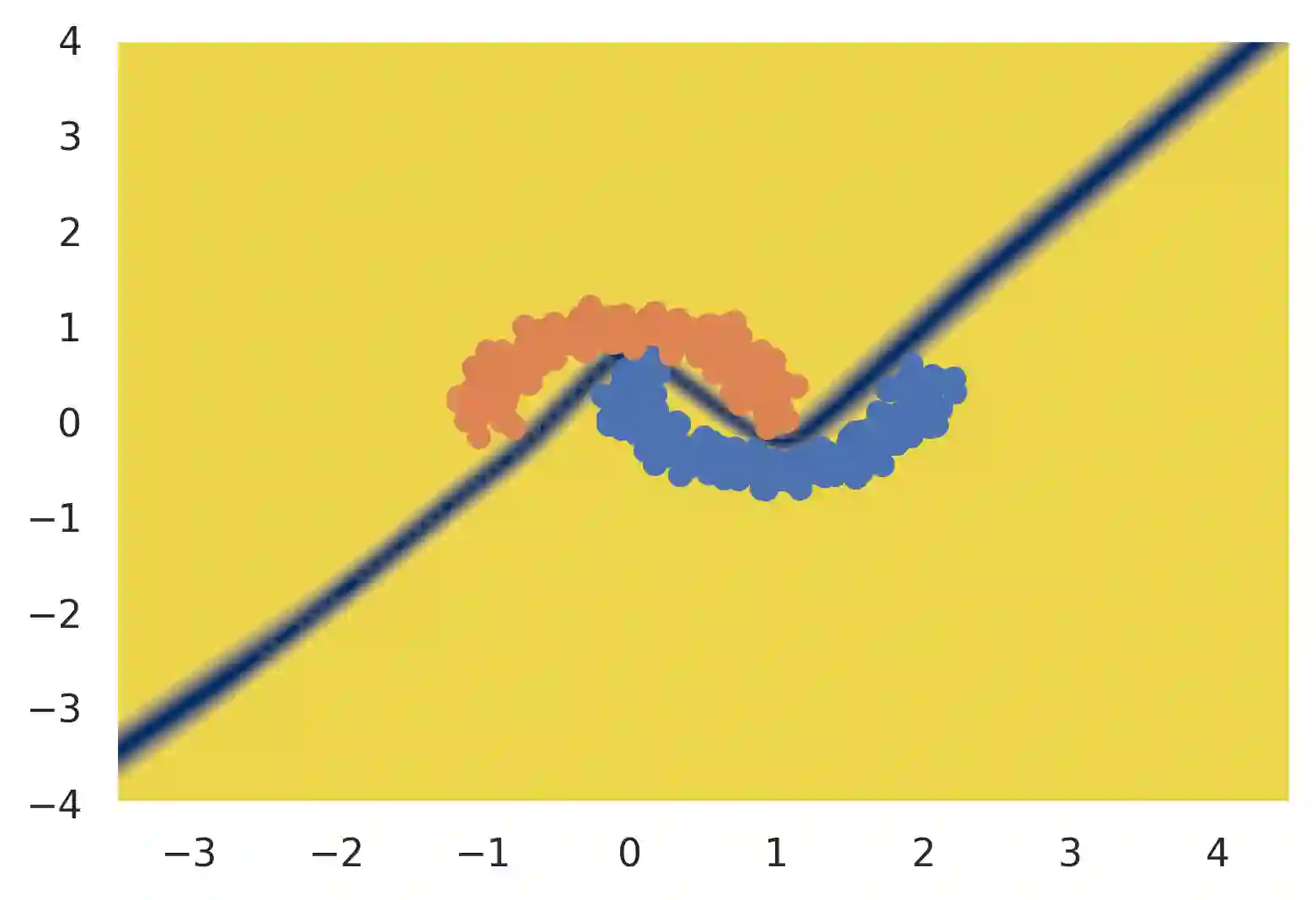

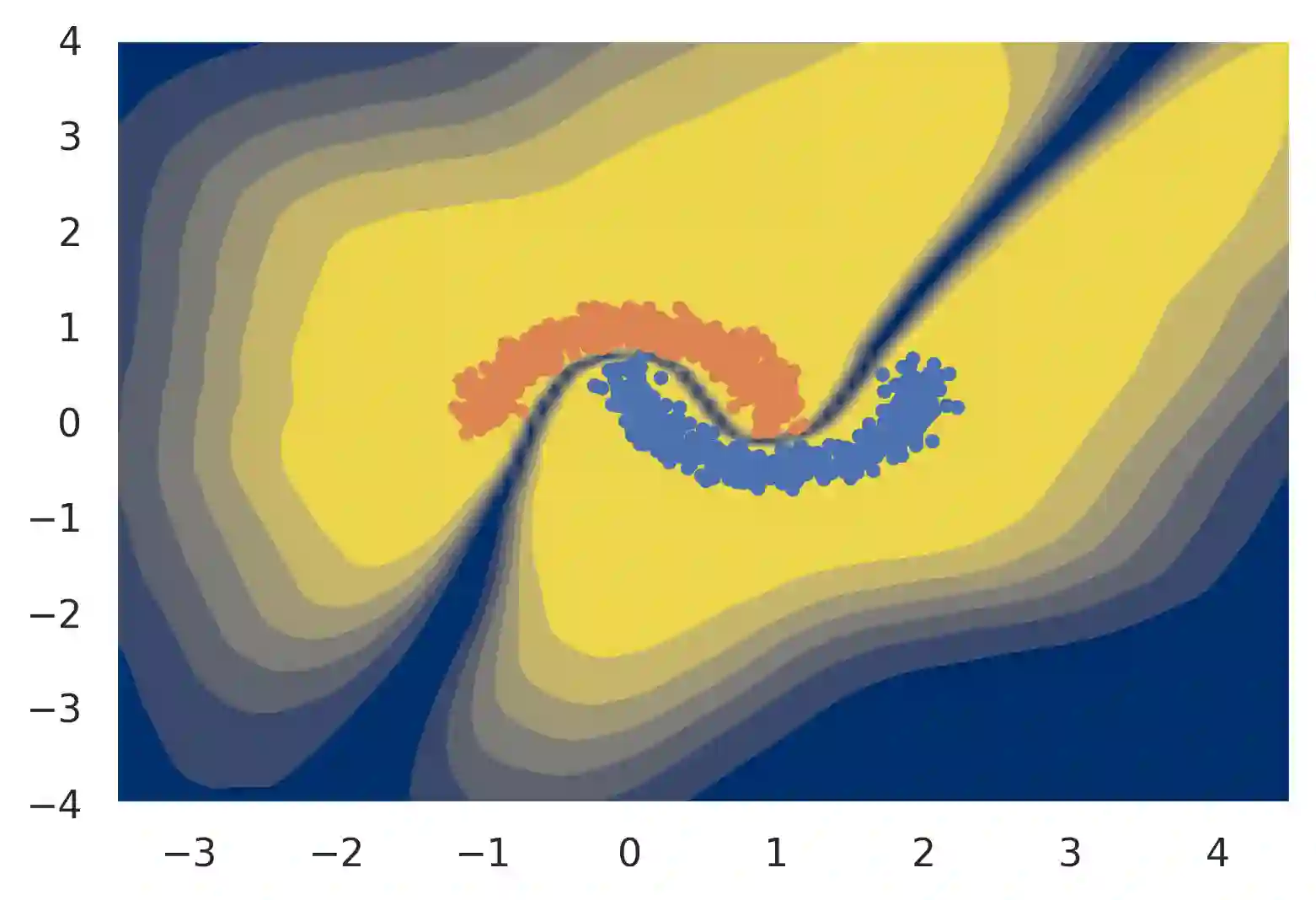

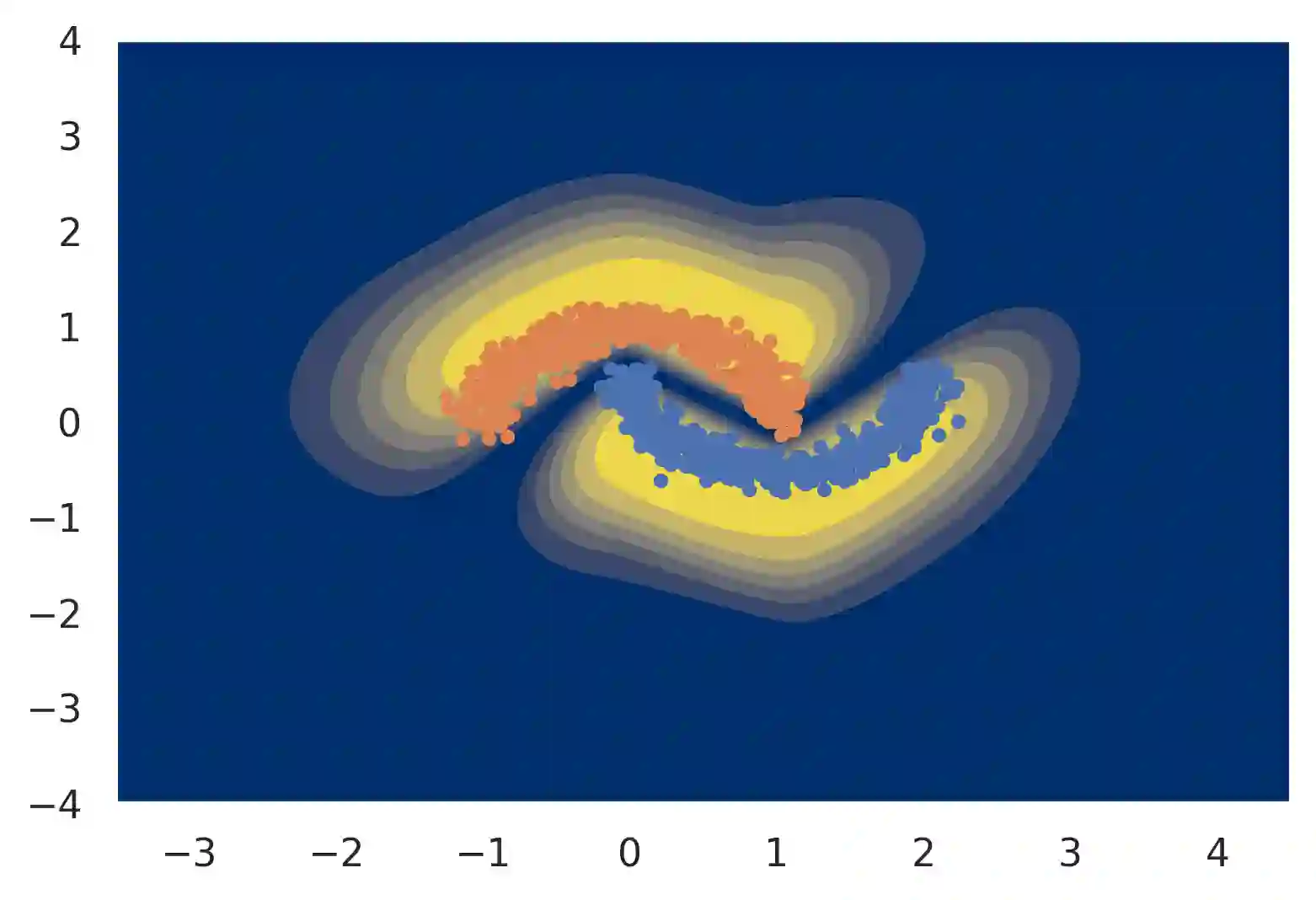

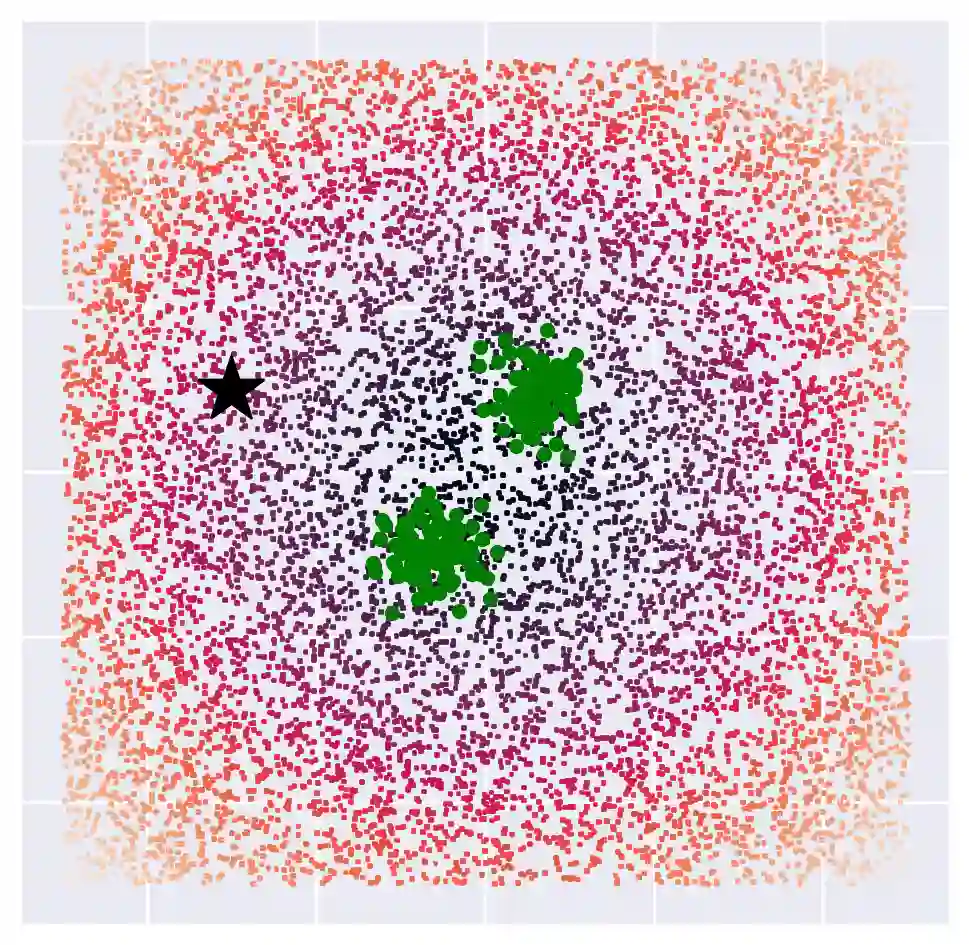

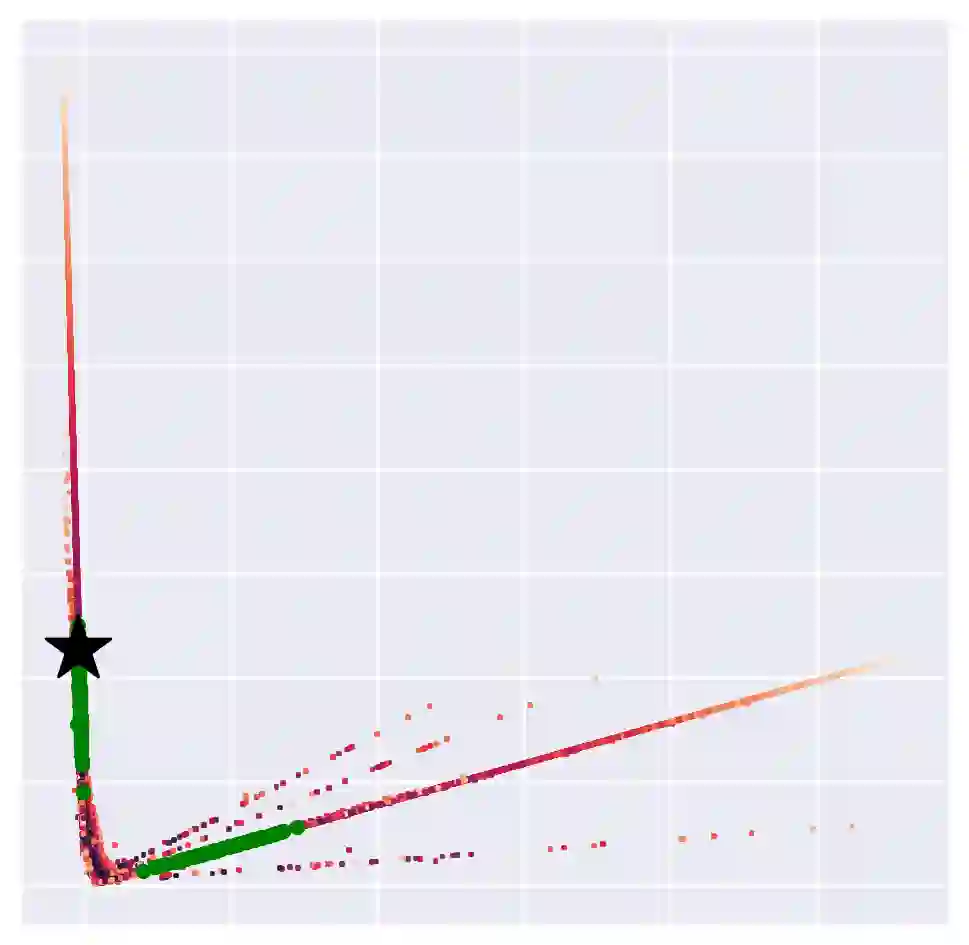

Gaussian processes are often considered a gold standard in uncertainty estimation with low-dimensional data, but they have difficulty scaling to high-dimensional inputs. Deep Kernel Learning (DKL) was introduced as a solution to this problem: a deep feature extractor is used to transform the inputs over which a Gaussian process's kernel is defined. However, DKL has been shown to provide unreliable uncertainty estimates in practice. We study why, and show that for certain feature extractors, "far-away" data points are mapped to the same features as those of training-set points. With this insight, we propose to constrain DKL's feature extractor to approximately preserve distances through a bi-Lipschitz constraint, resulting in a feature space favorable to DKL. We obtain a model, DUE, which demonstrates uncertainty quality outperforming previous DKL and single-forward-pass uncertainty methods, while maintaining the speed and accuracy of softmax neural networks.
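To make the bi-Lipschitz idea concrete, the following is a minimal NumPy sketch, not the authors' implementation. It assumes one possible way of enforcing the constraint, which the abstract does not specify: residual layers whose weight matrices are rescaled to a spectral norm below 1. All names (`spectral_normalize`, `residual_features`, `rbf_kernel`, `coeff`) and the random, untrained weights are hypothetical and only serve the illustration. With each residual branch `coeff`-Lipschitz, every layer satisfies (1 - coeff)·‖x - y‖ ≤ ‖f(x) - f(y)‖ ≤ (1 + coeff)·‖x - y‖, so a far-away input cannot be mapped onto the features of a training point.

```python
import numpy as np


def spectral_normalize(W, coeff=0.9):
    """Rescale W so that its largest singular value is at most coeff (< 1)."""
    sigma = np.linalg.norm(W, 2)  # ord=2 on a matrix: largest singular value
    return W * min(1.0, coeff / sigma)


def residual_features(X, weights):
    """Apply layers h -> h + tanh(h @ W) to the rows of X.

    tanh is 1-Lipschitz, so each residual branch is coeff-Lipschitz when
    the spectral norm of W is at most coeff.
    """
    H = X
    for W in weights:
        H = H + np.tanh(H @ W)
    return H


def rbf_kernel(A, B, lengthscale=1.0):
    """RBF kernel evaluated on (already extracted) feature vectors."""
    sq = np.sum(A**2, axis=1)[:, None] + np.sum(B**2, axis=1)[None, :] - 2.0 * A @ B.T
    return np.exp(-0.5 * np.maximum(sq, 0.0) / lengthscale**2)


# Toy setup: random weights stand in for a trained feature extractor.
rng = np.random.default_rng(0)
coeff, depth, dim = 0.9, 3, 4
weights = [spectral_normalize(rng.normal(size=(dim, dim)), coeff) for _ in range(depth)]

X_train = rng.normal(size=(5, dim))
x_far = X_train[:1] + 100.0  # a point far from every training input

F_train = residual_features(X_train, weights)
f_far = residual_features(x_far, weights)

# Ratio of feature-space distance to input-space distance.
d_in = np.linalg.norm(x_far - X_train, axis=1)
d_feat = np.linalg.norm(f_far - F_train, axis=1)
ratio = d_feat / d_in

# Bounds implied by composing `depth` bi-Lipschitz layers.
lower, upper = (1.0 - coeff) ** depth, (1.0 + coeff) ** depth

# The GP kernel is then defined over features instead of raw inputs.
K = rbf_kernel(F_train, F_train)
```

Here `ratio` stays within `[lower, upper]`, which is the distance-preservation property the abstract refers to. An unconstrained extractor carries no such lower bound, so it can collapse `x_far` onto training features and leave the Gaussian process overconfident there.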
