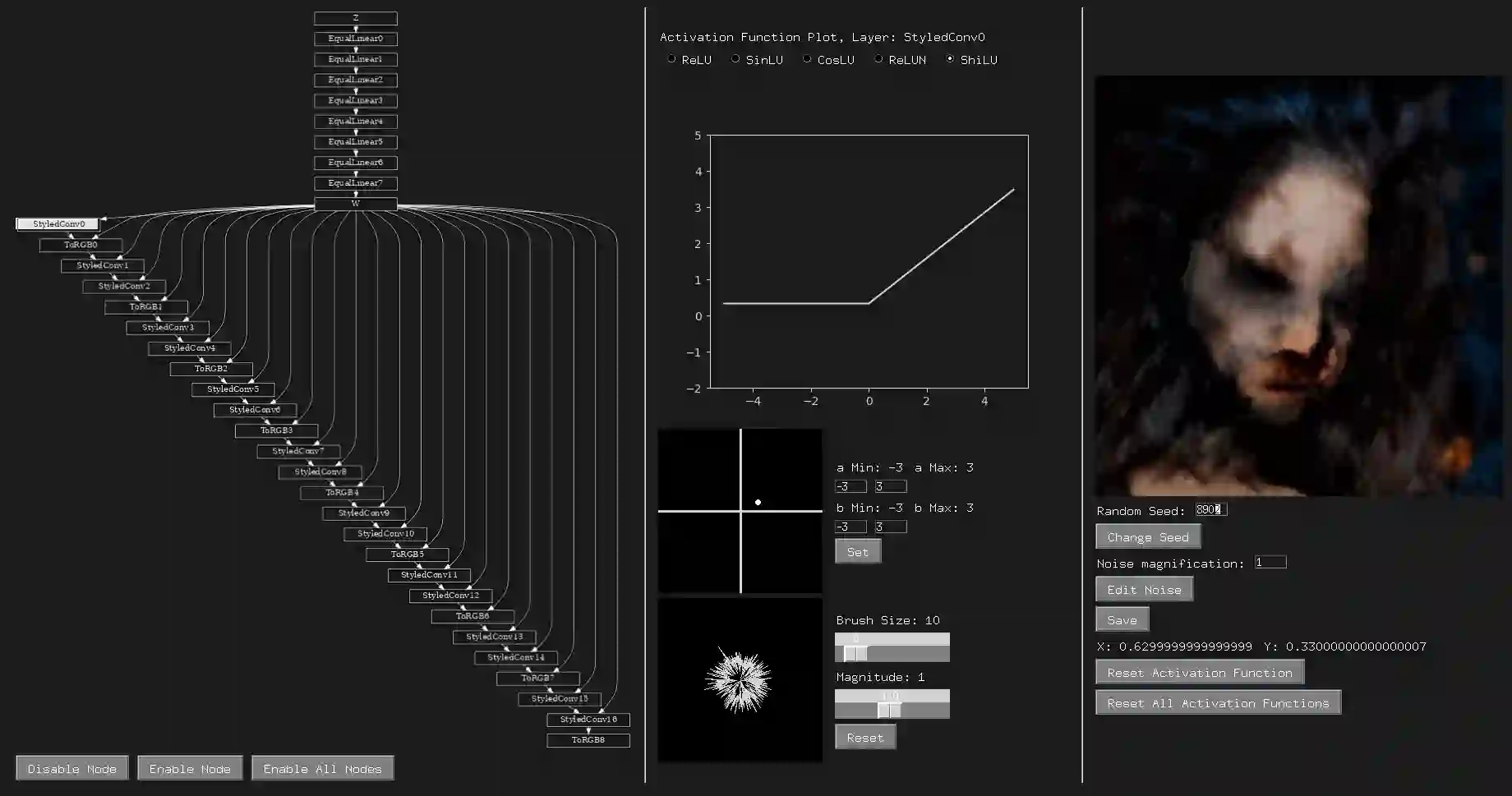

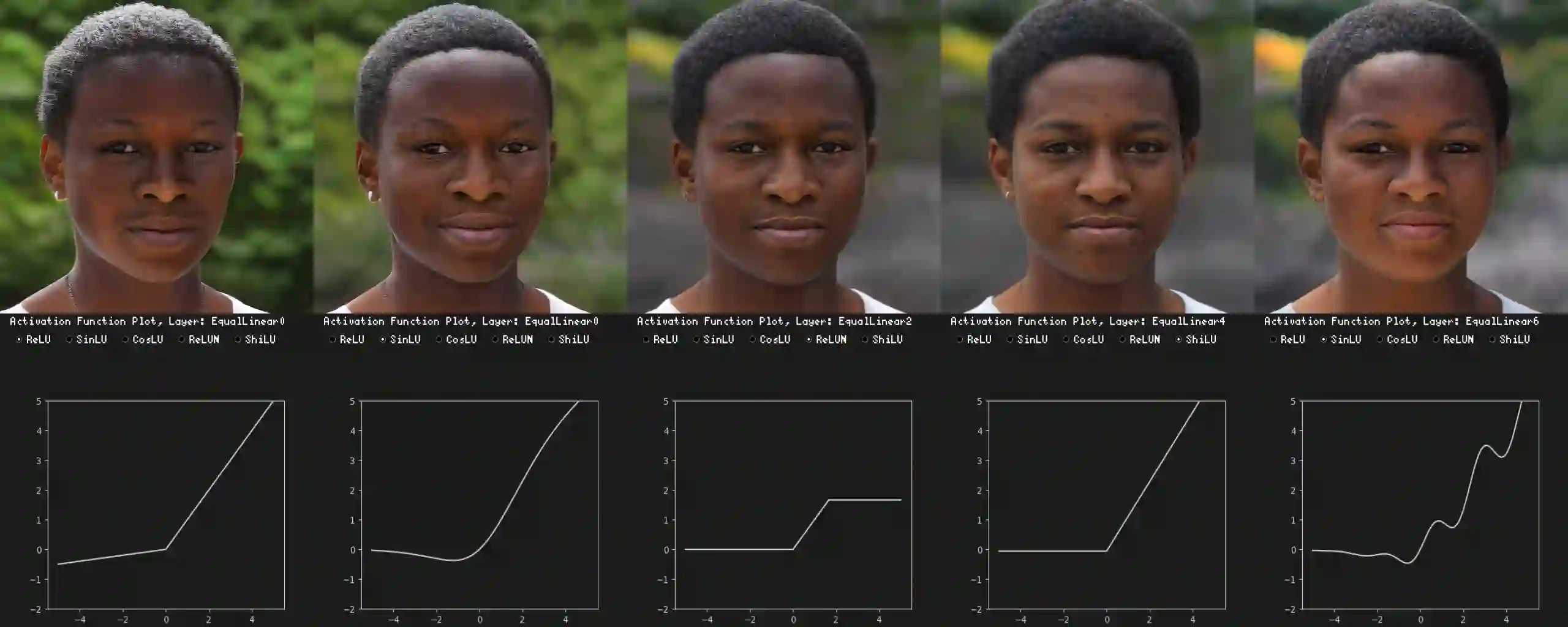

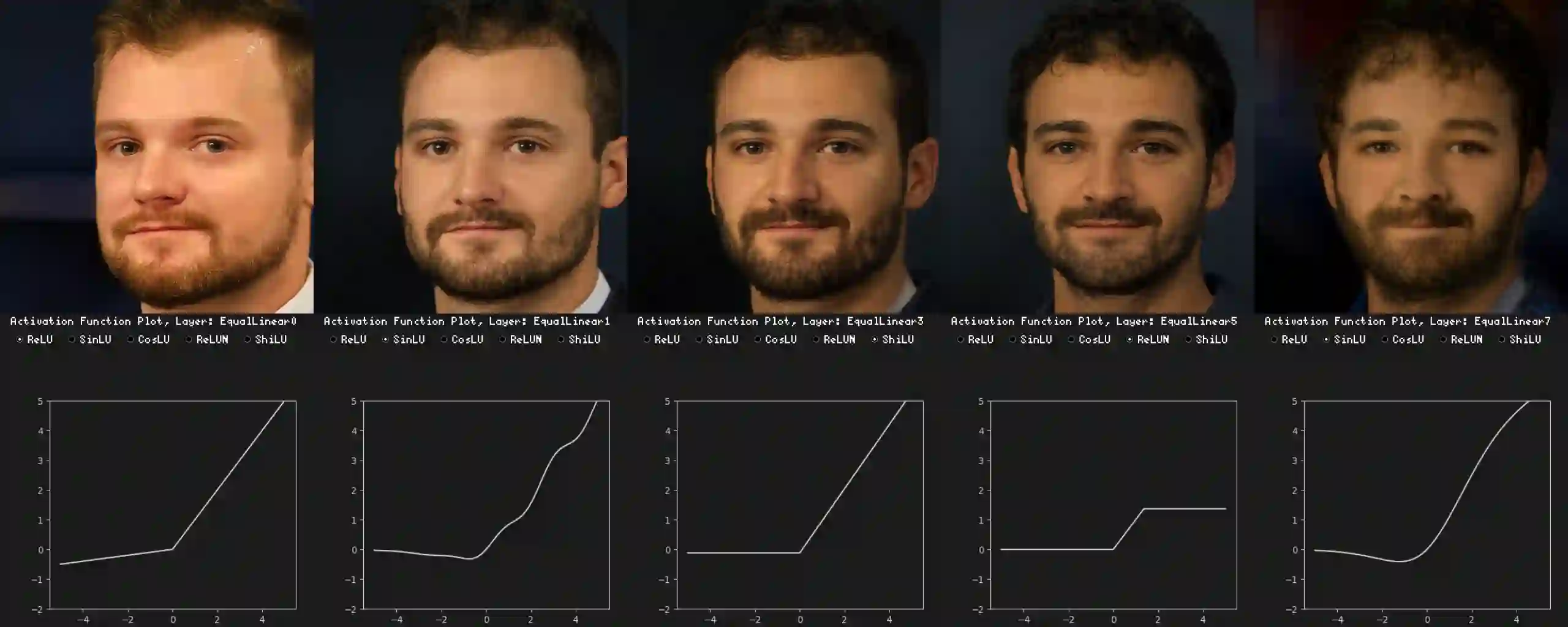

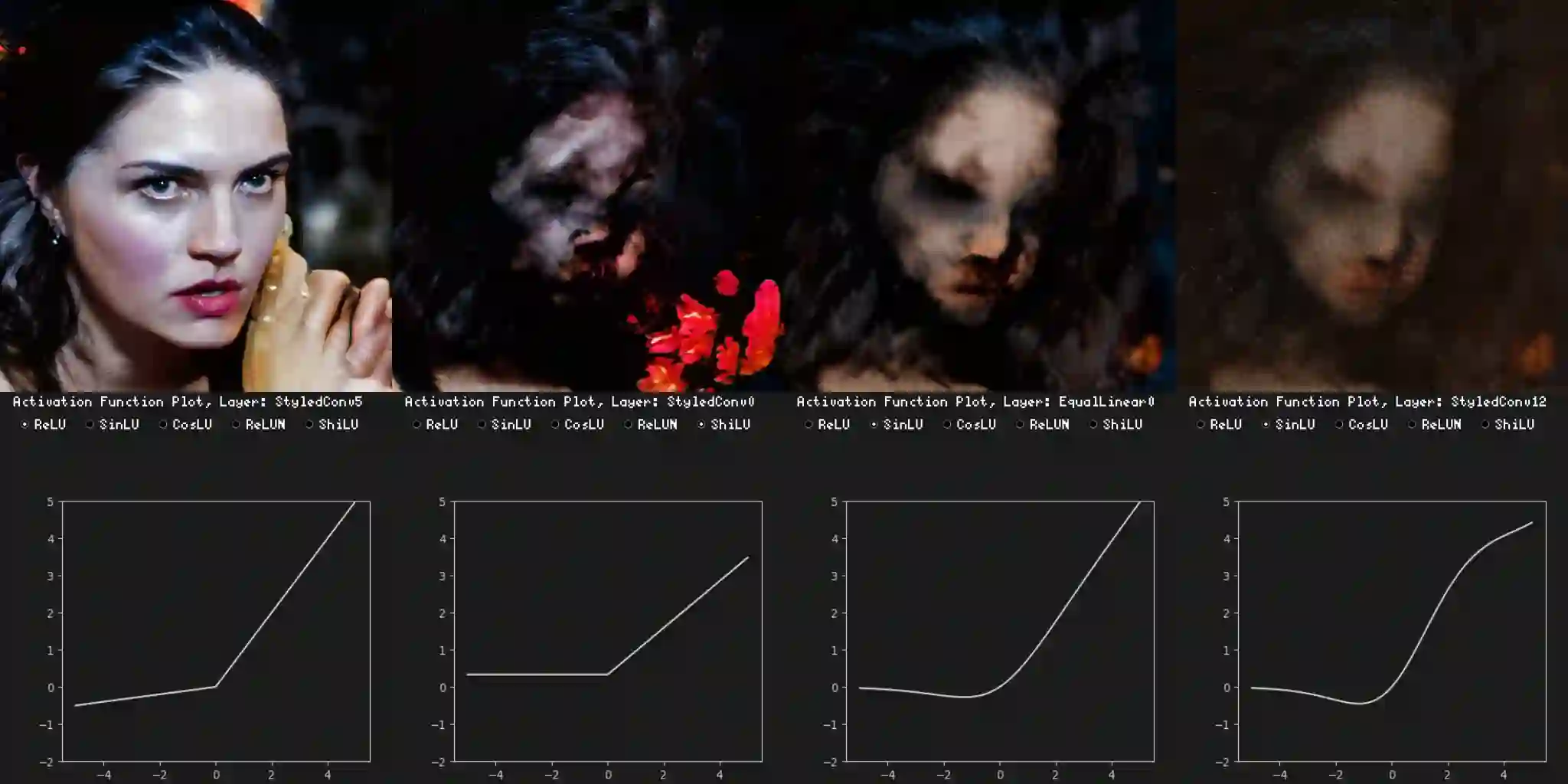

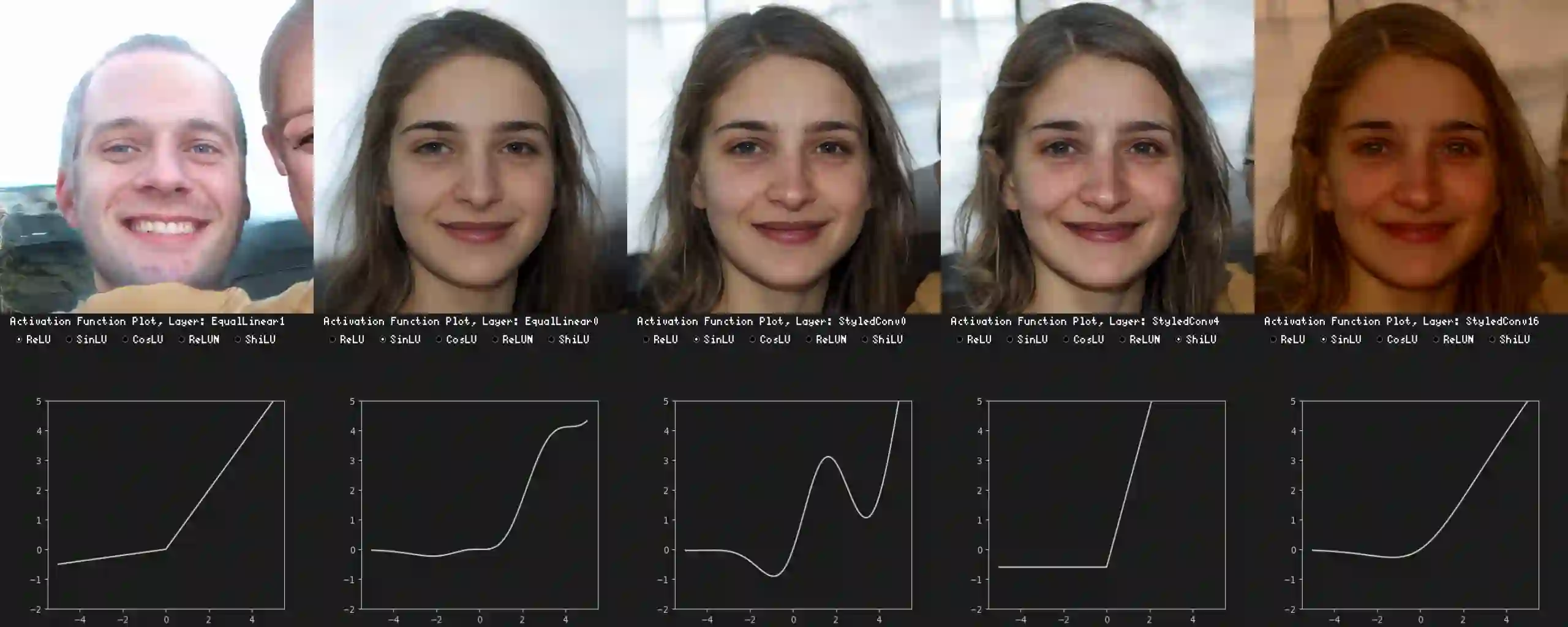

As image generative models continue to increase not only in fidelity but also in ubiquity, the development of tools that leverage direct, interpretable interaction with their internal mechanisms has received little attention. In this work, we introduce a system that allows users to develop a better understanding of the model through interaction and experimentation. By giving users the ability to replace the activation functions of a generative network with parametric ones, together with a way to set the parameters of these functions, we introduce an alternative approach to controlling the network's output. We demonstrate our method on StyleGAN2 and BigGAN networks trained on FFHQ and ImageNet, respectively.
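
The abstract does not say which parametric family the system uses, so the following is only a minimal sketch of the mechanism under stated assumptions. It replaces StyleGAN2 and BigGAN with a hypothetical toy fully connected `TinyGenerator` and uses a hypothetical two-parameter piecewise-linear activation (`make_parametric_activation`, with parameters `neg_slope` and `pos_gain`). The point it illustrates is that each activation is a swappable callable. At its default parameters the replacement reproduces the original leaky ReLU exactly, and changing those parameters changes the output for the same latent input without touching any trained weights.

```python
import math
import random
from typing import Callable, List

Activation = Callable[[float], float]


def leaky_relu(x: float, slope: float = 0.2) -> float:
    """Fixed activation used by the toy network before any edits."""
    return x if x >= 0 else slope * x


def make_parametric_activation(neg_slope: float, pos_gain: float) -> Activation:
    """Hypothetical two-parameter family: pos_gain * x for x >= 0, neg_slope * x otherwise.

    With neg_slope=0.2 and pos_gain=1.0 it reproduces leaky_relu exactly.
    """
    def act(x: float) -> float:
        return pos_gain * x if x >= 0 else neg_slope * x
    return act


class TinyGenerator:
    """Toy stand-in for a generator: dense layers, each hidden layer followed
    by a swappable activation function (the output layer stays linear)."""

    def __init__(self, sizes: List[int], seed: int = 0) -> None:
        rng = random.Random(seed)
        # weights[k][i][j]: weight from unit j of layer k to unit i of layer k + 1.
        self.weights = [
            [[rng.gauss(0.0, 1.0 / math.sqrt(n_in)) for _ in range(n_in)]
             for _ in range(n_out)]
            for n_in, n_out in zip(sizes[:-1], sizes[1:])
        ]
        # One activation slot per hidden layer, all leaky ReLU initially.
        self.activations: List[Activation] = [leaky_relu] * (len(self.weights) - 1)

    def replace_activation(self, layer: int, act: Activation) -> None:
        """Swap the activation after hidden layer `layer`; weights are untouched."""
        self.activations[layer] = act

    def __call__(self, z: List[float]) -> List[float]:
        h = z
        for k, layer in enumerate(self.weights):
            h = [sum(w * v for w, v in zip(row, h)) for row in layer]
            if k < len(self.activations):
                h = [self.activations[k](v) for v in h]
        return h


if __name__ == "__main__":
    gen = TinyGenerator([4, 8, 8, 3], seed=0)
    z = [0.5, -1.0, 0.3, 0.8]
    baseline = gen(z)

    # Default parameters: the output is identical to the unedited network.
    gen.replace_activation(1, make_parametric_activation(neg_slope=0.2, pos_gain=1.0))
    print(gen(z) == baseline)  # True

    # New parameters: same weights, same latent z, different output.
    gen.replace_activation(1, make_parametric_activation(neg_slope=-0.5, pos_gain=1.5))
    print(gen(z))
```

Choosing a family that contains the original activation as a special case means an edit can start from the unmodified network and move away from it gradually, one parameter at a time.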
