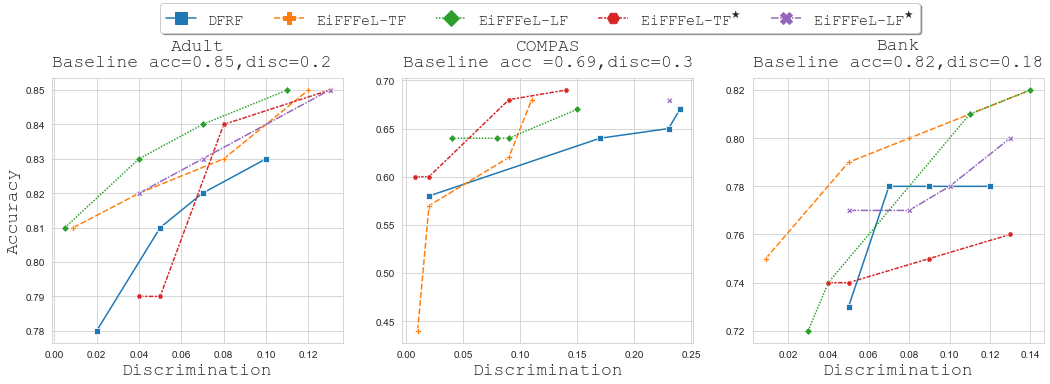

Machine Learning (ML) techniques are now widely adopted in socially sensitive systems, which makes it necessary to study carefully the fairness of the decisions such systems take. Many approaches have been proposed to detect and remove bias against individuals or specific groups, bias that may originate from biased training datasets or from algorithm design. In this regard, we propose a fairness-enforcing approach called EiFFFeL: Enforcing Fairness in Forests by Flipping Leaves, which exploits tree-based or leaf-based post-processing strategies to relabel the leaves of selected decision trees of a given forest. Experimental results show that our approach achieves a user-defined degree of group fairness without losing a significant amount of accuracy.
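To make the idea of relabeling leaves concrete, the following is a minimal sketch and not the EiFFFeL algorithm itself. It assumes a single tree flattened into a list of leaves, a binary sensitive attribute (1 for the privileged group, 0 for the unprivileged group), and group discrimination measured as the difference in positive-prediction rates between the two groups. The names `discrimination`, `flip_leaves`, `toy_leaves`, and `epsilon` are hypothetical, and the greedy selection rule is an illustrative assumption that ignores the accuracy cost of each flip.

```python
def discrimination(leaves):
    """Positive-prediction rate of group 1 minus that of group 0.

    Assumes both groups have at least one sample across the leaves.
    """
    pos = {0: 0, 1: 0}
    tot = {0: 0, 1: 0}
    for leaf in leaves:
        for s in leaf["groups"]:
            tot[s] += 1
            pos[s] += leaf["label"]  # every sample in a leaf gets the leaf label
    return pos[1] / tot[1] - pos[0] / tot[0]


def flip_leaves(leaves, epsilon):
    """Greedily flip leaf labels until |discrimination| <= epsilon.

    Illustrative assumption: at each step, flip the leaf that lowers
    |discrimination| the most; stop when no single flip helps.
    """
    leaves = [dict(leaf) for leaf in leaves]  # do not mutate the caller's leaves
    while abs(discrimination(leaves)) > epsilon:
        best_i, best = None, abs(discrimination(leaves))
        for i, leaf in enumerate(leaves):
            leaf["label"] ^= 1  # try the flip
            d = abs(discrimination(leaves))
            leaf["label"] ^= 1  # undo it
            if d < best:
                best_i, best = i, d
        if best_i is None:
            break  # no flip reduces discrimination any further
        leaves[best_i]["label"] ^= 1
    return leaves


# Toy data: each leaf has a predicted label and the sensitive-attribute
# value of every training sample that falls into it.
toy_leaves = [
    {"label": 1, "groups": [1, 1, 1, 0]},
    {"label": 0, "groups": [0, 0, 0, 1]},
    {"label": 1, "groups": [1, 0]},
    {"label": 0, "groups": [0, 1]},
]
fair_leaves = flip_leaves(toy_leaves, epsilon=0.1)
print(discrimination(toy_leaves), discrimination(fair_leaves))
```

Here `epsilon` plays the role of the user-defined group fairness degree. A practical post-processing method would also weigh the accuracy lost by each flip, which this sketch deliberately leaves out.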
