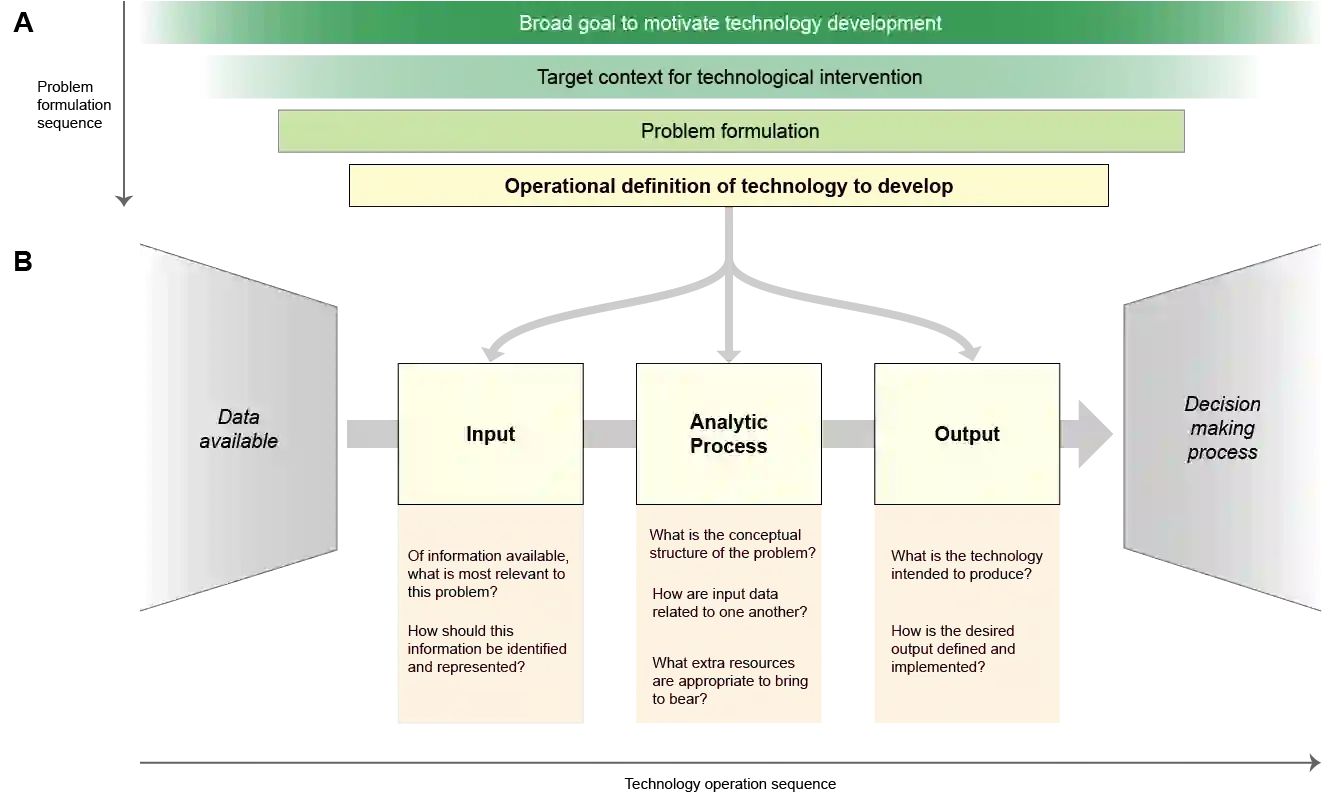

Disabled people are subject to a wide variety of complex decision-making processes in diverse areas such as healthcare, employment, and government policy. These contexts are often already opaque to the people they affect and lack adequate representation of disabled perspectives. They are now rapidly adopting artificial intelligence (AI) technologies for data analytics to inform decision making, which increases the risk of harm from inappropriate or inequitable algorithms. This article presents a framework for critically examining AI data analytics technologies through a disability lens. It also investigates how the definition of disability chosen by the designers of an AI technology affects the technology's impact on the disabled people it analyses. We consider three conceptual models of disability (the medical model, the social model, and the relational model) and show that AI technologies designed under each model differ so significantly that they are incompatible with, and contradictory to, one another. Through a discussion of common use cases for AI analytics in healthcare and government disability benefits, we illustrate specific considerations and decision points in the technology design process that affect power dynamics and inclusion in these settings. These decision points help determine whether such technologies are oriented towards marginalisation or support. The framework we present can serve as a foundation for in-depth critical examination of AI technologies and for the development of a design praxis for disability-related AI analytics.
