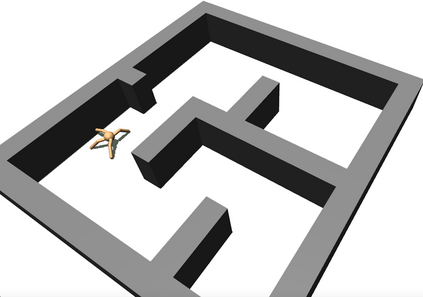

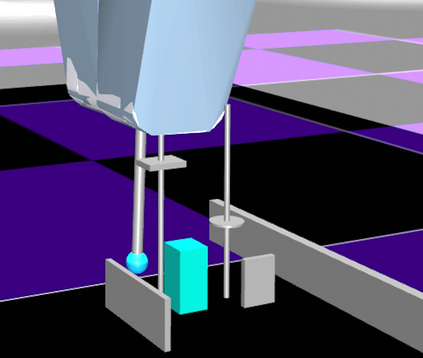

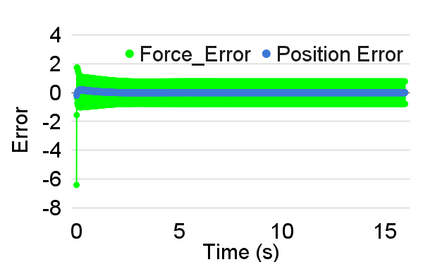

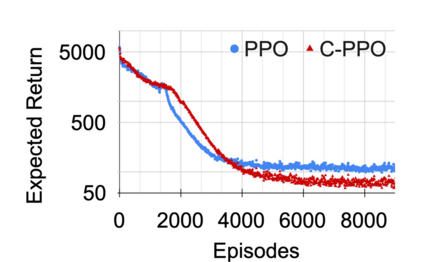

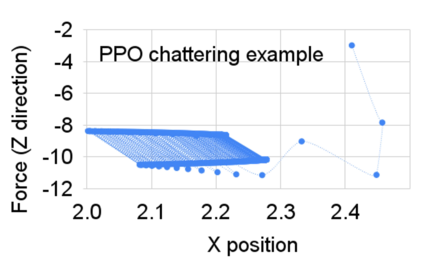

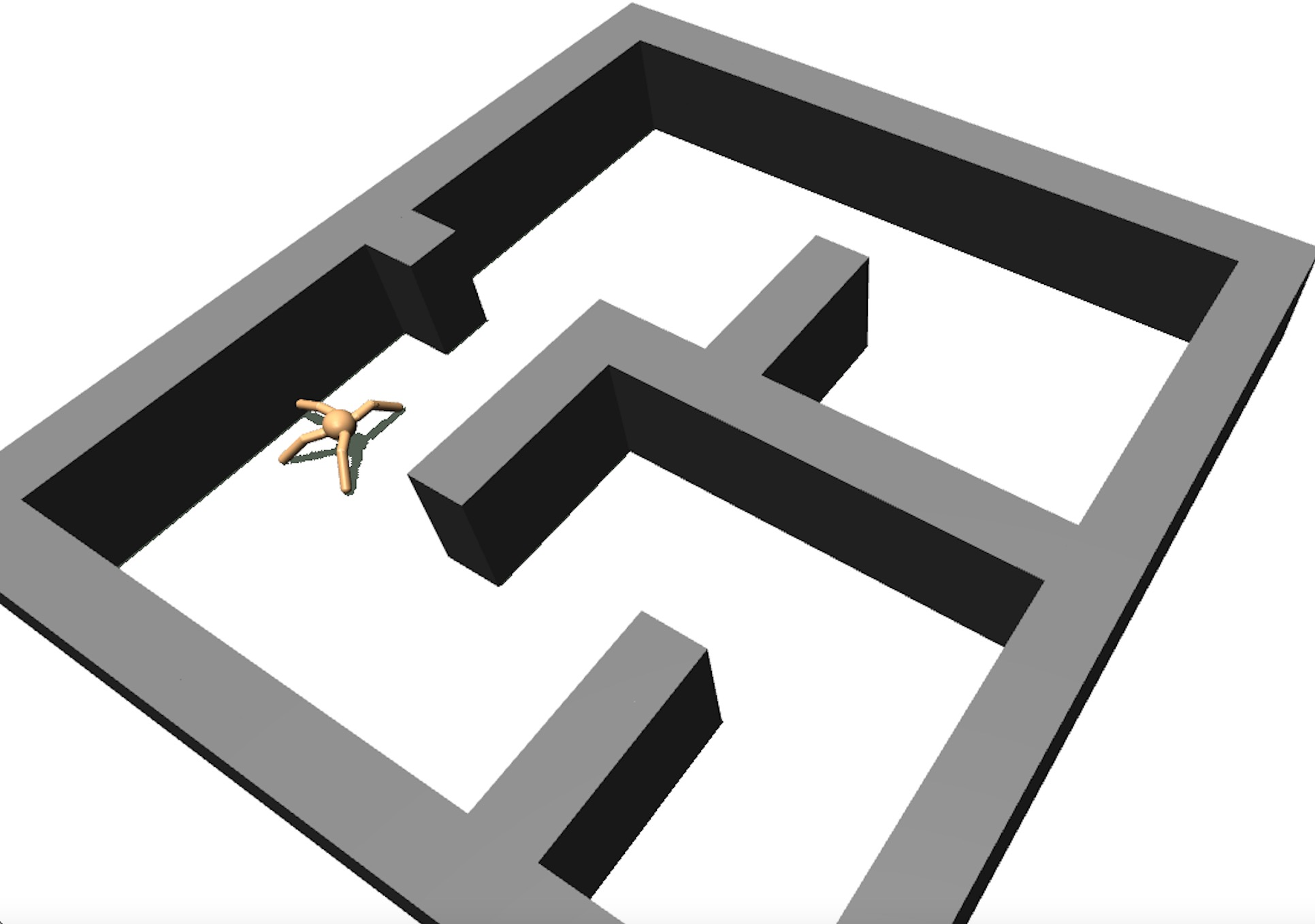

Lack of stability guarantees strongly limits the use of reinforcement learning (RL) in safety critical robotic applications. Here we propose a control system architecture for continuous RL control and derive corresponding stability theorems via contraction analysis, yielding constraints on the network weights to ensure stability. The control architecture can be implemented in general RL algorithms and improve their stability, robustness, and sample efficiency. We demonstrate the importance and benefits of such guarantees for RL on two standard examples, PPO learning of a 2D problem and HIRO learning of maze tasks.

翻译:缺乏稳定性保证了在安全关键机器人应用中使用强化学习(RL)的严格限制。在这里,我们提出一个控制系统架构,用于持续RL控制,并通过收缩分析得出相应的稳定性定理,对网络权重施加限制,以确保稳定性。控制架构可以在一般RL算法中实施,并提高其稳定性、稳健性和样本效率。我们通过两个标准例子,即PPPO学习2D问题和HIRO学习迷宫任务,来证明这种保障对RL的重要性和好处。

相关内容

Source: Apple - iOS 8