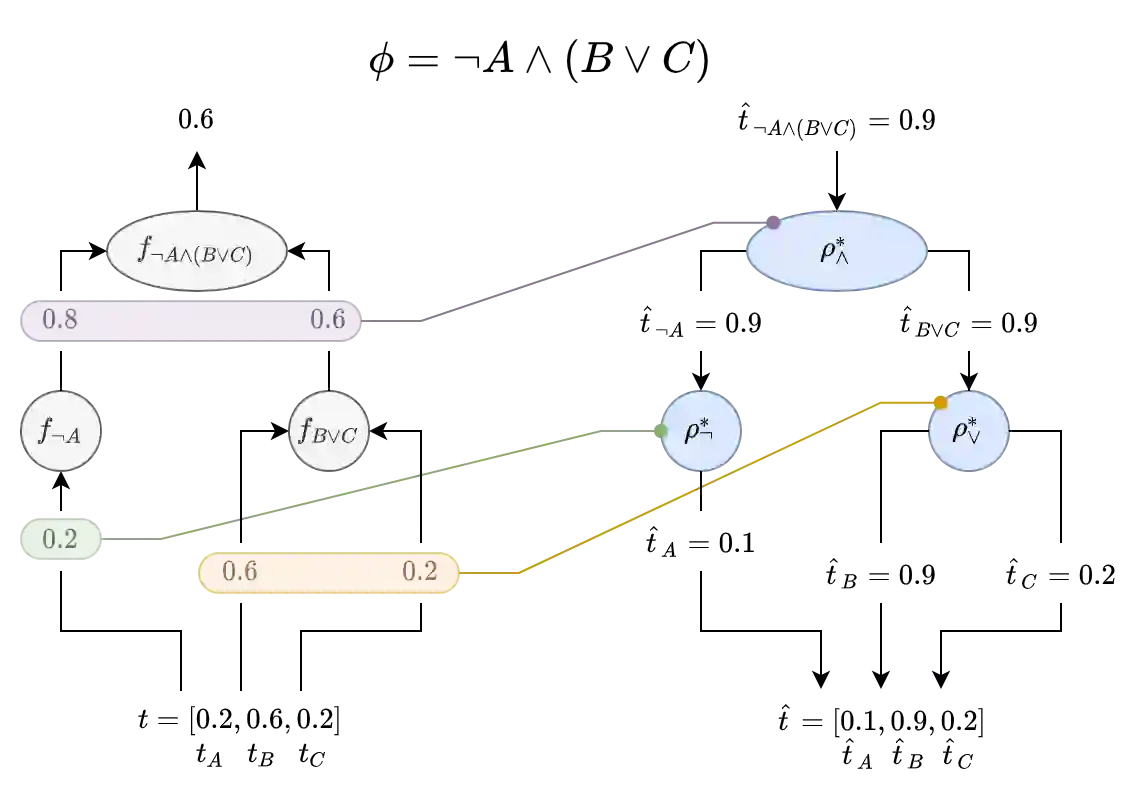

Recent work has shown that logical background knowledge can be used in learning systems to compensate for a lack of labeled training data. Many such methods work by creating a loss function that encodes this knowledge. However, the logic is often discarded after training, even when it is still useful at test time. Instead, we ensure that neural network predictions satisfy the knowledge by refining the predictions with an extra computation step. We introduce differentiable refinement functions that find a corrected prediction close to the original prediction. We study how to compute these refinement functions effectively and efficiently. Using a new algorithm, we combine refinement functions to find refined predictions for logical formulas of any complexity. This algorithm finds optimal refinements on complex SAT formulas in significantly fewer iterations than gradient descent and frequently finds solutions where gradient descent cannot.
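The refinement idea can be illustrated on its simplest case. The following is a minimal plain-Python sketch, assuming Gödel fuzzy semantics (max for disjunction, min for conjunction, 1 - x for negation) and a formula given in CNF. The names `refine_clause` and `refine_cnf`, the literal encoding, and the naive clause-by-clause loop are hypothetical illustrations, not the authors' algorithm. It shows a refinement function that returns the nearest assignment whose clause truth value reaches a target, and one simple way to chain such refinements over a whole formula.

```python
from typing import Dict, List, Tuple

# Hypothetical encoding: a literal is (variable name, is_positive); a clause is a
# disjunction of literals; a CNF formula is a list of clauses.
Literal = Tuple[str, bool]
Clause = List[Literal]


def literal_value(t: Dict[str, float], lit: Literal) -> float:
    """Fuzzy truth value of a literal: t[v] for v, 1 - t[v] for not v."""
    var, positive = lit
    return t[var] if positive else 1.0 - t[var]


def clause_value(t: Dict[str, float], clause: Clause) -> float:
    """Goedel t-conorm: a disjunction is as true as its truest literal."""
    return max(literal_value(t, lit) for lit in clause)


def refine_clause(t: Dict[str, float], clause: Clause,
                  target: float = 1.0) -> Dict[str, float]:
    """Closest assignment (in any L_p norm) whose clause value is >= target.

    Under max, only one literal needs to reach the target, and the cheapest
    one to move is the literal that is already the truest.
    """
    values = [literal_value(t, lit) for lit in clause]
    if max(values) >= target:
        return t  # already satisfied: leave the prediction untouched
    var, positive = clause[values.index(max(values))]
    refined = dict(t)  # copy so the caller's predictions are not mutated
    refined[var] = target if positive else 1.0 - target
    return refined


def refine_cnf(t: Dict[str, float], cnf: List[Clause], target: float = 1.0,
               max_iters: int = 100) -> Tuple[Dict[str, float], bool]:
    """Naive combination: refine clauses one after another, repeating until
    every clause reaches target (Goedel min over clauses) or max_iters passes
    have run. Returns the refined assignment and whether it satisfies cnf."""
    for _ in range(max_iters):
        if all(clause_value(t, c) >= target for c in cnf):
            return t, True
        for clause in cnf:
            t = refine_clause(t, clause, target)
    return t, all(clause_value(t, c) >= target for c in cnf)


preds = {"a": 0.3, "b": 0.6, "c": 0.2}
formula = [[("a", True), ("b", True)], [("a", False), ("c", True)]]  # (a or b) and (not a or c)
print(refine_cnf(preds, formula))  # ({'a': 0.0, 'b': 1.0, 'c': 0.2}, True)
```

This naive loop is not guaranteed to converge: on an unsatisfiable formula such as (x) and (not x) it flips x back and forth until `max_iters` runs out and reports `False`. The sketch uses plain floats with no automatic differentiation; each refinement step is piecewise, so an autodiff version would be differentiable almost everywhere.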
