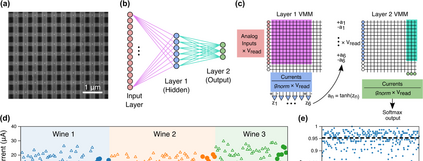

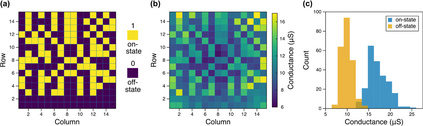

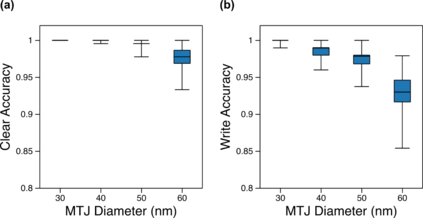

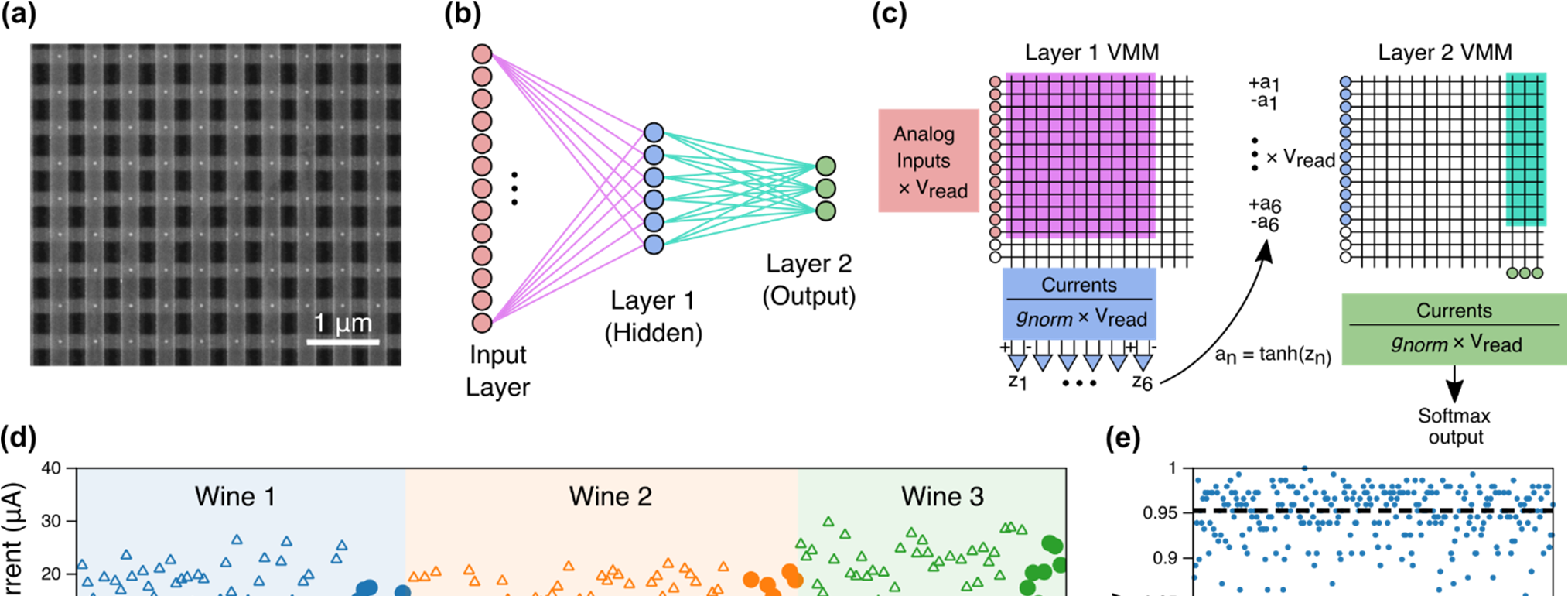

The increasing scale of neural networks and their growing application space have produced demand for more energy- and memory-efficient hardware specialized for artificial intelligence. Avenues to mitigate the main issue, the von Neumann bottleneck, include in-memory and near-memory architectures, as well as algorithmic approaches. Here we leverage the low power and inherently binary operation of magnetic tunnel junctions (MTJs) to demonstrate neural network hardware inference based on passive arrays of MTJs. In general, transferring a trained network model to hardware for inference suffers from performance degradation due to device-to-device variations, write errors, parasitic resistance, and nonidealities in the substrate. To quantify the effect of these hardware realities, we benchmark 300 unique weight matrix solutions of a two-layer perceptron that classifies the Wine dataset, evaluating both classification accuracy and write fidelity. Despite device imperfections, we achieve software-equivalent accuracy of up to 95.3 % with proper tuning of network parameters in 15 × 15 MTJ arrays spanning a range of device sizes. The success of this tuning process shows that new metrics are needed to characterize the performance and quality of networks reproduced in mixed-signal hardware.
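As a rough illustration of the nonidealities named above, the following sketch maps binary weights onto a simulated 15 × 15 array, injects write errors and device-to-device variation, and reports a write fidelity alongside the column currents. It is not the authors' method: the function names (`program_array`, `crossbar_mvm`), the conductance values, the variation level, the write-error rate, and the one-device-per-weight mapping are all assumptions chosen for illustration, and parasitic resistance is ignored.

```python
import numpy as np


def program_array(weights, g_p=1e-4, g_ap=5e-5, sigma=0.05,
                  p_write_err=0.01, rng=None):
    """Map binary weights (+1/-1) to MTJ conductances (hypothetical model).

    g_p, g_ap   : assumed parallel / antiparallel conductances in siemens
    sigma       : assumed relative device-to-device spread (Gaussian)
    p_write_err : assumed probability that a device ends in the wrong state
    """
    rng = np.random.default_rng() if rng is None else rng
    intended = weights > 0                        # True -> parallel state
    flips = rng.random(weights.shape) < p_write_err
    written = intended ^ flips                    # state actually stored
    nominal = np.where(written, g_p, g_ap)
    # Multiplicative Gaussian spread stands in for device-to-device variation.
    g = nominal * (1.0 + sigma * rng.standard_normal(weights.shape))
    # Write fidelity here: fraction of devices in their intended state.
    fidelity = float(np.mean(written == intended))
    return g, fidelity


def crossbar_mvm(voltages, g):
    """Ideal column currents I_j = sum_i V_i * G_ij (no parasitics)."""
    return voltages @ g


# Example: one random 15 x 15 binary weight matrix and one input vector.
rng = np.random.default_rng(0)
weights = rng.choice([-1, 1], size=(15, 15))
g, fidelity = program_array(weights, rng=rng)
currents = crossbar_mvm(rng.random(15), g)
print(f"write fidelity: {fidelity:.3f}")
print("column currents (A):", currents)
```

Sweeping `sigma` and `p_write_err` over many weight matrices would give a crude software analogue of a benchmark over multiple weight-matrix solutions, though a real array also involves parasitic resistance and substrate effects that this model omits.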
