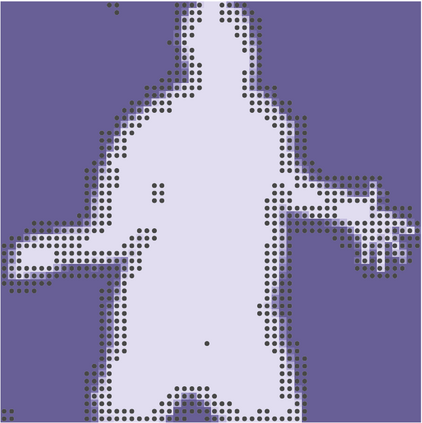

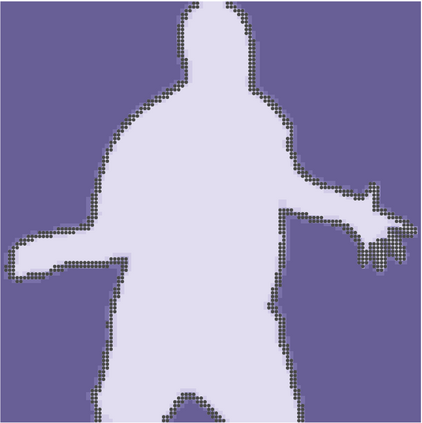

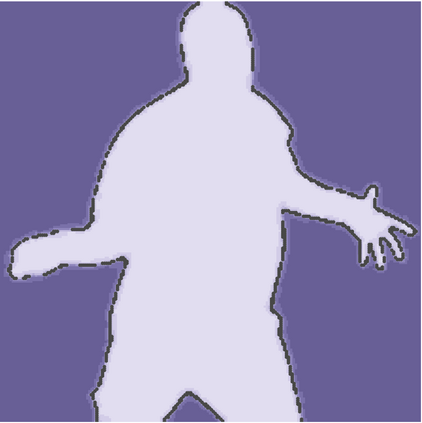

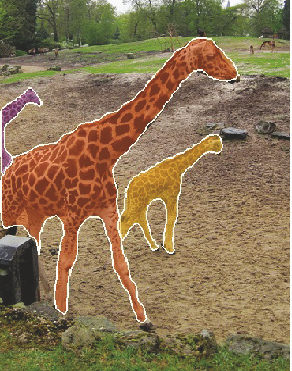

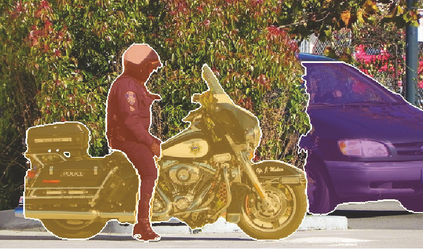

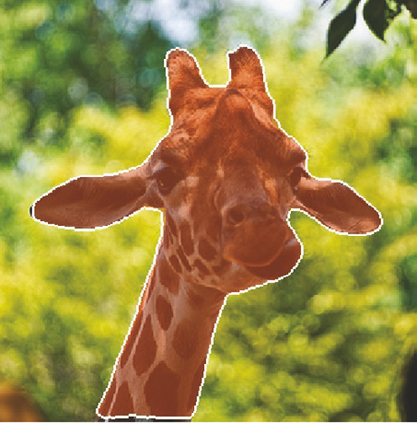

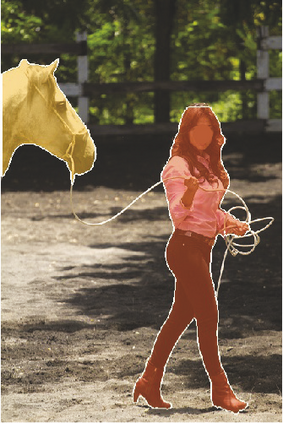

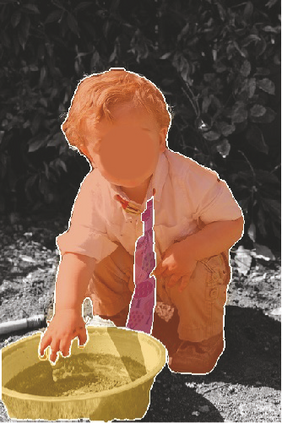

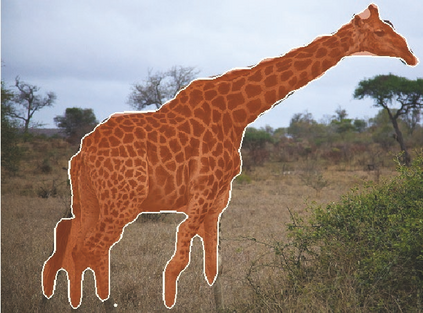

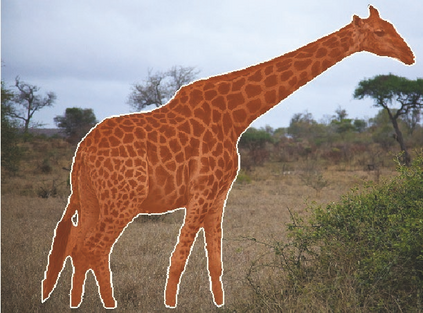

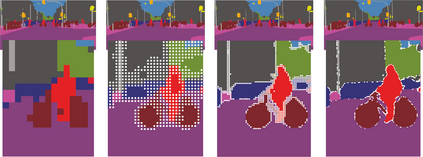

We present a new method for efficient, high-quality image segmentation of objects and scenes. By drawing an analogy between classical computer graphics methods for efficient rendering and the over- and undersampling challenges faced in pixel labeling tasks, we develop a unique perspective of image segmentation as a rendering problem. From this vantage, we present the PointRend (Point-based Rendering) neural network module: a module that performs point-based segmentation predictions at adaptively selected locations based on an iterative subdivision algorithm. PointRend can be flexibly applied to both instance and semantic segmentation tasks by building on top of existing state-of-the-art models. While many concrete implementations of the general idea are possible, we show that a simple design already achieves excellent results. Qualitatively, PointRend outputs crisp object boundaries in regions that are over-smoothed by previous methods. Quantitatively, PointRend yields significant gains on COCO and Cityscapes, for both instance and semantic segmentation. PointRend's efficiency enables output resolutions that would otherwise be impractical, in terms of memory or computation, with existing approaches.
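The iterative subdivision idea can be illustrated with a small, dependency-free sketch. This is not the PointRend implementation; it is a toy under stated assumptions. Each step bilinearly upsamples the current probability grid by 2x (assumed), picks the `num_points` cells whose probability is closest to 0.5 (an assumed uncertainty measure), and re-predicts only those cells with a point head. A real point head would be a learned network; here `circle_oracle` is a hypothetical stand-in that returns the ground-truth label of a disk, and all function names are illustrative.

```python
from typing import Callable, List, Tuple

Grid = List[List[float]]


def upsample2x(grid: Grid) -> Grid:
    """Bilinearly upsample a 2-D probability grid by a factor of 2."""
    h, w = len(grid), len(grid[0])
    out: Grid = []
    for i in range(2 * h):
        # Map the output cell center back to input coordinates
        # (half-pixel convention), clamping at the borders.
        y = min(max((i + 0.5) / 2 - 0.5, 0.0), h - 1)
        y0 = int(y)
        y1 = min(y0 + 1, h - 1)
        wy = y - y0
        row = []
        for j in range(2 * w):
            x = min(max((j + 0.5) / 2 - 0.5, 0.0), w - 1)
            x0 = int(x)
            x1 = min(x0 + 1, w - 1)
            wx = x - x0
            top = grid[y0][x0] * (1 - wx) + grid[y0][x1] * wx
            bottom = grid[y1][x0] * (1 - wx) + grid[y1][x1] * wx
            row.append(top * (1 - wy) + bottom * wy)
        out.append(row)
    return out


def most_uncertain(mask: Grid, k: int) -> List[Tuple[int, int]]:
    """Return the k cells whose foreground probability is closest to 0.5."""
    h, w = len(mask), len(mask[0])
    scored = sorted((abs(mask[i][j] - 0.5), i, j) for i in range(h) for j in range(w))
    return [(i, j) for _, i, j in scored[:k]]


def subdivision_inference(
    coarse: Grid,
    steps: int,
    num_points: int,
    point_head: Callable[[float, float], float],
) -> Grid:
    """Upsample `steps` times, re-predicting only the most uncertain cells each time."""
    mask = [row[:] for row in coarse]
    for _ in range(steps):
        mask = upsample2x(mask)
        h, w = len(mask), len(mask[0])
        for i, j in most_uncertain(mask, num_points):
            # Query the point head at the cell center, in normalized [0, 1] coordinates.
            mask[i][j] = point_head((i + 0.5) / h, (j + 0.5) / w)
    return mask


def circle_oracle(y: float, x: float) -> float:
    """Hypothetical stand-in for a learned point head: a disk of radius 0.3."""
    return 1.0 if (y - 0.5) ** 2 + (x - 0.5) ** 2 <= 0.09 else 0.0


# Refine a coarse 4x4 prediction to 32x32 using exactly 3 * 48 = 144 point-head
# calls, instead of 32 * 32 = 1024 dense predictions at the output resolution.
coarse = [[circle_oracle((i + 0.5) / 4, (j + 0.5) / 4) for j in range(4)] for i in range(4)]
mask = subdivision_inference(coarse, steps=3, num_points=48, point_head=circle_oracle)
print(len(mask), len(mask[0]))  # 32 32
```

The point of the design is that cost grows with the number of selected points per step rather than with the full output resolution, while the selected points concentrate along object boundaries, where the upsampled probabilities are ambiguous.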

翻译:我们提出了一个高效高品质对象和场景图像分割的新方法。 通过模拟古典计算机图形图形方法, 以像素标签任务中遇到的过份和过低的抽样挑战来有效转换像素标签任务, 我们开发了一个独特的图像分割观点作为制造问题。 我们从这个模型中展示了PointRend(基于点的导出)神经网络模块: 一个模块, 该模块基于迭接子剖析算法, 在适应性选定的地点进行基于点的分解预测。 PointRend 可以在现有最先进的模型之上,灵活地应用到试度和语义分割任务。 虽然许多具体实施一般想法是可能的,但我们显示简单设计已经取得了极佳的结果。 定性的, 点Rend 输出点在被先前方法过度移动的区域的物体边界上, 定量而言, 点Rend 生成COCO和城景的显著收益, 在实例和语义分割法中, 。 点的效能使得输出分辨率在记忆或计算上与现有方法相比不切实际不切实际。