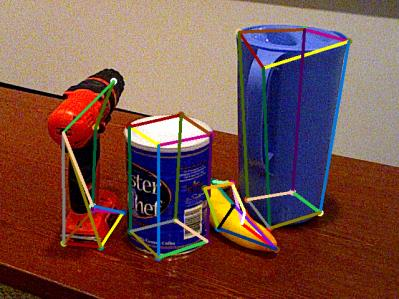

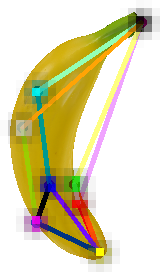

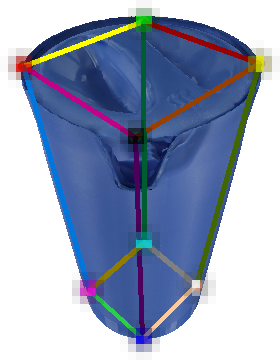

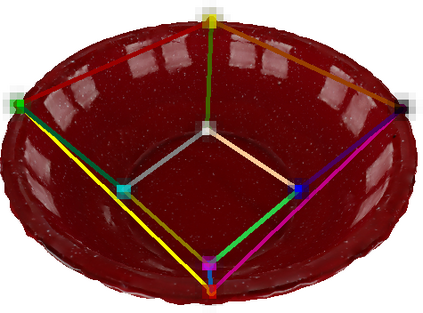

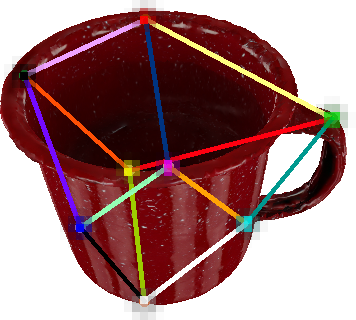

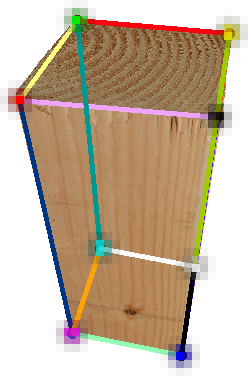

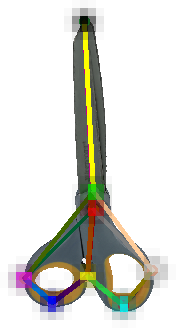

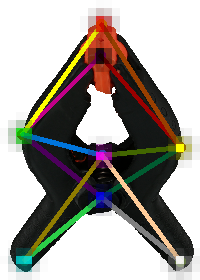

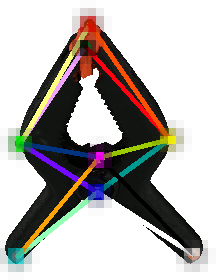

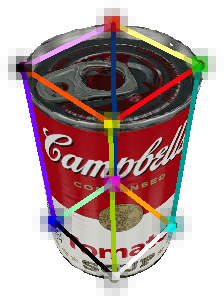

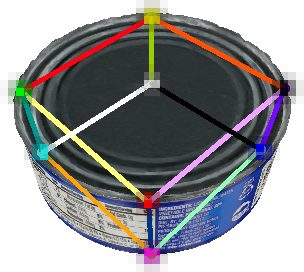

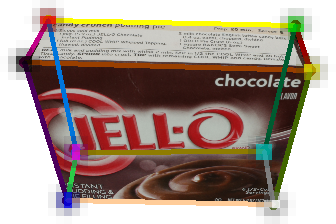

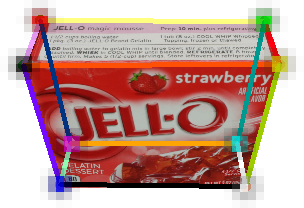

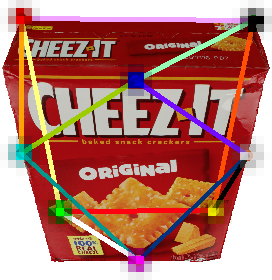

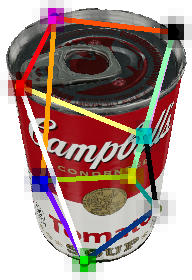

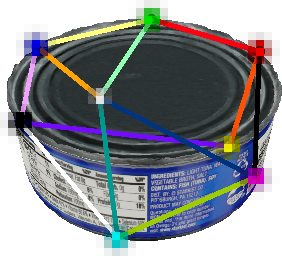

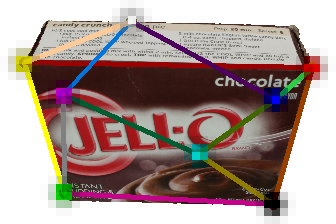

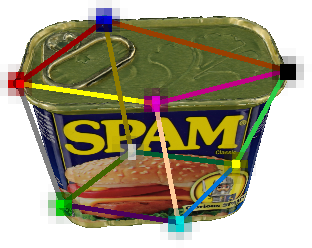

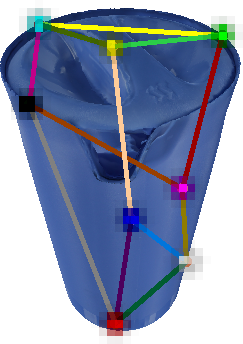

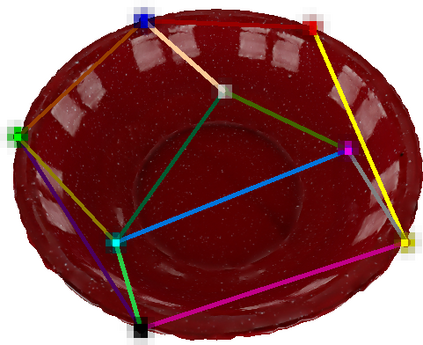

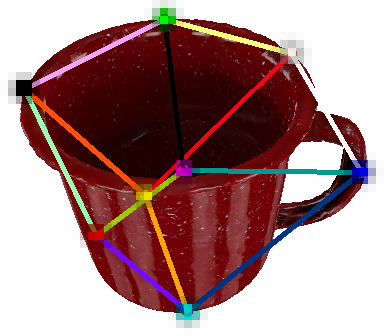

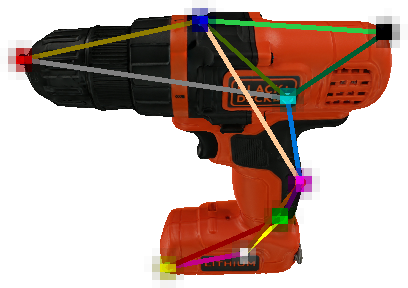

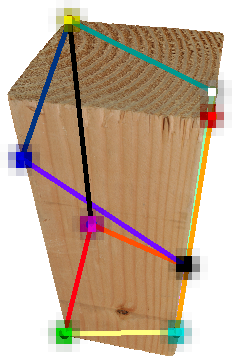

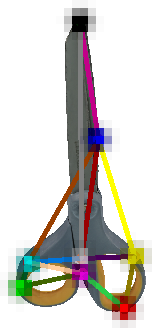

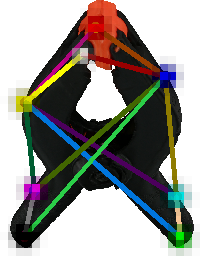

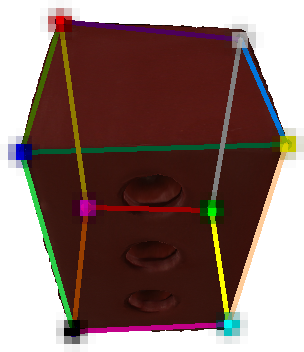

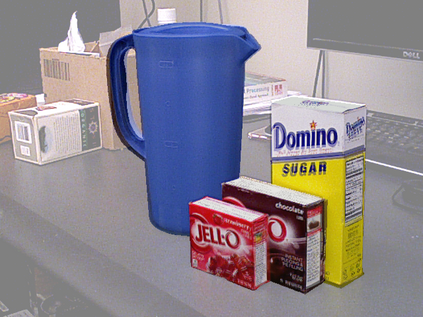

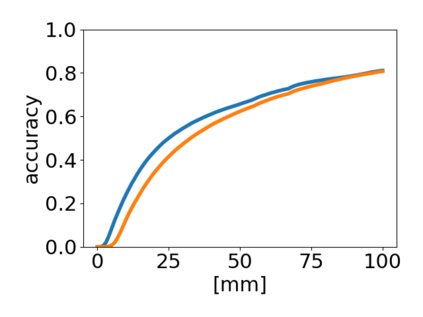

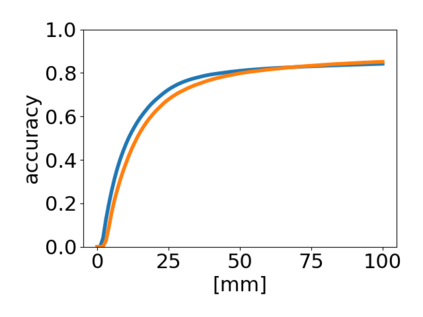

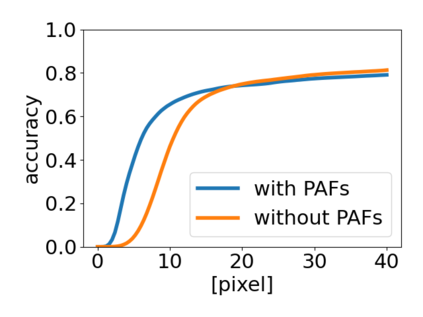

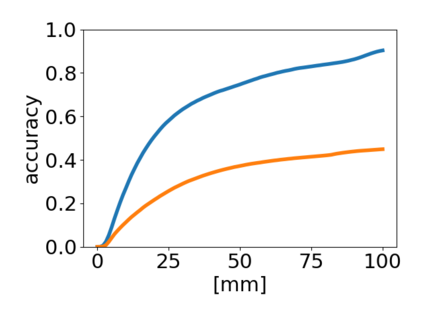

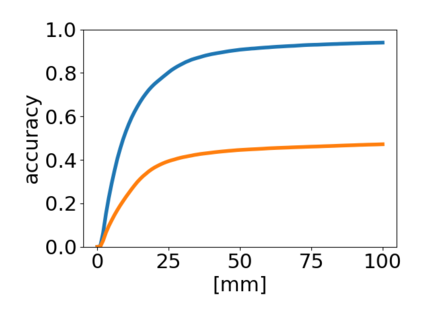

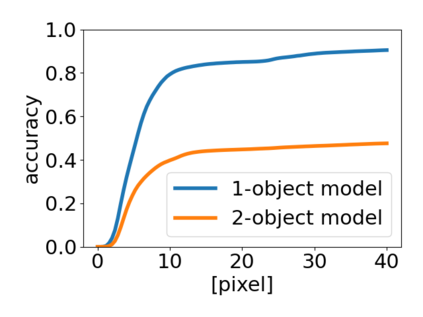

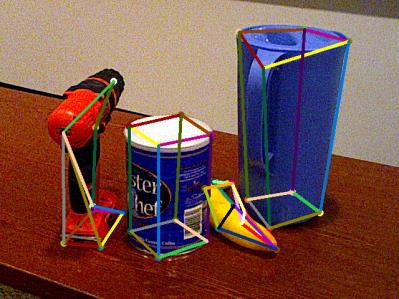

Estimating the 6D pose of objects from RGB images is an important requirement for autonomous service robots that interact with the real world. In this work, we present a two-step pipeline for estimating the 6-DoF pose (translation and orientation) of known objects. First, keypoints and Part Affinity Fields (PAFs) are predicted from the input image using the OpenPose CNN architecture, adopted from human pose estimation. Object poses are then calculated from 2D-3D correspondences between detected and model keypoints via the PnP-RANSAC algorithm. The proposed approach is evaluated on the YCB-Video dataset and achieves accuracy on par with recent methods from the literature. Using PAFs to assemble detected keypoints into object instances proves advantageous over using heatmaps alone. Models trained to predict keypoints of a single object class perform significantly better than models trained for several classes.
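To make the role of PAFs in grouping keypoints more concrete, the following is a minimal sketch of OpenPose-style association scoring: the field is sampled along the segment between two candidate keypoints, and the average alignment with the segment direction serves as the connection score. It is not the authors' implementation. The names `paf_score`, `match_keypoints`, `toy_paf`, `num_samples`, and `min_score` are hypothetical, the PAF is modelled as a plain Python callable rather than a network output, and the greedy matching is a simplifying assumption.

```python
import math


def paf_score(paf, p_a, p_b, num_samples=10):
    """Average alignment between a PAF and the segment from p_a to p_b.

    paf is a hypothetical callable (x, y) -> (vx, vy) standing in for a
    lookup into the predicted field.
    """
    if num_samples < 2:
        raise ValueError("num_samples must be at least 2")
    dx, dy = p_b[0] - p_a[0], p_b[1] - p_a[1]
    length = math.hypot(dx, dy)
    if length == 0.0:
        return 0.0
    ux, uy = dx / length, dy / length  # unit direction of the segment
    total = 0.0
    for i in range(num_samples):
        t = i / (num_samples - 1)  # evenly spaced, both endpoints included
        vx, vy = paf(p_a[0] + t * dx, p_a[1] + t * dy)
        total += vx * ux + vy * uy  # dot product with the segment direction
    return total / num_samples


def match_keypoints(paf, cands_a, cands_b, min_score=0.5):
    """Greedily pair candidates of two keypoint types by descending PAF score."""
    scored = sorted(
        (
            (paf_score(paf, a, b), i, j)
            for i, a in enumerate(cands_a)
            for j, b in enumerate(cands_b)
        ),
        reverse=True,
    )
    used_a, used_b, pairs = set(), set(), []
    for score, i, j in scored:
        if score < min_score:
            break  # remaining pairs are even weaker
        if i in used_a or j in used_b:
            continue  # each candidate joins at most one instance
        used_a.add(i)
        used_b.add(j)
        pairs.append((i, j))
    return pairs


def toy_paf(x, y):
    """Toy field: two horizontal 'objects' at y = 0 and y = 10 (assumed data)."""
    if abs(y) < 1.0 or abs(y - 10.0) < 1.0:
        return (1.0, 0.0)
    return (0.0, 0.0)


if __name__ == "__main__":
    starts = [(0.0, 0.0), (0.0, 10.0)]   # candidates for keypoint type A
    ends = [(5.0, 10.0), (5.0, 0.0)]     # candidates for keypoint type B
    print(match_keypoints(toy_paf, starts, ends))
```

With heatmaps alone, the two type-A and two type-B detections in the toy scene would be ambiguous. The field disambiguates them because only segments that run along an object accumulate a high score. Once keypoints are grouped per instance, each group supplies the 2D-3D correspondences for the PnP-RANSAC step.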
