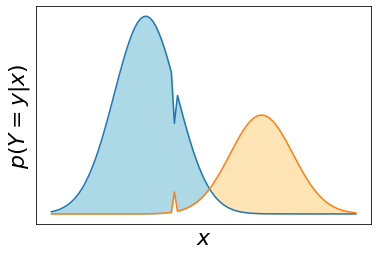

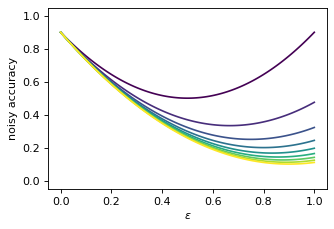

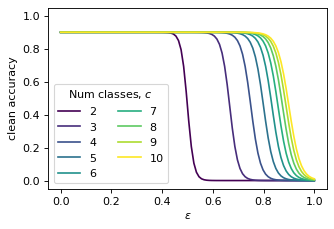

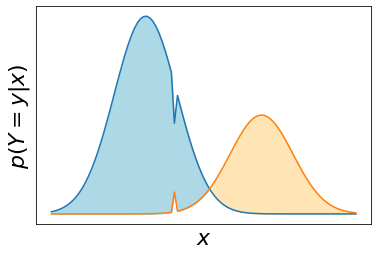

Machine learning classifiers have been demonstrated, both empirically and theoretically, to be robust to label noise under certain conditions; notably, the typical assumption is that label noise is independent of the features given the class label. We provide a theoretical framework that generalizes beyond this assumption by modeling label noise as a distribution over feature space. We show that both the scale and the shape of the noise distribution influence the posterior likelihood, and that the shape of the noise distribution has a stronger impact on classification performance when the noise is concentrated in regions of feature space where the decision boundary can be moved. For the special case of uniform label noise (independent of both the features and the class label), we show that the Bayes optimal classifier for $c$ classes is robust to label noise until the ratio of noisy samples exceeds $\frac{c-1}{c}$ (e.g., 90% for 10 classes), a threshold we call the tipping point. However, for the special case of class-dependent label noise (independent of the features given the class label), the tipping point can be as low as 50%. Most importantly, we show that when the noise distribution targets decision boundaries (i.e., label noise depends directly on the features), classification robustness can drop off even at small noise scales. Even recent label-noise mitigation methods show reduced accuracy when label noise depends on the features. These findings explain why machine learning often handles label noise well when the noise distribution is uniform in feature space, yet they also point to the difficulty of overcoming label noise when it is concentrated in a region of feature space where a decision boundary can move.
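The two tipping points can be checked numerically with a minimal sketch. It assumes a specific uniform noise model that is not spelled out above: with probability $r$ a label is replaced by one of the other $c-1$ classes chosen uniformly at random. Under that assumption the noisy posterior is $q_k = (1-r)\,p_k + r\,\frac{1-p_k}{c-1} = \frac{r}{c-1} + \left(1 - \frac{rc}{c-1}\right) p_k$. This expression increases in $p_k$ exactly when $r < \frac{c-1}{c}$, so the Bayes decision is preserved below that ratio. For class-dependent noise, the sketch assumes a simple pair-flip model (class `src` relabeled as `dst` with probability $r$). The function names (`uniform_noisy_posterior`, `pair_flip_noisy_posterior`, `same_decision`) are hypothetical and chosen only for illustration; they are not the authors' code.

```python
import numpy as np


def uniform_noisy_posterior(p, r):
    """Noisy-label posterior P(noisy label = k | x) under an assumed uniform model:
    with probability r the label is replaced by one of the other c-1 classes,
    chosen uniformly at random."""
    p = np.asarray(p, dtype=float)
    c = p.size
    # Class k keeps its own mass with prob (1 - r) and receives a 1/(c-1)
    # share of the flipped mass from every other class.
    return (1.0 - r) * p + r * (1.0 - p) / (c - 1)


def uniform_tipping_point(c):
    """Noise ratio above which the ordering of uniform_noisy_posterior reverses."""
    return (c - 1) / c


def pair_flip_noisy_posterior(p, r, src, dst):
    """Noisy-label posterior under an assumed class-dependent model: labels of
    class `src` become `dst` with probability r; other labels are unchanged."""
    q = np.asarray(p, dtype=float).copy()
    moved = r * q[src]
    q[src] -= moved
    q[dst] += moved
    return q


def same_decision(p, q):
    """True if the Bayes decision (argmax) is unchanged by the label noise."""
    return int(np.argmax(p)) == int(np.argmax(q))


# Ten classes, clean posterior favoring class 0 at some point x.
p10 = np.array([0.55] + [0.05] * 9)
print(uniform_tipping_point(10))                                   # 0.9
print(same_decision(p10, uniform_noisy_posterior(p10, 0.85)))      # True
print(same_decision(p10, uniform_noisy_posterior(p10, 0.95)))      # False

# Two classes, a point deep inside class 0 (clean posterior = 1 for class 0).
p2 = np.array([1.0, 0.0])
print(same_decision(p2, pair_flip_noisy_posterior(p2, 0.4, 0, 1)))  # True
print(same_decision(p2, pair_flip_noisy_posterior(p2, 0.6, 0, 1)))  # False
```

Under uniform noise the map from $p$ to $q$ is affine and increasing below the threshold, so the $\frac{c-1}{c}$ tipping point holds at every $x$ regardless of the clean posterior. Under the pair-flip model, 50% is the tipping point only where the clean posterior of `src` is 1. Closer to the decision boundary the decision flips earlier. For example, with $p = (0.7, 0.3)$ the two noisy posteriors are equal at $r = 0.4/1.4 \approx 0.29$.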
