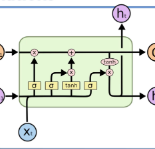

This study compares sequential image classification methods based on recurrent neural networks (RNNs), such as long short-term memory (LSTM) and bidirectional long short-term memory (BiLSTM) architectures. We also review state-of-the-art sequential image classification architectures, focusing mainly on LSTM, BiLSTM, temporal convolutional network, and independent recurrent neural network architectures. RNNs are known to struggle to learn long-term dependencies in the input sequence. We therefore use a simple feature construction method that applies the orthogonal Ramanujan periodic transform to the input sequence. Experiments show that feeding these features to LSTM or BiLSTM networks improves performance drastically. Our goal is to increase training accuracy while simultaneously reducing training time for the LSTM and BiLSTM architectures, rather than to push state-of-the-art results, so we use simple LSTM/BiLSTM architectures. We compare raw sequential input against the constructed features as input to single-layer LSTM and BiLSTM networks on the MNIST and CIFAR datasets. With sequential input, an LSTM network with 128 hidden units trained for five epochs reaches a training accuracy of 33%, whereas the same LSTM network given the constructed features reaches a training accuracy of 90% with 1/3 less training time.
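The abstract does not spell out how the Ramanujan features are built. As a minimal sketch, assuming the standard construction in which a length-N signal is split into mutually orthogonal components lying in the Ramanujan subspaces S_q for each divisor q of N, the code below computes those projections using the identity P_q x = (1/N)(x circularly convolved with c_q), where c_q is the Ramanujan sum. The function `periodic_energy_features` (energy per subspace) is a hypothetical feature vector for illustration, not necessarily the one used in the study, and N = 28 (one MNIST image row) is likewise an assumption about how images are serialized into sequences.

```python
import math


def ramanujan_sum(q: int, n: int) -> int:
    """Ramanujan sum c_q(n): sum of cos(2*pi*k*n/q) over 1 <= k <= q with gcd(k, q) == 1.

    The exact value is always an integer, so the floating-point sum is rounded.
    """
    total = sum(
        math.cos(2 * math.pi * k * n / q)
        for k in range(1, q + 1)
        if math.gcd(k, q) == 1
    )
    return round(total)


def divisors(n: int) -> list[int]:
    """All positive divisors of n in ascending order."""
    return [d for d in range(1, n + 1) if n % d == 0]


def ramanujan_projections(x: list[float]) -> dict[int, list[float]]:
    """Orthogonal projection of x (length N) onto each Ramanujan subspace S_q, q | N.

    P_q x = (1/N) * (x circularly convolved with c_q); in the DFT domain this keeps
    exactly the bins l (0 <= l < N) with gcd(l, N) == N / q.
    """
    N = len(x)
    projections = {}
    for q in divisors(N):
        c = [ramanujan_sum(q, n) for n in range(N)]  # one period of c_q, length N
        projections[q] = [
            sum(x[m] * c[(n - m) % N] for m in range(N)) / N for n in range(N)
        ]
    return projections


def periodic_energy_features(x: list[float]) -> list[float]:
    """Hypothetical feature vector: energy of x in each Ramanujan subspace, ordered by q."""
    proj = ramanujan_projections(x)
    return [sum(v * v for v in proj[q]) for q in sorted(proj)]


# Usage: one 28-pixel "row" that repeats with period 4.
row = [1.0, 0.0, 0.0, 0.0] * 7
proj = ramanujan_projections(row)
features = periodic_energy_features(row)  # one value per divisor 1, 2, 4, 7, 14, 28
```

Because the subspaces are mutually orthogonal and together span the whole signal space, the projections sum back to the input and their energies sum to the input energy. A row that repeats with period 4 places all of its energy in S_1, S_2 and S_4, which is the kind of explicit periodicity information a per-step LSTM input does not expose directly.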
