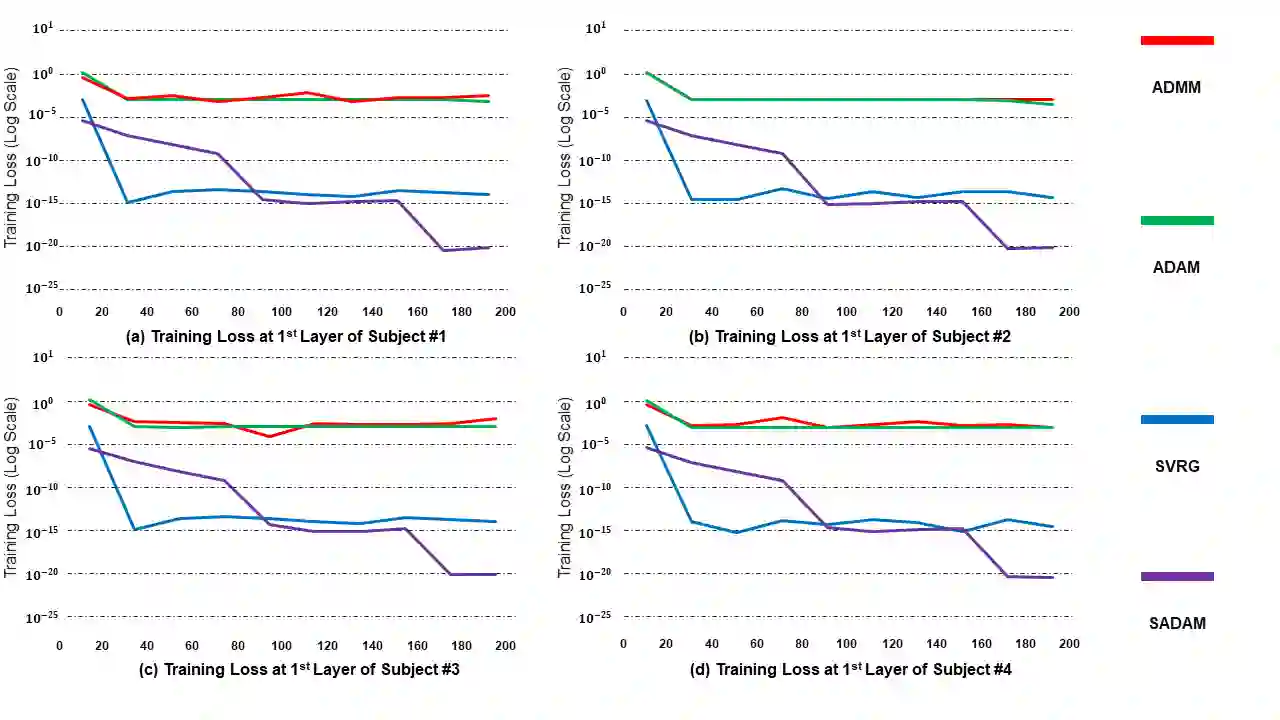

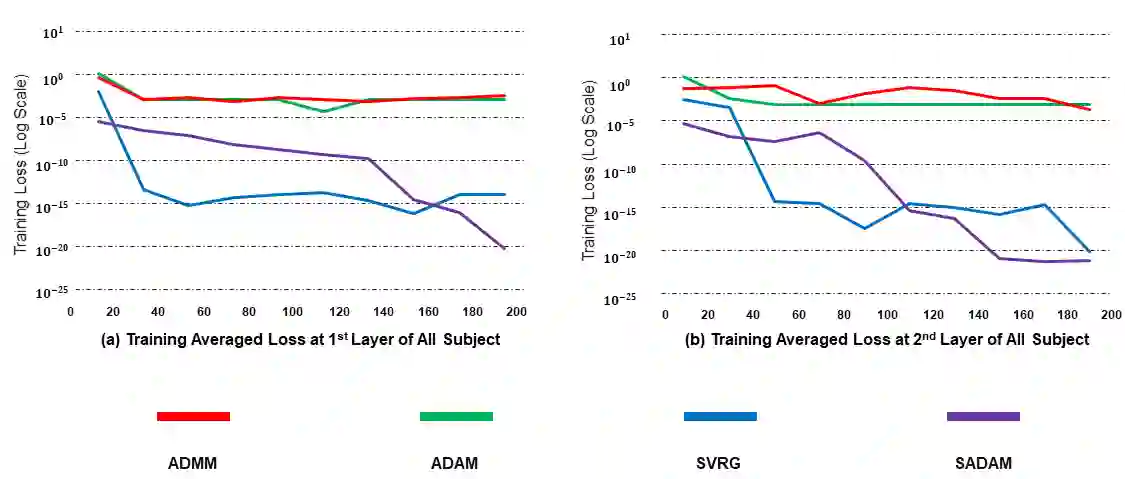

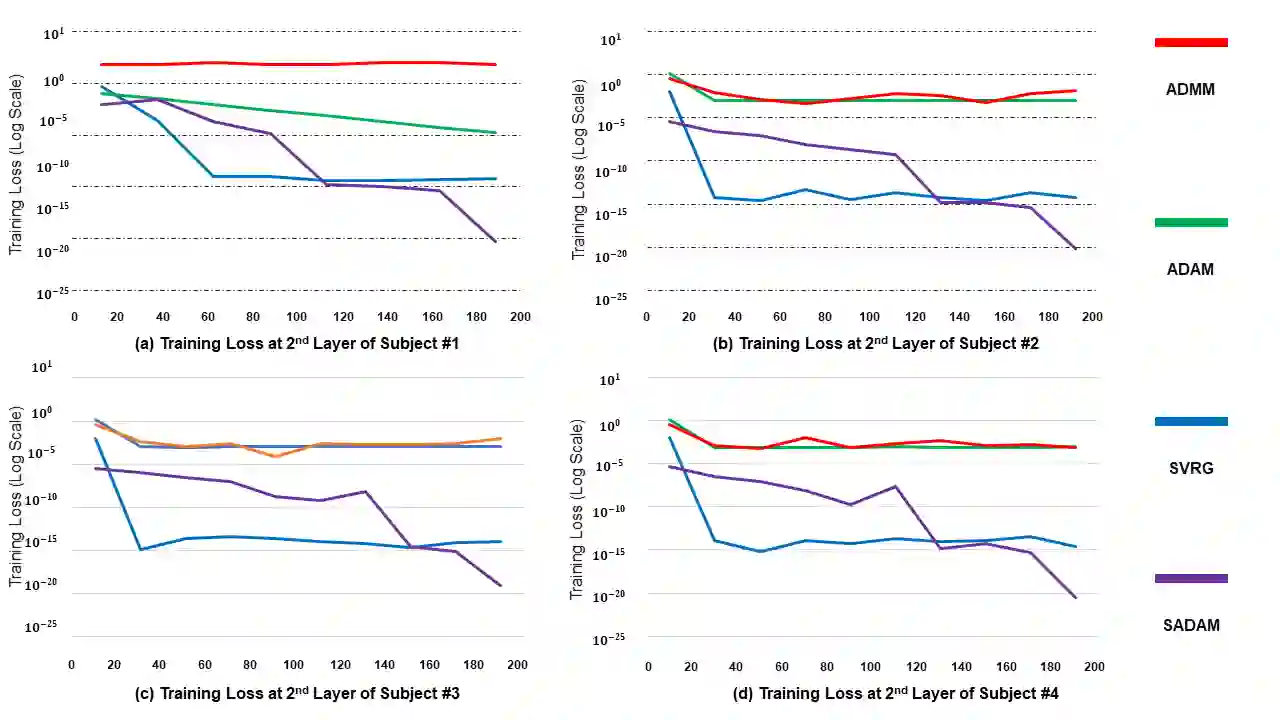

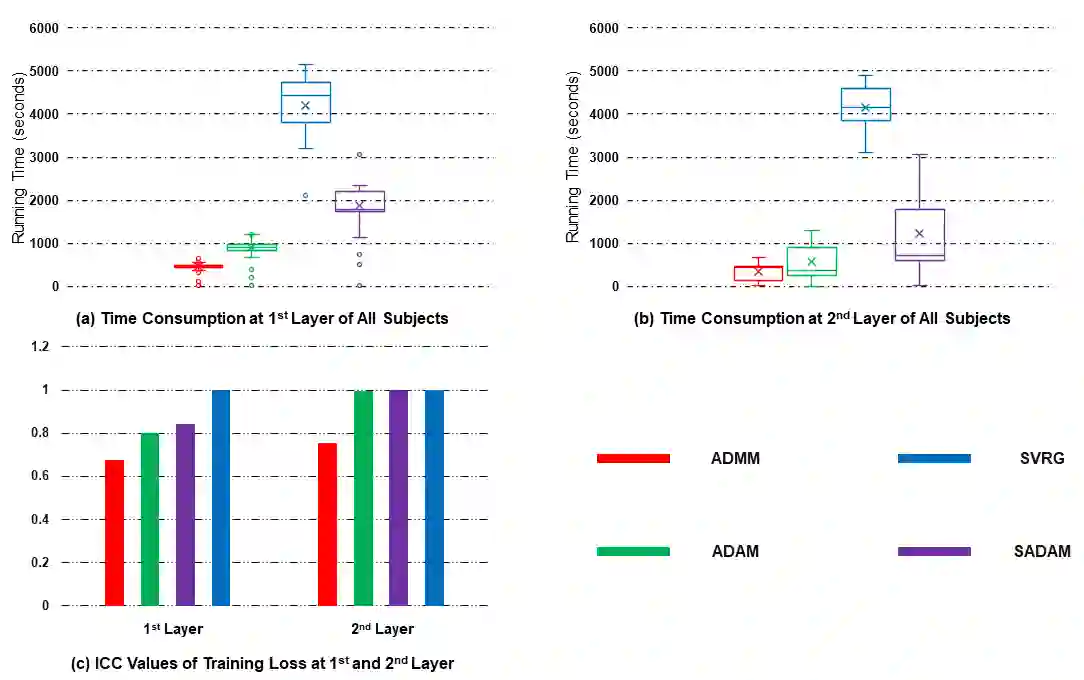

In this work, to help first-order optimizers escape stationary and saddle points efficiently, we propose, analyze, and generalize a stochastic strategy that acts as an operator on a first-order gradient descent algorithm, with the aim of increasing the target accuracy and reducing time consumption. Unlike existing algorithms, the proposed stochastic strategy requires neither batching nor sampling techniques. This enables an efficient implementation and preserves the convergence rate of the underlying first-order optimizer, while substantially improving the target accuracy when optimizing the target functions. In short, the proposed strategy is generalized, applied to Adam, and validated on the decomposition of biomedical signals using Deep Matrix Fitting, in comparison with four other peer optimizers. The validation results show that the proposed random strategy can be easily generalized to first-order optimizers and efficiently improves the target accuracy.
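The abstract does not spell out the proposed operator, so the following is only a minimal sketch of the general idea of adding randomness to a first-order method so that it leaves a saddle point. It is a generic perturbed gradient descent on a toy function, not the authors' strategy and not Adam; the test function, the names `f`, `grad`, and `descend`, and the parameters `noise` and `tol` are all assumptions chosen for illustration.

```python
import random


def f(x, y):
    # Toy objective (an assumption for illustration): a saddle point
    # at (0, 0) and two minima at (0, 1) and (0, -1).
    return x**2 + y**4 / 4 - y**2 / 2


def grad(x, y):
    # Analytic gradient of f.
    return 2 * x, y**3 - y


def descend(x, y, lr=0.1, steps=500, noise=0.0, tol=1e-3, seed=0):
    """Plain gradient descent, optionally with a random kick.

    When noise > 0 and the gradient norm falls below tol (the iterate
    is near a stationary point), the iterate is nudged by a small
    uniform random vector before the usual gradient step. This is a
    generic perturbation scheme, not the operator from the paper.
    """
    rng = random.Random(seed)
    for _ in range(steps):
        gx, gy = grad(x, y)
        if noise > 0 and (gx * gx + gy * gy) ** 0.5 < tol:
            # Near-stationary: apply a small random perturbation.
            x += rng.uniform(-noise, noise)
            y += rng.uniform(-noise, noise)
            gx, gy = grad(x, y)
        x -= lr * gx
        y -= lr * gy
    return x, y


# Starting on the line y = 0, the y-gradient is exactly zero, so the
# unperturbed run slides into the saddle point at the origin.
plain = descend(1.0, 0.0)
perturbed = descend(1.0, 0.0, noise=0.01)

print("plain:    ", plain, f(*plain))
print("perturbed:", perturbed, f(*perturbed))
```

In this toy setting the unperturbed run stalls at the saddle, while the perturbed run moves on to one of the two minima. Because the sketch perturbs at every near-stationary point, it also jitters slightly around the minimum it reaches; a practical scheme would need a rule for when to stop perturbing.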
