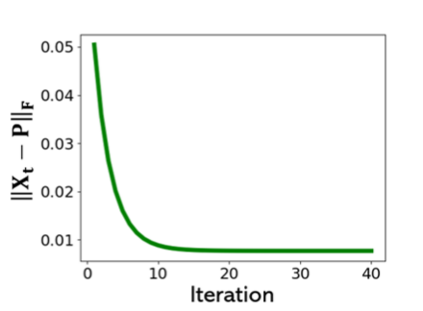

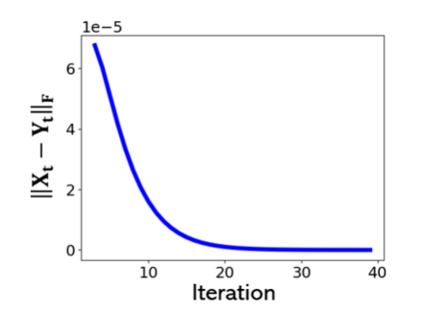

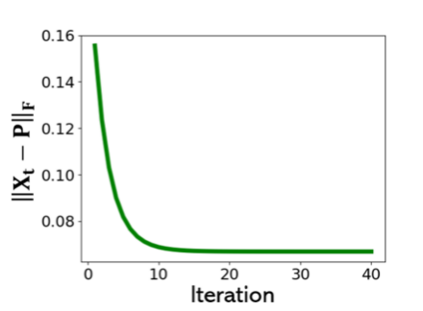

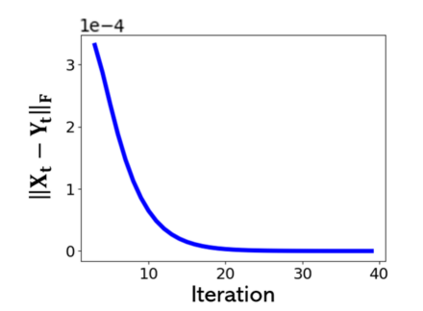

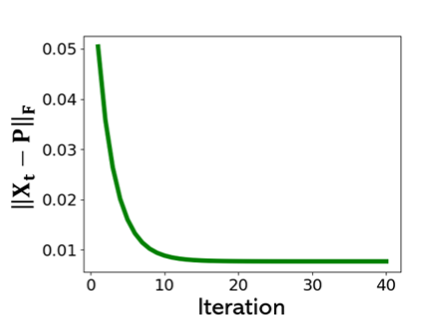

We consider optimization problems in which the goal is to find a $k$-dimensional subspace of $\mathbb{R}^n$, $k \ll n$, which minimizes a convex and smooth loss. Such problems generalize the fundamental task of principal component analysis (PCA) to include robust and sparse counterparts, and logistic PCA for binary data, among others. While this problem is not convex, it admits natural algorithms with very efficient iterations and memory requirements, which is highly desirable in high-dimensional regimes; however, arguing about their fast convergence to a globally optimal solution is difficult. On the other hand, there exists a simple convex relaxation for which convergence to the global optimum is straightforward; however, the corresponding algorithms are not efficient when the dimension is very large. In this work we present a natural deterministic sufficient condition under which the optimal solution to the convex relaxation is unique and is also the optimal solution to the original nonconvex problem. Mainly, we prove that under this condition, a natural highly efficient nonconvex gradient method, which we refer to as *gradient orthogonal iteration*, when initialized with a "warm start", converges linearly for the nonconvex problem. We also establish similar results for the nonconvex projected gradient method, and for the Frank-Wolfe method when applied to the convex relaxation. We conclude with empirical evidence on synthetic data which demonstrates the appeal of our approach.
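To make the idea of a gradient step followed by re-orthonormalization concrete, here is a minimal NumPy sketch. The abstract does not spell out the update rule, so the form used here, $Q \leftarrow \mathrm{QR}\big(Q - \eta \nabla f(QQ^\top) Q\big)$, is an assumption, as are the function names, the step size, and the test problem. The demo uses the plain PCA loss $f(X) = -\langle A, X \rangle$, for which this update reduces to classical orthogonal iteration on $I + \eta A$.

```python
import numpy as np

def gradient_orthogonal_iteration(grad_f, Q0, step, iters):
    """Hypothetical sketch (update rule assumed, not taken from the abstract):
    Q <- QR(Q - step * grad_f(Q Q^T) @ Q), with Q an n x k orthonormal basis."""
    Q = Q0
    for _ in range(iters):
        # Gradient of the loss at the projection matrix X = Q Q^T.
        # Forming X explicitly is for clarity only; it costs O(n^2) memory.
        G = grad_f(Q @ Q.T)
        Z = Q - step * (G @ Q)      # gradient step on the n x k factor
        Q, _ = np.linalg.qr(Z)      # restore orthonormal columns
    return Q

def pca_demo(n=50, k=3, seed=0):
    """Run the sketch on f(X) = -<A, X> and return the distance between the
    computed projection and the true top-k eigenspace projection of A."""
    rng = np.random.default_rng(seed)
    U, _ = np.linalg.qr(rng.standard_normal((n, n)))
    # Synthetic spectrum with a clear gap after the k-th eigenvalue.
    eigvals = np.concatenate([np.linspace(10.0, 8.0, k),
                              np.linspace(1.0, 0.0, n - k)])
    A = (U * eigvals) @ U.T
    grad_f = lambda X: -A           # gradient of the linear PCA loss
    # Random start suffices for this benign loss; the abstract's guarantee
    # for general losses assumes a warm start instead.
    Q0, _ = np.linalg.qr(rng.standard_normal((n, k)))
    Q = gradient_orthogonal_iteration(grad_f, Q0, step=0.1, iters=300)
    P_true = U[:, :k] @ U[:, :k].T
    return Q, np.linalg.norm(Q @ Q.T - P_true)
```

Calling `pca_demo()` returns the final basis and its projection error, which should be near machine precision on this well-separated spectrum. For other smooth convex losses (for example robust or logistic PCA variants), only `grad_f` would change, and an efficient implementation would apply the gradient to $Q$ without materializing the $n \times n$ matrix.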
